I wanted to start a technology RAQ (Reasonably Asked Questions) for blockchain that addresses weightier technical questions and, hopefully, merges technology with conceptual understanding. I am thankful for some wonderful diagrams I found on the internet that visualize concepts very nicely. Feel free to correct as well as contribute.

## Why can't we have multiple genesis blocks in a proof-of-work based system?

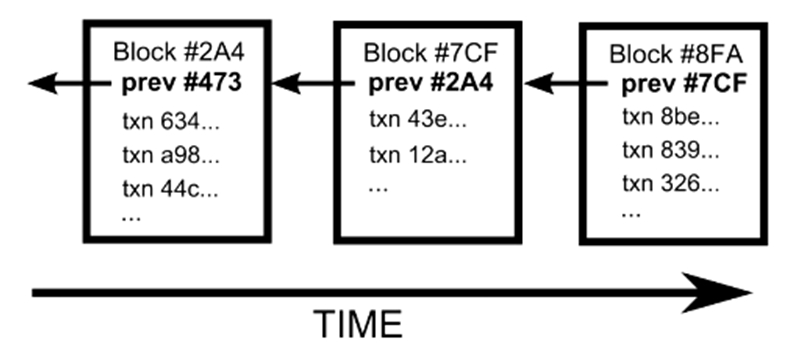

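The genesis block is the very first block mined, and in clients such as Bitcoin it is hardcoded into the software. It is not possible by design to have multiple genesis blocks: every block commits to the hash of its parent, so every valid chain must trace back to the same root, and the distributed consensus protocol only ever chooses among chains that share it. Remember that the winning chain is the "longest" one; strictly speaking, Bitcoin measures this as the most cumulative proof of work rather than simply the height of the last block.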
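A minimal sketch of the fork-choice rule, assuming a toy `Block` type with a `prev_hash` link and a per-block `work` figure (in Bitcoin, work is derived from each block's difficulty target). All names, including the `GENESIS_HASH` placeholder, are hypothetical. A candidate chain is considered only if it starts at the shared genesis block, and among those the one with the most cumulative work wins, which is not necessarily the one with the most blocks.

```python
from dataclasses import dataclass
from typing import List, Optional

# Hypothetical hardcoded genesis hash shared by every node (illustrative value).
GENESIS_HASH = "genesis"

@dataclass
class Block:
    hash: str
    prev_hash: Optional[str]  # None only for the genesis block
    work: int                 # toy value for the expected hashes needed to find this block

def is_valid_chain(chain: List[Block]) -> bool:
    """Accept a chain only if it starts at the shared genesis block
    and every block points at the hash of the block before it."""
    if not chain or chain[0].hash != GENESIS_HASH or chain[0].prev_hash is not None:
        return False
    return all(cur.prev_hash == prev.hash for prev, cur in zip(chain, chain[1:]))

def choose_best_chain(candidates: List[List[Block]]) -> Optional[List[Block]]:
    """Pick the valid chain with the most cumulative work (not simply the most blocks)."""
    valid = [c for c in candidates if is_valid_chain(c)]
    if not valid:
        return None
    return max(valid, key=lambda c: sum(b.work for b in c))

genesis = Block(GENESIS_HASH, None, 1)
chain_a = [genesis, Block("a1", GENESIS_HASH, 10), Block("a2", "a1", 10)]  # 3 blocks, work 21
chain_b = [genesis, Block("b1", GENESIS_HASH, 30)]                         # 2 blocks, work 31
rogue = [Block("other-genesis", None, 1), Block("r1", "other-genesis", 100)]
best = choose_best_chain([chain_a, chain_b, rogue])
print([b.hash for b in best])  # ['genesis', 'b1']
```

Note how `chain_b` wins with fewer blocks, and how `rogue`, which starts from a different genesis block, is never even a candidate despite carrying the most work.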

That said, there have been a few proposals to reset the network and create a new genesis block whenever performance problems arise from the length of the blockchain. Resetting the blockchain with a new genesis block is much like a company closing its books at year end and carrying the balances forward into a new ledger.

If a new genesis block were artificially introduced, all future transactions would have to build on it, and transactions spending outputs recorded in the older blocks would have to be rejected. All unspent coins in the network would therefore need to be carried over as output UTXOs of a coinbase transaction in the new genesis block; just like a standard coinbase transaction, it would have no input UTXOs. Provision must also be made for all nodes to accept the new genesis block and discard the old chain; otherwise the network would split into unauthorized forks.
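A rough sketch of the carry-forward step. It assumes a toy UTXO set held in a dict keyed by `(txid, output_index)`; `Output`, `Transaction` and `make_snapshot_genesis_coinbase` are hypothetical names, not part of any real client. Every unspent output becomes an output of one coinbase transaction with no inputs, so the total value in circulation is preserved.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Tuple

Outpoint = Tuple[str, int]  # (transaction id, output index)

@dataclass(frozen=True)
class Output:
    owner: str  # toy stand-in for a locking script / address
    value: int  # amount in the smallest unit (e.g. satoshis)

@dataclass
class Transaction:
    inputs: List[Outpoint] = field(default_factory=list)
    outputs: List[Output] = field(default_factory=list)

def make_snapshot_genesis_coinbase(utxo_set: Dict[Outpoint, Output]) -> Transaction:
    """Carry every unspent output forward into one input-less coinbase transaction.
    Outputs are sorted by outpoint so that every node builds the identical transaction."""
    carried = [utxo_set[op] for op in sorted(utxo_set)]
    return Transaction(inputs=[], outputs=carried)

utxos = {
    ("tx9", 1): Output("bob", 700),
    ("tx2", 0): Output("alice", 5_000),
    ("tx2", 1): Output("carol", 300),
}
coinbase = make_snapshot_genesis_coinbase(utxos)
print([o.owner for o in coinbase.outputs])  # ['alice', 'carol', 'bob']
```

Sorting by outpoint matters: every node must build a byte-for-byte identical genesis block, otherwise their genesis hashes would differ and the network would split.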

A simpler and more obvious decongestion strategy, short of such a radical overhaul, is to prune spent transactions from the Merkle tree of each block and archive old blocks.
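Pruning is safe because a block header commits only to the Merkle root. The sketch below uses Bitcoin-style double SHA-256 and duplicates the last hash on odd-sized levels, but ignores byte-order details and real transaction serialization; the transaction bytes are made-up placeholders. It shows that once two spent transactions are replaced by their parent hash (a "stub"), the root recomputed from the pruned tree is unchanged.

```python
import hashlib
from typing import List

def dsha256(data: bytes) -> bytes:
    """Double SHA-256, as used for Bitcoin's Merkle trees."""
    return hashlib.sha256(hashlib.sha256(data).digest()).digest()

def merkle_root(leaf_hashes: List[bytes]) -> bytes:
    """Compute a Merkle root, duplicating the last hash when a level has an odd count."""
    level = list(leaf_hashes)
    while len(level) > 1:
        if len(level) % 2 == 1:
            level.append(level[-1])
        level = [dsha256(level[i] + level[i + 1]) for i in range(0, len(level), 2)]
    return level[0]

# Four placeholder transactions in one block.
txs = [b"tx-a", b"tx-b", b"tx-c", b"tx-d"]
leaves = [dsha256(t) for t in txs]
full_root = merkle_root(leaves)

# Suppose tx-a and tx-b are fully spent: keep only their parent hash as a stub
# and discard the transactions themselves.
stub_ab = dsha256(leaves[0] + leaves[1])
pruned_root = dsha256(stub_ab + dsha256(leaves[2] + leaves[3]))

print(full_root == pruned_root)  # True
```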

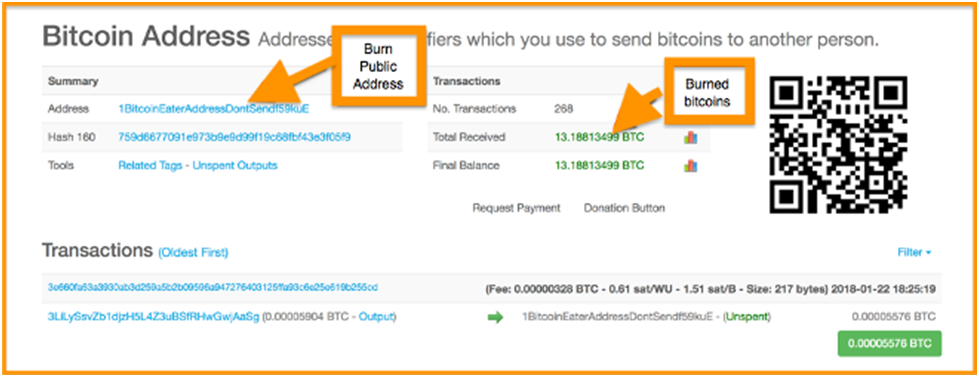

## When a coin is burnt in a blockchain, is there a physical transaction that will remember the operation and take the coin off the unspent list?

In the standard implementation, there is no way to destroy or blacklist a coin. In a distributed system, it would be cumbersome to re-check the validity of a coin itself once it has been created and accepted into the system.

The most common approach is to commit a transaction to a ***sink*** public key holder who will never take part in any spending transaction from then on. To make sure that the coin cannot be brought back into circulation, the recipient is chosen so that no one holds a valid private key for it. Another method is to exchange the coin for a special coin whose value is zero. Some implementations also use special transactions that *deliberately lie* about the output UTXO being zero.
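One concrete mechanism, taken from Bitcoin rather than from the sources above: an output whose locking script begins with the `OP_RETURN` opcode (byte `0x6a`) is provably unspendable, so nodes never add it to the UTXO set in the first place. The sketch below illustrates that rule under a toy transaction format where each output carries raw `script` bytes; `TxOut`, `Tx` and `apply_transaction` are hypothetical names and the scripts are placeholders, not valid Bitcoin scripts.

```python
from dataclasses import dataclass
from typing import Dict, List, Tuple

OP_RETURN = 0x6A  # Bitcoin opcode that marks an output as provably unspendable

Outpoint = Tuple[str, int]  # (transaction id, output index)

@dataclass(frozen=True)
class TxOut:
    value: int
    script: bytes  # toy locking script

@dataclass
class Tx:
    txid: str
    inputs: List[Outpoint]
    outputs: List[TxOut]

def is_provably_unspendable(script: bytes) -> bool:
    return len(script) > 0 and script[0] == OP_RETURN

def apply_transaction(utxo_set: Dict[Outpoint, TxOut], tx: Tx) -> None:
    """Spend the inputs, then add every output except provably unspendable ones.
    Value sent to an OP_RETURN output is burnt: it never enters the UTXO set."""
    for outpoint in tx.inputs:
        del utxo_set[outpoint]  # KeyError if the input does not exist or was spent
    for index, out in enumerate(tx.outputs):
        if not is_provably_unspendable(out.script):
            utxo_set[(tx.txid, index)] = out

utxos = {("funding", 0): TxOut(1_000, b"pay-to-alice")}
burn = Tx("burn1", [("funding", 0)], [
    TxOut(600, b"pay-to-alice-change"),        # ordinary change output
    TxOut(400, bytes([OP_RETURN]) + b"burn"),  # 400 units destroyed
])
apply_transaction(utxos, burn)
print(sorted(utxos))  # [('burn1', 0)]
```

So in this scheme there is a physical transaction recording the burn, and the burnt value is simply never placed on the unspent list.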

## Will a node walk through all blocks and find the transaction for verification?

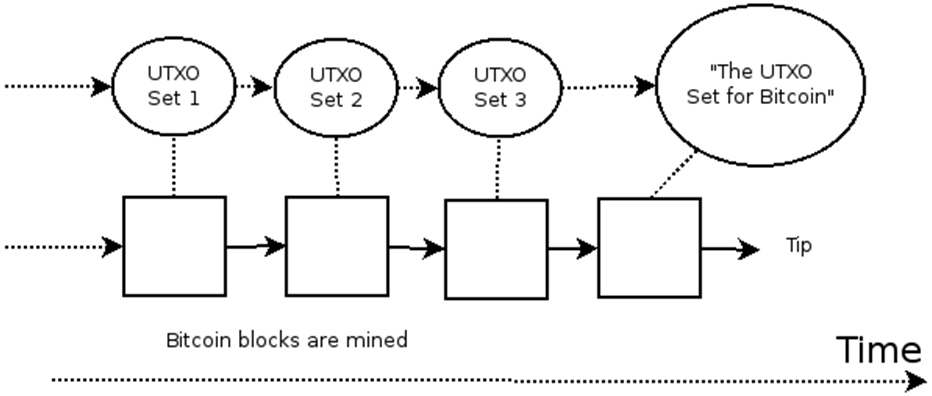

Most blockchain implementations keep the set of unspent transaction output (UTXO) references in a separate database (e.g. LevelDB) so that any transaction input that refers to a UTXO can be looked up quickly without having to walk the chain.

Each node trusts the blocks it has already validated itself. So there is no compelling reason to look up the block that contains a previous transaction, as long as the new transaction itself can be validated.

In other words, once a block has been validated and accepted into the chain, the node does not need to revisit it. Each time a new block is accepted, the UTXO set is updated: the outputs spent by its transactions are removed and the outputs they create are added.

Typically an external application such as a wallet stores references to the UTXOs its owner controls. Each reference consists of the transaction ID plus the index of the output within that transaction's output vector.
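A minimal in-memory sketch of such a store, assuming a plain Python dict in place of LevelDB. The key is the outpoint, `(txid, output_index)`, so checking a transaction input is a single hash lookup no matter how deep in the chain the funding transaction sits. `OutPoint`, `Coin`, `UtxoSet` and its methods are hypothetical names.

```python
from typing import Dict, NamedTuple, Optional

class OutPoint(NamedTuple):
    txid: str          # id of the transaction that created the output
    output_index: int  # position of the output in that transaction's output vector

class Coin(NamedTuple):
    value: int
    owner: str   # toy stand-in for the locking script
    height: int  # block height at which the output was created

class UtxoSet:
    """Toy UTXO store; a real node would back this with a key-value database."""

    def __init__(self) -> None:
        self._coins: Dict[OutPoint, Coin] = {}

    def add(self, outpoint: OutPoint, coin: Coin) -> None:
        self._coins[outpoint] = coin

    def lookup(self, outpoint: OutPoint) -> Optional[Coin]:
        # Average O(1): no walk over historical blocks is needed.
        return self._coins.get(outpoint)

    def spend(self, outpoint: OutPoint) -> Coin:
        # Removing the entry is what makes a second spend of the same output fail.
        coin = self._coins.pop(outpoint, None)
        if coin is None:
            raise ValueError(f"missing or already spent: {outpoint}")
        return coin

utxos = UtxoSet()
utxos.add(OutPoint("a1b2", 0), Coin(50_000, "alice", height=12))
print(utxos.lookup(OutPoint("a1b2", 0)))  # Coin(value=50000, owner='alice', height=12)
utxos.spend(OutPoint("a1b2", 0))
print(utxos.lookup(OutPoint("a1b2", 0)))  # None
```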

## Could the UTXO set somehow be out of sync with the ledger?

*source: forkdrop.io*

Each node always builds its own UTXO set locally; the set is not shared across the network. A node updates its UTXO set only after it has accepted a block, so no synchronization protocol is required. It also means that each node could choose to rebuild the UTXO set from scratch on demand. Of course, the process would be slow, because the node would have to walk the entire chain and inspect every transaction in every block between the genesis block and the current block height.
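Rebuilding from scratch amounts to replaying the chain in order, starting at the genesis block, because a transaction can only spend outputs created before it. A minimal sketch, assuming a toy chain represented as a list of blocks, each a list of transaction dicts with hypothetical keys `txid`, `inputs` (a list of `(txid, output_index)` outpoints) and `outputs` (a list of values); transactions with no inputs play the role of coinbase transactions.

```python
from typing import Dict, List, Tuple

Outpoint = Tuple[str, int]  # (txid, output index)

def rebuild_utxo_set(chain: List[List[dict]]) -> Dict[Outpoint, int]:
    """Replay every block from genesis to the tip and return {outpoint: value}."""
    utxos: Dict[Outpoint, int] = {}
    for height, block in enumerate(chain):
        for tx in block:
            # Remove the outputs this transaction spends.
            for outpoint in tx["inputs"]:
                if outpoint not in utxos:
                    raise ValueError(f"block {height}: {tx['txid']} spends unknown output {outpoint}")
                del utxos[outpoint]
            # Add the outputs it creates.
            for index, value in enumerate(tx["outputs"]):
                utxos[(tx["txid"], index)] = value
    return utxos

chain = [
    [{"txid": "cb0", "inputs": [], "outputs": [50]}],               # block 0 (genesis)
    [{"txid": "cb1", "inputs": [], "outputs": [50]},
     {"txid": "t1", "inputs": [("cb0", 0)], "outputs": [30, 20]}],  # spends cb0's output
    [{"txid": "t2", "inputs": [("t1", 1)], "outputs": [20]}],
]
print(rebuild_utxo_set(chain))  # {('cb1', 0): 50, ('t1', 0): 30, ('t2', 0): 20}
```

Bitcoin Core, for instance, exposes this kind of full rebuild through its `-reindex-chainstate` startup option.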

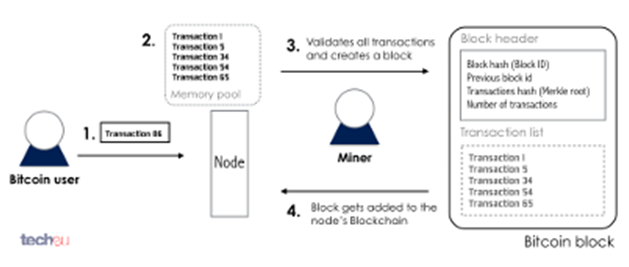

## Will it not be terribly slow for the blockchain miner nodes to try and find the previous transaction in order to commit submitted transactions?

The miner receives transactions through the network broadcast and validates them. In practice, a transaction reaching a miner has usually already been checked by the nodes that relayed it, since nodes only relay transactions they consider valid. Validating a transaction essentially means sanity-checking its structure, verifying that the input UTXOs exist and are unspent, and checking the signatures that unlock them. Only a transaction that passes these checks is considered for inclusion in a block.

The previous transaction could be buried under a great many blocks that have already been accepted. It makes no sense for the miner to search the chain for it: like any full node, the miner looks the inputs up in its UTXO set, and its incentive is to spend its effort on mining rather than on re-verifying history. A miner could deliberately include a transaction with nonexistent inputs in defiance of the protocol, but the block would still have to be accepted by the other nodes, which validate every transaction independently, so the block would be rejected and the miner would forfeit its reward.
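The validation checks described above can be sketched as a single function. This is a simplified illustration under a toy format: the caller-supplied `verify_signature` callback stands in for real script and signature verification, and `Coin`, `Tx`, `validate_tx` and `toy_verify` are hypothetical names. It rejects malformed structure, inputs missing from the UTXO set (nonexistent or already spent), inputs repeated within the same transaction, and outputs worth more than the inputs.

```python
from dataclasses import dataclass
from typing import Callable, Dict, List, Tuple

Outpoint = Tuple[str, int]  # (txid, output index)

@dataclass(frozen=True)
class Coin:
    value: int
    owner: str  # toy stand-in for the locking script

@dataclass
class Tx:
    inputs: List[Outpoint]
    outputs: List[Coin]
    signer: str  # toy stand-in for the unlocking data

def validate_tx(tx: Tx, utxos: Dict[Outpoint, Coin],
                verify_signature: Callable[[Tx, Coin], bool]) -> Tuple[bool, str]:
    # 1. Structural sanity checks.
    if not tx.inputs or not tx.outputs:
        return False, "empty inputs or outputs"
    if any(out.value < 0 for out in tx.outputs):
        return False, "negative output value"
    if len(set(tx.inputs)) != len(tx.inputs):
        return False, "duplicate input"
    total_in = 0
    for outpoint in tx.inputs:
        # 2. Every input must reference an existing, unspent output (one lookup, no chain walk).
        coin = utxos.get(outpoint)
        if coin is None:
            return False, f"missing or spent input {outpoint}"
        # 3. The spender must be able to unlock that output.
        if not verify_signature(tx, coin):
            return False, f"bad signature for {outpoint}"
        total_in += coin.value
    # 4. Outputs may not exceed inputs; any difference is the miner's fee.
    if sum(out.value for out in tx.outputs) > total_in:
        return False, "outputs exceed inputs"
    return True, "ok"

def toy_verify(tx: Tx, coin: Coin) -> bool:
    return tx.signer == coin.owner  # placeholder for real signature checking

utxos = {("f00d", 0): Coin(100, "alice")}
good = Tx([("f00d", 0)], [Coin(90, "bob")], signer="alice")
bad = Tx([("beef", 3)], [Coin(10, "bob")], signer="alice")
print(validate_tx(good, utxos, toy_verify))  # (True, 'ok')
print(validate_tx(bad, utxos, toy_verify))   # (False, "missing or spent input ('beef', 3)")
```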

## Will blockchain not be at great risk if a large number of nodes join simultaneously?

*source: eastwaco*

It is true that a blockchain is only as incorruptible as its honest participants; in proof of work, what matters is the share of hash power that honest nodes control rather than the raw number of nodes. The essential problem is how to ensure that a growing blockchain network is not overrun by an arbitrary number of dishonest full nodes joining with enough combined hash power to corrupt the network.

Conceptually, there is an assumption that any node that wants to join is not inherently malicious and wants to work to make money. It is also hard to get many unrelated nodes to behave maliciously together. Financially, it makes more sense to mine for coins than to destroy the network.

But that does not mean we can rule out a maniacal attacker who has access to immense hash power and just wants to bring the network down!

Technically, a single entity with a huge number of machines at its disposal could try to overrun the network. It is also conceivable that a blockchain virus could infect a large number of nodes and thereby gain illegitimate control, although we have not seen one yet.

**So what are the safeguards that exist to prevent such things?**

* Some implementations, typically permissioned ones, run a consensus protocol to admit full nodes. This means that a large number of nodes cannot be added simultaneously to shift the balance of power

* The difficulty of generating a new block is periodically adjusted based on the aggregate hash rate (upwards as the hash rate grows) so that blocks keep arriving at a steady rate and are not minted disproportionately fast

* Each node that joins has to download and verify the entire blockchain ledger, which is time-consuming and depends on bandwidth and storage performance. It could take days for a node to become fully operational, which staggers the arrival of new nodes

* If the identity of infected nodes were known (assuming that were even possible), a hard fork could be enough to completely isolate them from the network, although replay attacks across the two forks then become a possibility
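The difficulty adjustment mentioned in the list above can be made concrete for Bitcoin: every 2016 blocks, the new target is the old target scaled by how long those blocks actually took compared with the intended two weeks (2016 × 600 seconds), with the change clamped to a factor of four in either direction. A lower target means a higher difficulty. This sketch reproduces only that arithmetic; it ignores the compact `nBits` encoding and the maximum-target cap, and the function name is hypothetical.

```python
RETARGET_INTERVAL = 2016                              # blocks between adjustments
TARGET_SPACING = 600                                  # desired seconds per block
TARGET_TIMESPAN = RETARGET_INTERVAL * TARGET_SPACING  # two weeks in seconds

def retarget(old_target: int, actual_timespan: int) -> int:
    """Return the new proof-of-work target (lower target = harder to mine)."""
    # Clamp so that a single adjustment changes difficulty by at most 4x.
    clamped = max(TARGET_TIMESPAN // 4, min(actual_timespan, TARGET_TIMESPAN * 4))
    return old_target * clamped // TARGET_TIMESPAN

old = 1 << 200
# Hash rate doubled, so the last 2016 blocks took one week instead of two:
new = retarget(old, TARGET_TIMESPAN // 2)
print(new == old // 2)  # True: the target halves, i.e. difficulty doubles
```

The clamp is one reason a sudden influx of hash power cannot be absorbed instantly: a single adjustment can raise the difficulty by at most four times.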

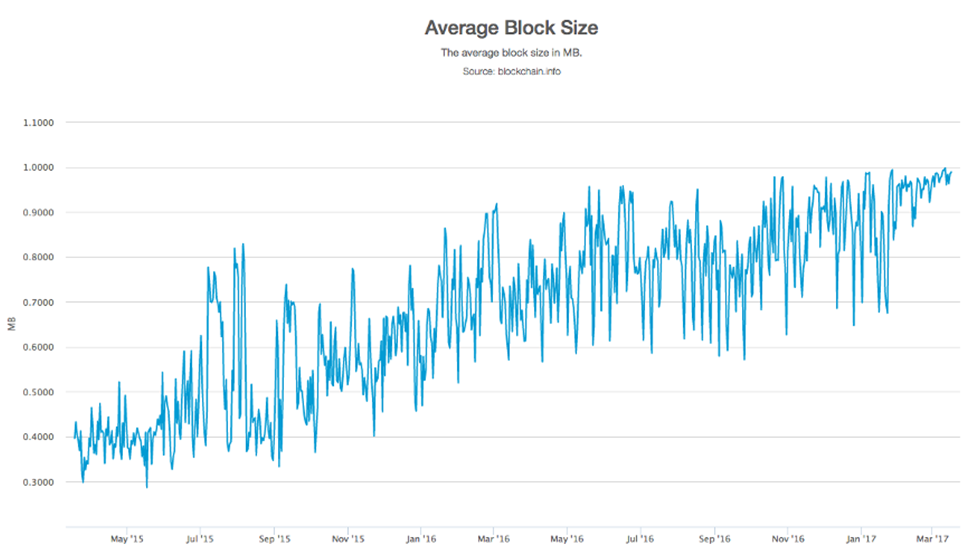

## Is there an issue with the size of the block in a blockchain?

[source: Alex Evans](https://medium.com/@alexevo287/bitcoins-scalability-problem-c8f2df1523d9)

Many blockchains currently use a fixed maximum block size (for example, Bitcoin's original 1 MB limit), and many say that this will be the downfall of the technology itself. Since there is a limit on the number of transactions that can be aggregated into a block, there is corresponding pressure to get blocks approved quickly. The issues are all about scaling: processing as many transactions as possible, as quickly as possible.
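A back-of-the-envelope calculation shows how a size cap bounds throughput. It assumes a 1 MB block, an average transaction of about 250 bytes (an assumed round figure; real averages vary), and Bitcoin's target of one block every 600 seconds.

```python
BLOCK_SIZE_BYTES = 1_000_000  # assumed 1 MB block size limit
AVG_TX_BYTES = 250            # assumed average transaction size
BLOCK_INTERVAL_S = 600        # target seconds between blocks

tx_per_block = BLOCK_SIZE_BYTES // AVG_TX_BYTES   # transactions that fit in one block
tx_per_second = tx_per_block / BLOCK_INTERVAL_S   # sustained throughput
print(tx_per_block, round(tx_per_second, 1))      # 4000 6.7
```

Doubling either the block size or the block rate doubles this figure, but it also doubles the data every full node must download and relay.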

Smaller blocks mean more network interaction, and consensus-based approvals take time. Larger blocks take longer to propagate, which exposes network vulnerabilities and caching issues while a node is waiting for a block to arrive. They also mean higher paging overhead when transferring blocks between disk and memory.

Since storage is cheap, memory is likely to be the bottleneck in future implementations. Larger blocks also burden low-bandwidth connections and thus reduce the number of publicly contributed full nodes. This is undesirable because the advantage of a blockchain comes from the democratic nature of its participating nodes.

In general, there needs to be a predictable stream of transactions flowing to the miners and being added to the blockchain. If blocks are not filled with transactions, the network's capacity is underutilized. If miners keep waiting for transactions to build up, turnaround times rise. And if the block size is so small that more blocks have to be mined to carry the same load, transaction confirmations keep getting pushed out and overall performance nosedives.

## Why not use variable-sized blocks to fit the transaction density in the pool?

There is nothing in the technology that prevents variable-sized blocks from being used. The reasons are more financial: variable sizes bring out some quirky little issues with incentives.

Using Bitcoin as an example, suppose the network had different reward (coinbase) tiers for different block sizes, ranging between 12.5 BTC and 1 BTC. Miners would then do less work for small blocks and more work for large blocks, and the outcome seems obvious: miners would never put in more work when smaller blocks are available more cheaply.

Many have suggested that a combination of rising network and storage costs and shrinking coinbase rewards might eventually force miners to adjust block sizes so that they can make more money from transaction fees. When that happens, miners may end up using virtual block sizes to fit the number of transactions they include in a block.
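Once fees matter more than the coinbase subsidy, the question a miner optimizes is which transactions to pack into the available space. A common heuristic is to sort pending transactions by fee per byte and fill the block greedily; Bitcoin Core's block assembly works along these lines, though it also accounts for chains of dependent transactions. The sketch below assumes independent transactions and made-up numbers, and `PendingTx` and `select_by_fee_rate` are hypothetical names.

```python
from typing import List, NamedTuple

class PendingTx(NamedTuple):
    txid: str
    size: int  # bytes
    fee: int   # in the smallest currency unit

def select_by_fee_rate(pool: List[PendingTx], max_block_bytes: int) -> List[PendingTx]:
    """Greedy fill: highest fee per byte first, skipping anything that no longer fits."""
    chosen: List[PendingTx] = []
    used = 0
    for tx in sorted(pool, key=lambda t: t.fee / t.size, reverse=True):
        if used + tx.size <= max_block_bytes:
            chosen.append(tx)
            used += tx.size
    return chosen

pool = [
    PendingTx("a", 400, 2_000),  # 5 per byte
    PendingTx("b", 300, 3_000),  # 10 per byte
    PendingTx("c", 500, 1_000),  # 2 per byte
    PendingTx("d", 200, 1_600),  # 8 per byte
]
block = select_by_fee_rate(pool, max_block_bytes=1_000)
print([t.txid for t in block], sum(t.fee for t in block))  # ['b', 'd', 'a'] 6600
```

Greedy selection is not guaranteed to be optimal (this is a knapsack problem), but it is fast and close to optimal when individual transactions are small relative to the block.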

## Can the blockchain randomly access any block given the block number or hash?

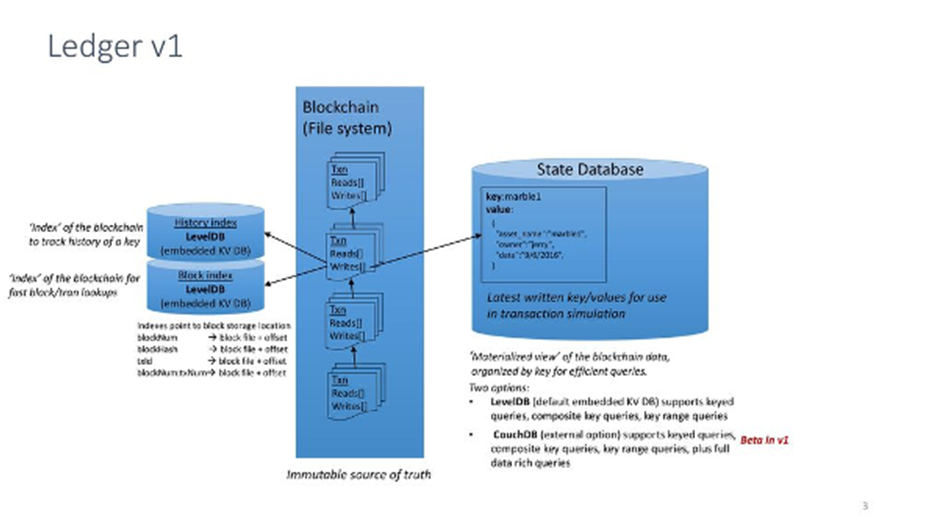

*source: hyperledger*

As far as the normal operation of the system is concerned, this is a moot point, since blocks get buried under newer blocks as the chain grows. But block explorer sites all let you look up a block by its index (height) or its hash.

There are, however, several technical reasons why a block needs to be found quickly:

1. Specific blocks could be invalidated and may need to be taken out

2. Blocks could be reorganized due to forks and joins

3. Certain blocks could be orphaned by the consensus protocol

4. In non-coin based blockchains, adjacent blocks could be merged after reorganization

5. Audit processes need fast access to arbitrary blocks

Most implementations store blocks and index them. Hyperledger, for example, stores blocks separately as flat files and keeps the indexes in LevelDB. Others store the blocks themselves as blobs in databases. Typically the blocks are stored separately from the index so that reorganizations can happen quickly. There are also multiple indices for accessing state changes, key histories…
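A minimal sketch of that separation, assuming an append-only byte buffer standing in for the flat block file and two dicts standing in for the index database (by hash and by height). Each hash entry records only the block's offset and length, so a lookup by height or hash is one index read plus one seek, and a reorganization only rewrites index entries, never the block data. `BlockStore` and its methods are hypothetical, and plain SHA-256 of the raw bytes is used as a toy block hash.

```python
import hashlib
from typing import Dict, Tuple

Location = Tuple[int, int]  # (offset, length) within the block file

class BlockStore:
    def __init__(self) -> None:
        self.block_file = bytearray()           # stand-in for an append-only flat file
        self.by_hash: Dict[str, Location] = {}  # stand-in for an index database
        self.by_height: Dict[int, str] = {}     # height -> block hash on the active chain

    def append(self, height: int, raw_block: bytes) -> str:
        block_hash = hashlib.sha256(raw_block).hexdigest()  # toy block hash
        self.by_hash[block_hash] = (len(self.block_file), len(raw_block))
        self.block_file.extend(raw_block)
        self.by_height[height] = block_hash
        return block_hash

    def get_by_hash(self, block_hash: str) -> bytes:
        offset, length = self.by_hash[block_hash]
        return bytes(self.block_file[offset:offset + length])

    def get_by_height(self, height: int) -> bytes:
        return self.get_by_hash(self.by_height[height])

store = BlockStore()
store.append(0, b"genesis block")
h1 = store.append(1, b"block one (fork A)")
h1b = store.append(1, b"block one (fork B)")  # reorg: height 1 now points at fork B
print(store.get_by_height(1))  # b'block one (fork B)'
print(store.get_by_hash(h1))   # b'block one (fork A)'
```

The orphaned fork A block is still retrievable by hash, which is exactly what block explorers and audit processes rely on.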
