Complex networked applications often require groups of processes to coordinate to provide a particular service. For example, a multistage workflow automation application may spawn multiple processes to work on different parts of a large problem. One master process may wait for the entire group of worker processes to exit before proceeding with the next stage in the workflow. This is such a common paradigm that ACE provides the ACE_Process_Manager class.
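The coordination pattern described above can be sketched with plain POSIX calls. This is a minimal sketch assuming a POSIX platform, not how ACE_Process_Manager is implemented: `run_stage` and its `work` callback are hypothetical names, and each worker runs a function in a forked child rather than a separate program.

```cpp
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

// Hypothetical helper (not an ACE API): spawn n worker processes, each
// running work(i) on its own part of the problem, then block until the
// whole group has exited. Returns how many workers exited with status 0.
std::size_t run_stage(std::size_t n,
                      const std::function<int(std::size_t)> &work) {
  assert(work && "work must be callable");
  std::vector<pid_t> workers;  // Master's record of the group it spawned.
  for (std::size_t i = 0; i < n; ++i) {
    pid_t pid = fork();
    if (pid == 0)
      _exit(work(i));  // Child: do one part, exit without parent cleanup.
    if (pid > 0)
      workers.push_back(pid);  // fork() failures (-1) are simply skipped.
  }
  // Master: wait for every worker before the next workflow stage starts.
  std::size_t succeeded = 0;
  for (pid_t pid : workers) {
    int status = 0;
    if (waitpid(pid, &status, 0) == pid && WIFEXITED(status) &&
        WEXITSTATUS(status) == 0)
      ++succeeded;
  }
  return succeeded;
}
```

The bookkeeping here (a pid list plus a wait loop) is exactly the part a process manager class takes off the application's hands.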

ACE_Process_Manager

Attributes: process_table_ : ACE_Process, current_count_ : size_t

+ open (start_size : size_t) : int
+ close () : int
+ spawn (proc : ACE_Process *, opt : ACE_Process_Options) : pid_t
+ spawn_n (n : size_t, opts : ACE_Process_Options, pids : pid_t []) : int
+ wait (timeout : ACE_Time_Value) : int
+ wait (pid : pid_t, status : ACE_exitcode *) : pid_t
+ instance () : ACE_Process_Manager *

Figure 8.4: The ACE_Process_Manager Class Diagram

Class Capabilities

The ACE_Process_Manager class uses the Wrapper Facade pattern to combine the portability and power of ACE_Process with the ability to manage groups of processes as a unit. This class has the following capabilities:

• It provides internal record keeping to manage and monitor groups of processes that are spawned by the ACE_Process class.

• It allows one process to spawn a group of processes and wait for them to exit before proceeding with its own processing.

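The interface of the ACE_Process_Manager class is shown in Figure 8.4 and its key methods are outlined in the following table:

| Method | Description |
|---|---|
| open() | Initializes an ACE_Process_Manager with room for start_size processes in its internal process table. |
| close() | Releases all resources held by the process manager. |
| spawn() | Spawns a process using the given ACE_Process and ACE_Process_Options and adds it to the group of processes being managed. |
| spawn_n() | Spawns n processes with the same options, adds them to the managed group, and stores their process IDs in the pids array. |
| wait() | Waits for all managed processes to exit, up to the given timeout, or waits for the particular process pid to exit and stores its exit status in status. |
| instance() | A static method that returns a pointer to the ACE_Process_Manager singleton. |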

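An ACE_Process_Manager can be used in two ways:

• As a singleton, accessed via its instance() method.

• By instantiating one or more instances. This capability can be used to support multiple process groups within a process.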
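The record keeping behind these capabilities, and the reason separate instances yield separate process groups, can be sketched with a small POSIX-only class. `ProcessGroup` and its methods are hypothetical; the names only loosely echo Figure 8.4, and this is not ACE's implementation. Each instance waits only on the pids in its own table, so two groups in one process never reap each other's members.

```cpp
#include <algorithm>
#include <cassert>
#include <cstddef>
#include <functional>
#include <vector>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

// Hypothetical ProcessGroup (not ACE's implementation): keeps a table of
// the pids it spawned, loosely echoing process_table_ in Figure 8.4, so
// several independent groups can coexist in one process.
class ProcessGroup {
public:
  using Task = std::function<int()>;

  // Spawn one member running task; returns its pid, or -1 on failure.
  pid_t spawn(const Task &task) {
    assert(task && "task must be callable");
    pid_t pid = fork();
    if (pid == 0)
      _exit(task());                  // Child: run the task and exit.
    if (pid > 0)
      process_table_.push_back(pid);  // Parent: record the new member.
    return pid;
  }

  // Spawn n members running the same task; returns 0 on success, -1 if
  // any fork() failed (members spawned before the failure stay recorded).
  int spawn_n(std::size_t n, const Task &task) {
    for (std::size_t i = 0; i < n; ++i)
      if (spawn(task) == -1)
        return -1;
    return 0;
  }

  // Wait for one member of this group. Stores its exit status in *status
  // (or -1 if a signal killed it). Returns pid, or -1 if pid is not a
  // member or waitpid() fails.
  pid_t wait(pid_t pid, int *status) {
    auto it = std::find(process_table_.begin(), process_table_.end(), pid);
    if (it == process_table_.end())
      return -1;
    int raw = 0;
    if (waitpid(pid, &raw, 0) != pid)
      return -1;
    process_table_.erase(it);
    if (status != nullptr)
      *status = WIFEXITED(raw) ? WEXITSTATUS(raw) : -1;
    return pid;
  }

  // Wait for every member of this group, and only this group.
  int wait() {
    while (!process_table_.empty())
      if (wait(process_table_.front(), nullptr) == -1)
        return -1;
    return 0;
  }

  std::size_t current_count() const { return process_table_.size(); }

private:
  std::vector<pid_t> process_table_;
};
```

For example, a workflow could spawn one stage's workers into one `ProcessGroup`, start an unrelated group in a second instance, and call `wait()` on the first without blocking on the second.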

Example

The example in Section 7.4 illustrated the design and implementation of a reactive logging server that alleviated the drawbacks of a purely iterative server design. Yet another server model for handling client requests is to spawn a new process to handle each client. Process-based concurrency models are often useful when multithreaded solutions are either:

• Not possible, for example, on older UNIX systems that lack efficient thread implementations or that lack threading support altogether, or

• Not desired, for example, due to nonreentrant third-party library restrictions or due to reliability requirements for hardware-supported time and space partitioning.

Section 5.2 describes other pros and cons of implementing servers with multiple processes rather than multiple threads.

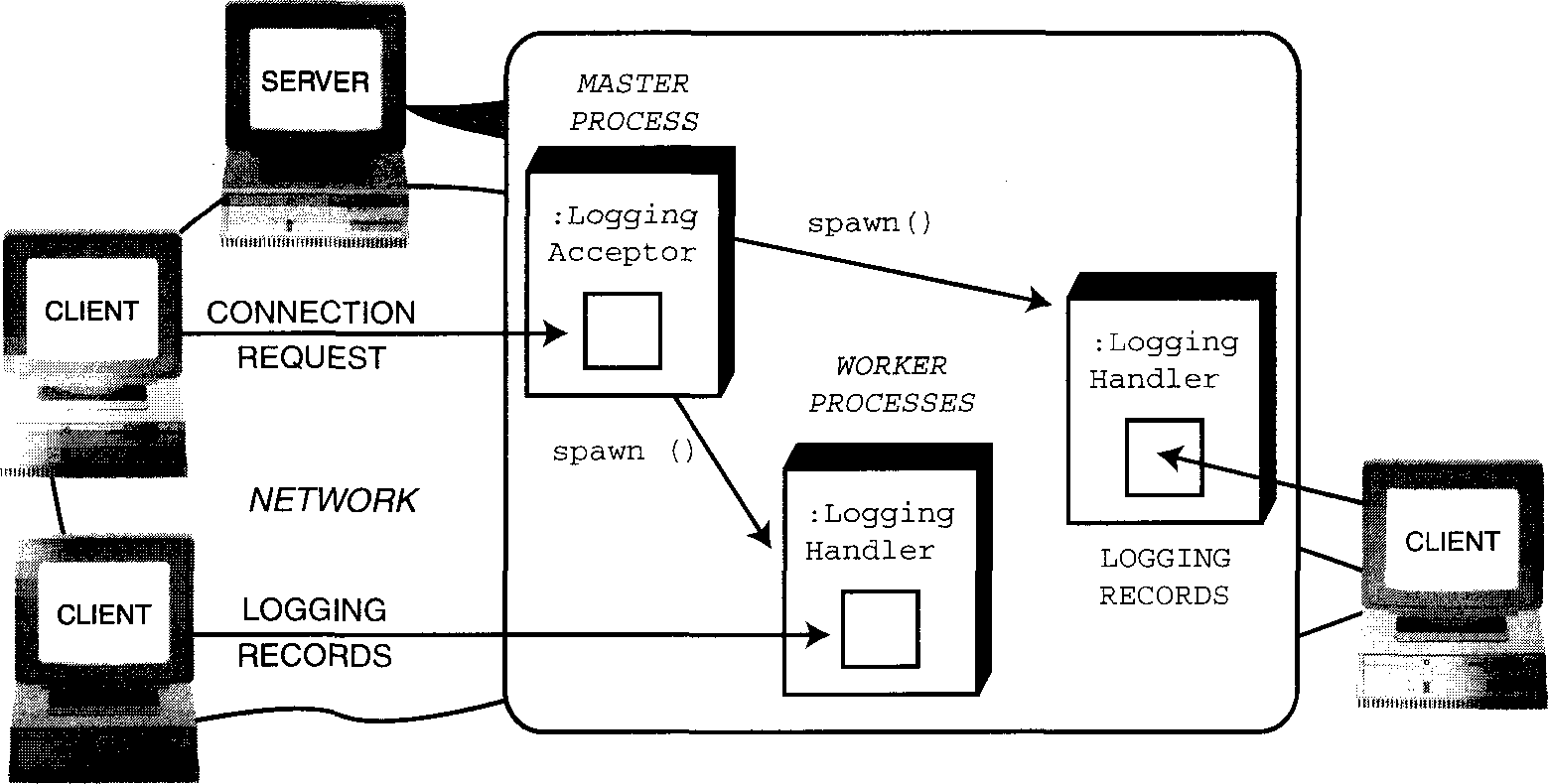

The structure of our multiprocess-based logging server is shown in Figure 8.5. This revision of the logging server uses a process-per-connection concurrency model. It's similar in many ways to the first version in Section 4.4.3 that used an iterative server design. The main difference here is that a master process spawns a new worker process for each accepted connection to the logging service port. The master process then continues to accept new connections. Each worker process handles all logging requests sent by a client across one connection; the process exits when this connection closes.
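The master/worker split just described can be sketched with POSIX fork(). This is a minimal sketch under stated assumptions: `spawn_worker`, `handle_connection`, and `reap_workers` are hypothetical helpers, a "request" is simplified to one newline-terminated line, and the exit-status encoding exists only for illustration. The key step is that the master closes its copy of each connection and the worker closes the listening socket, so each side holds only what it needs.

```cpp
#include <cassert>
#include <cstddef>
#include <sys/socket.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

// Worker body: handle every request one client sends over a single
// connection, returning when the client closes it. Assumption: a request
// is one newline-terminated line; the real logging server would
// demarshal log records instead. The exit status reports how many lines
// were handled (capped at 254), or 255 if read() failed.
static int handle_connection(int conn_fd) {
  char buf[512];
  unsigned lines = 0;
  ssize_t n;
  while ((n = read(conn_fd, buf, sizeof buf)) > 0)
    for (ssize_t i = 0; i < n; ++i)
      if (buf[i] == '\n')
        ++lines;
  close(conn_fd);
  if (n < 0)
    return 255;
  return static_cast<int>(lines < 254 ? lines : 254);
}

// Master: fork one worker per accepted connection. listen_fd is the
// acceptor socket, or -1 when there is none (e.g., with socketpair()).
pid_t spawn_worker(int listen_fd, int conn_fd) {
  assert(conn_fd >= 0);
  pid_t pid = fork();
  if (pid == 0) {
    if (listen_fd >= 0)
      close(listen_fd);  // Workers never accept new connections.
    _exit(handle_connection(conn_fd));
  }
  // Master: drop its copy of the connection (also on fork() failure,
  // which abandons that client) and go back to accepting.
  close(conn_fd);
  return pid;
}

// Master: reap any workers whose connections have closed, without blocking.
std::size_t reap_workers() {
  std::size_t reaped = 0;
  while (waitpid(-1, nullptr, WNOHANG) > 0)
    ++reaped;
  return reaped;
}
```

In a master loop, each socket returned by accept() would be handed to spawn_worker(), with reap_workers() called periodically so exited workers do not linger as zombies.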

A process-per-connection approach has two primary benefits:

1. It "ruggedizes" the logging server against problems where one service instance corrupts others. This could happen, for example, because of internal bugs or because a malicious client finds a way to exploit a buffer overrun vulnerability.

2. It's a straightforward next step to extend the logging server to allow each process to verify or control per-user or per-session security and authentication information. For example, the start of each user-specific logging session could include a user/password exchange. Because each process has its own address space and access privileges, a process-based server, unlike a reactive or multithreaded server, ensures that the user-specific information handled in one process cannot be accidentally (or maliciously!) confused with another user's.
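Both benefits rest on the same property: a worker's memory and its failures stay inside its own process. The following POSIX sketch, with hypothetical names `run_isolated`, `login_as_42`, and `buggy_session`, shows a worker changing per-session state that the master never sees, and a worker crashing (simulated with std::abort()) while the master carries on and merely observes the terminating signal.

```cpp
#include <cassert>
#include <csignal>
#include <cstdlib>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

// Per-session state, e.g. which user authenticated on this connection.
// Hypothetical: a real server would keep credentials, not just an id.
static int session_user_id = 0;

// How an isolated worker ended.
struct WorkerResult {
  bool crashed;  // true if a signal terminated the worker
  int code;      // exit status, or the terminating signal number
};

// Run body in a separate process, so its memory writes and its crashes
// stay in that process, and report how it ended.
WorkerResult run_isolated(void (*body)()) {
  assert(body != nullptr);
  pid_t pid = fork();
  if (pid == 0) {
    body();
    _exit(0);
  }
  int status = 0;
  if (pid < 0 || waitpid(pid, &status, 0) != pid)
    return {false, -1};
  if (WIFSIGNALED(status))
    return {true, WTERMSIG(status)};
  return {false, WEXITSTATUS(status)};
}

// A session that authenticates as user 42 in its own address space.
void login_as_42() { session_user_id = 42; }

// A session that dies, standing in for a bug or an exploited overrun.
void buggy_session() { std::abort(); }
```

After run_isolated(login_as_42), the master's session_user_id is still 0, and run_isolated(buggy_session) reports a crash by SIGABRT while the master keeps running.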

The process-per-connection server shown below works correctly on both POSIX and Win32 platforms. Given the platform differences explained in Section 8.2 and Sidebar 16 on page 164, this is an impressive achievement. Due to the clean separation of concerns in our example logging server's design and ACE's intelligent use of wrapper facades, the differences required for the server code are minimal and well contained. In particular, there's a conspicuous lack of conditionally compiled application code.

Due to differing process creation models, however, we must first decide how to run the worker processes on POSIX. Win32 requires every new process to run a newly loaded program image, whereas POSIX does not. On Win32, we keep all of the server logic localized in one program and execute this program image in both the worker and master processes using different command-line arguments. On POSIX platforms, we can either:

• Use the same model as Win32 and run a new program image, or

• Enhance performance by not invoking an exec*() function call after fork() returns.
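The two POSIX choices can be contrasted in a short sketch. The helper names are hypothetical and the sketch assumes a POSIX system. In the logging server, the exec*() path would re-run the server's own executable with a worker argument; the helper itself accepts any program path, such as /bin/sh.

```cpp
#include <cassert>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

// Wait for pid; return its exit status, or -1 if it did not exit normally.
static int exit_status_of(pid_t pid) {
  int status = 0;
  if (pid < 0 || waitpid(pid, &status, 0) != pid || !WIFEXITED(status))
    return -1;
  return WEXITSTATUS(status);
}

// POSIX-only option: fork() without exec*(). The child keeps the master's
// program image and calls the worker logic directly, skipping the cost of
// loading a new image.
int run_worker_in_place(int (*worker)()) {
  assert(worker != nullptr);
  pid_t pid = fork();
  if (pid == 0)
    _exit(worker());
  return exit_status_of(pid);
}

// Win32-style option: the child runs a program image named by path, and
// argv tells it which role to play. In the logging server, path would be
// the server's own executable plus a worker flag (an assumption here).
int run_worker_new_image(const char *path, char *const argv[]) {
  assert(path != nullptr && argv != nullptr);
  pid_t pid = fork();
  if (pid == 0) {
    execv(path, argv);
    _exit(127);  // Reached only if execv() failed.
  }
  return exit_status_of(pid);
}
```

Both helpers block until the worker exits, which keeps the comparison simple; a real master would keep accepting connections and reap workers later.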

source: C++ programming network book
