## Context

Please read the [last post](/@felixxx/a-hacky-guide-to-hive-part-221-blocks) for context.

## SQLAlchemy

This is no Python guide, this is no SQL guide.

I just want to get the job done, so I use [SQLAlchemy](https://www.sqlalchemy.org/).

You could build the following better or faster, in any language you want, without SQLAlchemy, for a storage other than SQLite... The main thing is: you'll probably need different data.

This is just a way to get to a working demo quickly.

### db.py

```
from typing import List
import datetime
from sqlalchemy import String, ForeignKey, DateTime, create_engine
from sqlalchemy.sql import func
from sqlalchemy.orm import DeclarativeBase, Mapped, mapped_column, relationship, Session

class Base(DeclarativeBase):
    pass

class Block(Base):
    __tablename__ = "blocks"
    num: Mapped[int] = mapped_column(primary_key=True)  # Hive block number
    timestamp: Mapped[str] = mapped_column(String(19))  # block timestamp as sent by the node
    sql_timestamp: Mapped[datetime.datetime] = mapped_column(DateTime(timezone=True), server_default=func.now())  # time of insert
    yos: Mapped[List["Yo"]] = relationship(back_populates="block", cascade="all, delete-orphan")

class Yo(Base):
    __tablename__ = "yos"
    id: Mapped[int] = mapped_column(primary_key=True, autoincrement=True)
    author: Mapped[str] = mapped_column(String(16))
    tx_id: Mapped[str] = mapped_column(String(40))
    block_num: Mapped[int] = mapped_column(ForeignKey("blocks.num"))  # links a Yo to its Block
    block: Mapped[Block] = relationship(back_populates="yos")
```

This is no SQLAlchemy guide.

It is for the most part just the example from the [SQLAlchemy tutorial](https://docs.sqlalchemy.org/en/20/orm/quickstart.html), adapted to my data.

It allows me to map SQL tables _blocks_ and _yos_ as classes _Block_ and _Yo_.

I am not even sure if I am doing it right, but for the demo it doesn't matter.

```
def make_db(db):
    Base.metadata.create_all(create_engine(db))  # creates only the tables that are missing

def set_blocks(blocks, session: Session):
    session.add_all(blocks)  # the caller commits

def get_last_block(session: Session):
    return session.query(Block.num).order_by(Block.num.desc()).limit(1).first()[0]  # fails on an empty table
```

The main goal of db.py is to be able to:

- make a DB

- add blocks to DB table (set)

- return blocks from DB (get)

For now, I only need the number of the last block, so I built a specialized function to do just that.

```
def make_yo_blocks(start, blocks):
    num = start  # block number of the first entry in blocks
    yo_blocks = []
    for block in blocks:
        yos = []
        for tx_i, transaction in enumerate(block['transactions']):
            for operation in transaction['operations']:
                if operation['type'] == 'custom_json_operation':
                    if operation['value']['id'] == 'YO':
                        yo = Yo(author=operation['value']['required_posting_auths'][0], tx_id=block['transaction_ids'][tx_i])
                        yos.append(yo)
        yo_block = Block(num=num, timestamp=block['timestamp'], yos=yos)
        yo_blocks.append(yo_block)
        num += 1  # blocks are expected in consecutive order
    return yo_blocks
```

The above is the last piece.

It does a lot of things for a single function.

It takes the raw _blocks_ data, as returned by the node, and converts it to _Blocks_ (and _Yos_).

It can break in a lot of places, but that's exactly what it should do for now.
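To see the filtering in isolation, here is a dependency-free sketch that applies the same checks to a single block and returns plain tuples instead of _Yo_ objects. The helper name `extract_yos` is hypothetical, and `sample_block` is a hand-made dictionary that only contains the keys the function reads; a real node response has many more fields.

```python
def extract_yos(block):
    """Return (author, tx_id) pairs for every custom_json operation with id 'YO'."""
    found = []
    for tx_i, transaction in enumerate(block["transactions"]):
        for operation in transaction["operations"]:
            if operation["type"] != "custom_json_operation":
                continue
            if operation["value"]["id"] != "YO":
                continue
            author = operation["value"]["required_posting_auths"][0]
            # transaction_ids is a parallel list: same index as the transaction.
            found.append((author, block["transaction_ids"][tx_i]))
    return found


# Made-up sample data (assumption): one vote, one YO custom_json.
sample_block = {
    "timestamp": "2024-09-18T12:55:36",
    "transaction_ids": ["aaaa", "bbbb"],
    "transactions": [
        {"operations": [{"type": "vote_operation", "value": {"voter": "alice"}}]},
        {"operations": [{"type": "custom_json_operation",
                         "value": {"id": "YO", "required_posting_auths": ["felixxx"], "json": "{}"}}]},
    ],
}

yos = extract_yos(sample_block)
print(yos)
```

A YO that was signed with an active key instead of a posting key would have an empty `required_posting_auths` list and raise an `IndexError` here, which is one of the places where the function can break.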

#### Example

```
db_file = "sqlite:///yo.db"
init_block = Block(num=89040473, timestamp='2024-09-18T12:55:36', yos=[Yo(author='felixxx', tx_id='eb025cf797ee5bc81d7399282268079cc29cc66d')])

def init():
    make_db(db_file)
    with Session(create_engine(db_file, echo=True)) as session:
        set_blocks([init_block], session)
        session.commit()
```

If you run this example `init()` twice, you will get an error on the second run.

This demonstrates that SQL is already doing some of the work: because _num_ is the primary key, the database refuses to insert a block with the same _num_ twice.

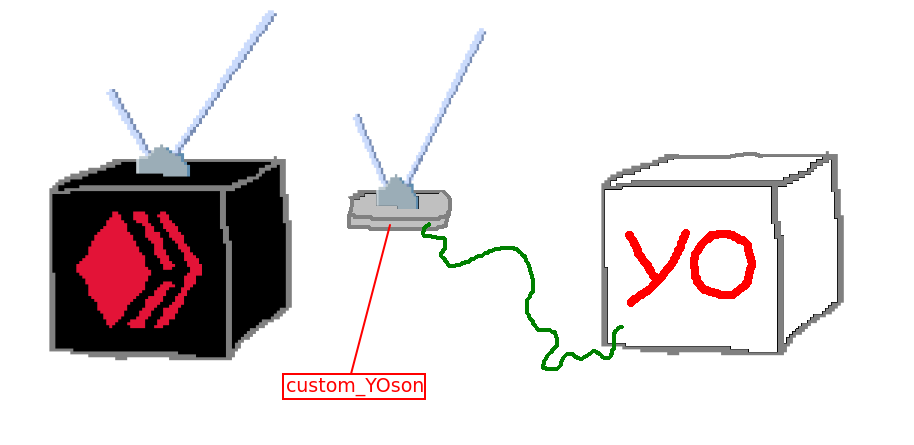
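The same behaviour can be reproduced without SQLAlchemy. The sketch below uses Python's built-in `sqlite3` module (a swap made for illustration, not what db.py does) and a simplified `blocks` table in memory, to show that the constraint lives in the database itself.

```python
import sqlite3

# In-memory database with a simplified blocks table (assumption for this sketch).
conn = sqlite3.connect(":memory:")
conn.execute("CREATE TABLE blocks (num INTEGER PRIMARY KEY, timestamp VARCHAR(19))")

insert = "INSERT INTO blocks (num, timestamp) VALUES (?, ?)"
block = (89040473, "2024-09-18T12:55:36")

conn.execute(insert, block)  # first insert succeeds

try:
    conn.execute(insert, block)  # same primary key again
    duplicate_rejected = False
except sqlite3.IntegrityError as error:
    duplicate_rejected = True
    print("rejected:", error)

row_count = conn.execute("SELECT COUNT(*) FROM blocks").fetchone()[0]
conn.close()
```

With SQLAlchemy in between, the same failure surfaces as `sqlalchemy.exc.IntegrityError`.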

## custom_YOson

In the final process, the API and DB handling meet.

### api.py

```
import requests

def get_block_range(start, count, url):
    data = '{"jsonrpc":"2.0", "method":"block_api.get_block_range","params":{"starting_block_num":'+str(start)+',"count": '+str(count)+'},"id":1}'
    return requests.post(url, data)  # returns the raw response, not parsed blocks
```

That's the Hive API from the last post. It's really that short.

I modified it just a little to move the data conversion out to the caller; instead of a list of blocks, it now returns the raw response.
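The request body is plain JSON, so instead of concatenating strings it could also be built from a dictionary. This is just an alternative sketch; the helper name `build_block_range_payload` is hypothetical, and the method name and parameters are copied from the function above.

```python
import json


def build_block_range_payload(start, count):
    """Build the JSON-RPC body for block_api.get_block_range."""
    return json.dumps({
        "jsonrpc": "2.0",
        "method": "block_api.get_block_range",
        "params": {"starting_block_num": start, "count": count},
        "id": 1,
    })


payload = build_block_range_payload(89040474, 10)
decoded = json.loads(payload)  # round trip, to check that the body is valid JSON
print(payload)
```

The resulting string can be passed to `requests.post(url, data=payload)` exactly like the hand-written one.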

### custom_YOson.py

```
import db, api

url = 'https://api.hive.blog'
db_file = "sqlite:///yo.db"
count = 10
innit_block = db.Block(num=89040473, timestamp='2024-09-18T12:55:36', yos=[db.Yo(author='felixxx', tx_id='eb025cf797ee5bc81d7399282268079cc29cc66d')])

def innit():
    db.make_db(db_file)
    with db.Session(db.create_engine(db_file)) as session:
        db.set_blocks([innit_block], session)
        session.commit()

def tick():
    with db.Session(db.create_engine(db_file)) as session:
        last = db.get_last_block(session)
    response = api.get_block_range(last + 1, count, url)
    blocks = response.json()['result']['blocks']
    with db.Session(db.create_engine(db_file)) as session:
        if last == db.get_last_block(session): # cheap way to make this thread-safe
            db.set_blocks(db.make_yo_blocks(last + 1, blocks), session)
            session.commit()
```

The main procedure that should happen during every tick:

- with open db session:
  - get last block's num from db
- query Hive node for next (count = 10) blocks
- convert Hive node response to dictionary, select key 'blocks'
- with open db session:
  - get last block's num again, if same:
    - convert blocks to Blocks
    - store Blocks

### Process

custom_YOson is only 75 lines long:

- api.py (5)

- db.py (48)

- custom_YOson.py (22)

You'd still have to wrap this in a loop, but the core procedure works for stream and resync.

My main goal here was to use functions in tick() that each represent one step of the procedure.
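Wrapping the procedure in a loop could look roughly like this. It is only a sketch: the `run` helper and its parameters are hypothetical, and the 3 second pause is an assumption based on Hive's block interval of about 3 seconds. The `tick` argument stands in for the `tick()` function above; here it is replaced by a dummy so the example runs without a node or a database.

```python
import time


def run(tick, interval=3.0, max_ticks=None, sleep=time.sleep):
    """Call tick() repeatedly, pausing `interval` seconds between calls.

    max_ticks=None means "run forever"; a number is handy for testing.
    """
    done = 0
    while max_ticks is None or done < max_ticks:
        tick()
        done += 1
        # No pause after the final tick.
        if max_ticks is None or done < max_ticks:
            sleep(interval)
    return done


# Dummy tick that only records that it was called.
calls = []
ticks_run = run(lambda: calls.append("tick"), interval=0.0, max_ticks=3)
print(ticks_run, calls)
```

During a resync you would probably skip the pause while the database is still far behind the chain, and only wait once a tick returns fewer blocks than requested.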

### Performance

| Blocks per request | Response size | api.hive.blog | YOson | Total |
|---|---|---|---|---|
| 1 | 26 KB | 0.693 s | 0.020 s | 0.714 s |
| 10 | 168 KB | 0.976 s | 0.017 s | 0.993 s |
| 100 | 1919 KB | 7.910 s | 0.028 s | 7.939 s |

I did 3 test runs, and one thing is clear: the node's response time is the bottleneck.

I could optimize my code or try a different language and database, but I could only shave off milliseconds.

Accessing a node via localhost would probably be substantially faster, but even then: to improve this there probably isn't much you can do in Python...
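If you want to take similar measurements yourself, one simple way is to wrap each step in a timer. This is a generic sketch and not necessarily how the numbers above were taken; the helper name `timed` is hypothetical, and `sum(range(1000))` merely stands in for a real step such as the API call or the conversion.

```python
import time


def timed(func, *args, **kwargs):
    """Run func and return (result, elapsed seconds)."""
    start = time.perf_counter()
    result = func(*args, **kwargs)
    return result, time.perf_counter() - start


# Stand-in workload; replace with e.g. api.get_block_range(...) in practice.
result, seconds = timed(sum, range(1000))
print(f"result={result}, took {seconds:.6f} s")
```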

## Conclusion

Hive's state changes. The only way to observe state changes is querying a node.

In many ways, Hive is like a black box.

The goal was to build a white box that holds healthy data (yo.db).

How to best sanitize, store and handle the data depends on the application.

You could build something like HiveSQL, hive-engine, a voting service or a game with a yo.db as engine, like above.

Next post I'll cover error handling and deployment and build an application.

Had I understood 8 years ago, when I first found this chain, how to build stuff like above... I could have made a lot of money. A lot of it isn't even Hive specific...

**Please** test & comment!

| author | felixxx |

|---|---|

| permlink | a-hacky-guide-to-hive-part-222-customyoson |

| category | dev |

| json_metadata | "{"app":"peakd/2024.8.7","description":"finishing first demo of custom_YOson","format":"markdown","image":["https://files.peakd.com/file/peakd-hive/felixxx/23wMQ8bUMubWa5kZfGGCiibyJtDVy9QXdN6ze5v4HL3nRaVrmio9jjipahrVJf8sArgis.png"],"tags":["dev","hive-dev","hivedev","hive"],"users":["felixxx"]}" |

| created | 2024-09-22 09:51:45 |

| last_update | 2024-09-22 14:01:39 |

| depth | 0 |

| children | 5 |

| last_payout | 2024-09-29 09:51:45 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 16.826 HBD |

| curator_payout_value | 16.801 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 7,288 |

| author_reputation | 217,799,078,443,713 |

| root_title | "A Hacky Guide to Hive (part 2.2.2: custom_YOson)" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 137,312,539 |

| net_rshares | 106,605,795,154,346 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| leprechaun | 0 | 6,296,503,750 | 32.5% | ||

| juanmiguelsalas | 0 | 7,519,594,812 | 10% | ||

| kenny-crane | 0 | 747,662,179,319 | 50% | ||

| good-karma | 0 | 12,457,528,619 | 1% | ||

| jeffjagoe | 0 | 802,726,035,673 | 100% | ||

| ace108 | 0 | 1,114,170,437,840 | 25% | ||

| shaka | 0 | 7,620,569,704,855 | 100% | ||

| avellana | 0 | 21,740,362,835 | 19.6% | ||

| miketr | 0 | 197,155,123,595 | 60% | ||

| erikkartmen | 0 | 4,129,057,948 | 100% | ||

| themonetaryfew | 0 | 449,158,464,185 | 100% | ||

| pollux.one | 0 | 690,153,860,905 | 80% | ||

| darkflame | 0 | 267,574,418,814 | 100% | ||

| uwelang | 0 | 1,125,902,358,417 | 50% | ||

| phusionphil | 0 | 14,905,472,120 | 95% | ||

| ssekulji | 0 | 60,222,960,405 | 100% | ||

| bleujay | 0 | 2,252,386,633,085 | 100% | ||

| freiheit50 | 0 | 684,317,150 | 99% | ||

| esteemapp | 0 | 2,825,631,179 | 1% | ||

| alexvan | 0 | 96,431,280,130 | 100% | ||

| darth-azrael | 0 | 29,537,996,940 | 9% | ||

| darth-cryptic | 0 | 5,210,339,088 | 9% | ||

| thereikiforest | 0 | 1,126,030,334 | 10% | ||

| danielsaori | 0 | 540,140,021,347 | 100% | ||

| freebornsociety | 0 | 5,625,319,661 | 10% | ||

| thatgermandude | 0 | 216,624,151,988 | 100% | ||

| andyjaypowell | 0 | 901,104,826,428 | 100% | ||

| grider123 | 0 | 3,183,321,934 | 9% | ||

| morph | 0 | 6,351,442,393 | 50% | ||

| kilianmiguel | 0 | 2,172,614,695 | 10% | ||

| tonyz | 0 | 769,957,545,589 | 70% | ||

| leontr | 0 | 2,533,639,687 | 40% | ||

| alphacore | 0 | 5,432,503,909 | 5.39% | ||

| rival | 0 | 10,724,274,091 | 100% | ||

| roxane | 0 | 919,396,070 | 50% | ||

| joeyarnoldvn | 0 | 461,933,149 | 1.47% | ||

| bluemist | 0 | 24,223,984,929 | 10% | ||

| lenasveganliving | 0 | 914,017,695 | 5% | ||

| appreciator | 0 | 36,960,354,946,715 | 10% | ||

| shanibeer | 0 | 605,883,790,018 | 35% | ||

| etblink | 0 | 318,871,204,611 | 50% | ||

| musicgeek | 0 | 999,118,842 | 50% | ||

| marketinggeek | 0 | 719,388,206 | 100% | ||

| sportschain | 0 | 943,852,465 | 50% | ||

| cconn | 0 | 12,518,866,331 | 100% | ||

| sorin.cristescu | 0 | 56,555,400,426 | 10% | ||

| deathwing | 0 | 2,016,697,935,302 | 50% | ||

| revisesociology | 0 | 1,282,114,324,134 | 50% | ||

| yangyanje | 0 | 5,515,192,649 | 25% | ||

| esteem.app | 0 | 335,765,163 | 1% | ||

| der-prophet | 0 | 41,307,151,215 | 16.5% | ||

| dkid14 | 0 | 214,645,125,015 | 100% | ||

| sunsea | 0 | 12,773,505,854 | 5% | ||

| emrebeyler | 0 | 3,492,148,248,137 | 35% | ||

| docmarenkristina | 0 | 790,897,589 | 50% | ||

| mytechtrail | 0 | 18,184,944,739 | 15% | ||

| fourfourfun | 0 | 4,270,457,791 | 12.5% | ||

| abeba | 0 | 988,913,938 | 12.25% | ||

| itwithsm | 0 | 480,823,655 | 10% | ||

| piotrgrafik | 0 | 1,151,338,601,752 | 80% | ||

| investyourvote | 0 | 40,733,068,426 | 60% | ||

| kkarenmp | 0 | 5,148,440,496 | 5% | ||

| franciscomarval | 0 | 4,975,479,631 | 24.5% | ||

| minerspost | 0 | 2,221,459,063 | 50% | ||

| iddaa | 0 | 1,232,997,857 | 25% | ||

| sudefteri | 0 | 38,356,325,338 | 50% | ||

| tomhall | 0 | 1,280,631,866,499 | 100% | ||

| mindscapephotos | 0 | 912,983,091 | 100% | ||

| bhattg | 0 | 19,728,672,132 | 3% | ||

| bertrayo | 0 | 6,053,097,527 | 5% | ||

| movement19 | 0 | 1,745,358,570 | 6.25% | ||

| akifane | 0 | 1,443,800,603 | 50% | ||

| jagoe | 0 | 112,807,821,678 | 100% | ||

| siphon | 0 | 83,448,051,171 | 50% | ||

| wildarms65 | 0 | 8,568,077,321 | 100% | ||

| wilsonblue5 | 0 | 536,100,182 | 50% | ||

| backinblackdevil | 0 | 41,314,282,391 | 100% | ||

| oadissin | 0 | 2,273,277,911 | 0.5% | ||

| satren | 0 | 42,730,164,437 | 30% | ||

| ravenmus1c | 0 | 10,839,158,864 | 0.5% | ||

| rivalzzz | 0 | 96,449,898,709 | 100% | ||

| inciter | 0 | 6,965,319,022 | 10% | ||

| memepress | 0 | 1,617,588,408 | 47.5% | ||

| solominer | 0 | 2,458,177,842,812 | 25% | ||

| fw206 | 0 | 2,579,651,865,158 | 30% | ||

| slobberchops | 0 | 6,974,005,779,948 | 100% | ||

| steemulant | 0 | 273,584,714 | 14.23% | ||

| radiosteemit | 0 | 4,506,660,589 | 24.5% | ||

| velazquezboy | 0 | 6,308,992,116 | 100% | ||

| dalz | 0 | 1,902,388,580,155 | 100% | ||

| smartvote | 0 | 159,369,412,999 | 6.2% | ||

| nancybmp | 0 | 505,628,248 | 11.02% | ||

| artmedina | 0 | 2,945,644,776 | 12.25% | ||

| altonos | 0 | 3,316,320,011 | 100% | ||

| lagitana | 0 | 1,078,404,916 | 19.6% | ||

| berthold | 0 | 10,371,806,702 | 40% | ||

| janettyanez | 0 | 1,388,805,380 | 24.5% | ||

| captain.future | 0 | 5,044,882,394 | 100% | ||

| kizumo | 0 | 209,536,430,724 | 100% | ||

| kiel91 | 0 | 559,765,565,798 | 100% | ||

| retrodroid | 0 | 3,043,063,071 | 9% | ||

| leosoph | 0 | 193,196,893,149 | 100% | ||

| sophieandhenrik | 0 | 7,776,558,202 | 50% | ||

| alenox | 0 | 576,933,878 | 5% | ||

| blue.rabbit | 0 | 230,185,613,635 | 100% | ||

| steemvpn | 0 | 37,394,985,384 | 95% | ||

| borjan | 0 | 2,238,881,421,820 | 100% | ||

| ph1102 | 0 | 1,816,064,563,623 | 60% | ||

| kittykate | 0 | 113,259,155,446 | 100% | ||

| investinthefutur | 0 | 88,708,423,599 | 60% | ||

| mind.force | 0 | 6,942,136,525 | 25% | ||

| whangster79 | 0 | 3,960,565,142 | 25% | ||

| dpoll.witness | 0 | 877,065,900 | 35% | ||

| sandymeyer | 0 | 37,123,370,870 | 12.5% | ||

| tobago | 0 | 558,785,244 | 35% | ||

| an-sich-wachsen | 0 | 4,442,149,518 | 33% | ||

| lotto-de | 0 | 89,472,382,684 | 60% | ||

| rcaine | 0 | 8,199,356,511 | 6% | ||

| pavelsku | 0 | 16,924,575,370 | 12.25% | ||

| iceledy | 0 | 822,139,054 | 100% | ||

| garlet | 0 | 33,968,690,354 | 50% | ||

| ugochill | 0 | 44,258,286,517 | 100% | ||

| nerdvana | 0 | 472,486,376 | 5% | ||

| danielhuhservice | 0 | 99,281,210,357 | 33% | ||

| hive-127039 | 0 | 520,082,207 | 25% | ||

| shinoxl | 0 | 4,242,278,092 | 100% | ||

| zelegations | 0 | 13,925,121,233 | 95% | ||

| radiohive | 0 | 6,710,471,095 | 24.5% | ||

| tht | 0 | 36,328,646,942 | 100% | ||

| janaliana | 0 | 671,976,197 | 12.5% | ||

| timhorton | 0 | 13,506,966,781 | 95% | ||

| cerberus-dji | 0 | 3,114,994,187 | 95% | ||

| hivelist | 0 | 9,894,185,011 | 3% | ||

| tht1 | 0 | 14,256,792,913 | 100% | ||

| ecency | 0 | 448,292,280,308 | 1% | ||

| mangowambo | 0 | 296,791,485 | 100% | ||

| kvfm | 0 | 522,035,043 | 12.25% | ||

| noelyss | 0 | 2,671,109,724 | 5% | ||

| hivecannabis | 0 | 11,418,129,429 | 95% | ||

| lucianav | 0 | 2,160,792,225 | 5% | ||

| laradio | 0 | 1,160,141,581 | 24.5% | ||

| gabilan55 | 0 | 665,520,751 | 5% | ||

| ecency.stats | 0 | 377,066,849 | 1% | ||

| herz-ass | 0 | 92,919,818,410 | 100% | ||

| recoveryinc | 0 | 8,217,622,176 | 12.5% | ||

| liz.writes | 0 | 1,060,059,566 | 50% | ||

| dying | 0 | 917,057,302 | 25% | ||

| hive-vpn | 0 | 2,802,776,192 | 95% | ||

| cleydimar2000 | 0 | 2,473,081,648 | 12.25% | ||

| noalys | 0 | 2,339,800,902 | 5% | ||

| radiolovers | 0 | 5,415,907,588 | 24.5% | ||

| rima11 | 0 | 53,082,203,935 | 2% | ||

| trcommunity | 0 | 0 | 50% | ||

| alberto0607 | 0 | 10,708,153,545 | 24.5% | ||

| kattycrochet | 0 | 8,059,606,858 | 5% | ||

| bea23 | 0 | 5,703,999,740 | 24.5% | ||

| ciudadcreativa | 0 | 957,455,803 | 19.6% | ||

| hykss.leo | 0 | 102,139,382,112 | 10% | ||

| kenechukwu97 | 0 | 297,215,773,038 | 100% | ||

| samrisso | 0 | 8,845,591,203 | 12.5% | ||

| trostparadox | 0 | 4,570,791,548,088 | 100% | ||

| coinomite | 0 | 1,160,306,537 | 100% | ||

| xyba | 0 | 49,002,635,527 | 100% | ||

| vaipraonde | 0 | 32,116,346,323 | 100% | ||

| tomtothetom | 0 | 3,635,657,402 | 25% | ||

| princeofbeyhive | 0 | 1,410,180,212 | 50% | ||

| jane1289 | 0 | 3,947,478,700 | 2% | ||

| mein-senf-dazu | 0 | 216,037,117,204 | 63% | ||

| power-kappe | 0 | 669,895,254 | 10% | ||

| fotomaglys | 0 | 4,065,780,887 | 5% | ||

| seryi13 | 0 | 1,230,644,795 | 7% | ||

| lxsxl | 0 | 30,260,204,650 | 50% | ||

| photolovers1 | 0 | 1,884,724,915 | 3% | ||

| meesterbrain | 0 | 582,750,317 | 32.5% | ||

| proymet | 0 | 1,630,953,640 | 24.5% | ||

| t-nil | 0 | 1,779,646,551 | 30% | ||

| aprasad2325 | 0 | 1,816,206,928 | 5% | ||

| yoieuqudniram | 0 | 1,362,255,299 | 5% | ||

| dungeondog | 0 | 67,879,913,201 | 100% | ||

| bilgin70 | 0 | 23,146,982,492 | 25% | ||

| ronymaffi | 0 | 3,292,448,552 | 24.5% | ||

| acantoni | 0 | 3,632,893,919 | 12.5% | ||

| marsupia | 0 | 890,609,342 | 25% | ||

| malhy | 0 | 2,066,167,130 | 5% | ||

| eolianpariah2 | 0 | 1,847,322,238 | 0.5% | ||

| arc7icwolf | 0 | 173,190,002,459 | 100% | ||

| relf87 | 0 | 78,944,247,434 | 100% | ||

| susurrodmisterio | 0 | 923,752,240 | 12.25% | ||

| wellingt556 | 0 | 43,106,776,014 | 100% | ||

| adventkalender | 0 | 2,397,401,832 | 50% | ||

| heteroclite | 0 | 15,375,152,943 | 25% | ||

| patchwork | 0 | 1,765,895,049 | 31.5% | ||

| palomap3 | 0 | 166,745,907,591 | 49% | ||

| us3incanada | 0 | 1,665,210,763 | 100% | ||

| dusunenkalpp | 0 | 25,623,402,301 | 50% | ||

| doobetterhive | 0 | 4,388,930,074 | 100% | ||

| ipexito | 0 | 877,287,912 | 40% | ||

| visionarystudios | 0 | 8,998,820,100 | 100% | ||

| visualblock | 0 | 71,130,612,408 | 24.5% | ||

| castri-ja | 0 | 479,756,172 | 2.5% | ||

| mukadder | 0 | 80,146,835,798 | 35% | ||

| juansitosaiyayin | 0 | 1,753,065,011 | 100% | ||

| incublus | 0 | 419,029,684,301 | 50% | ||

| ezgicop | 0 | 6,879,249,106 | 50% | ||

| pinkchic | 0 | 1,150,337,390 | 2.5% | ||

| slicense | 0 | 1,944,553,071 | 60% | ||

| megstarbies | 0 | 1,878,341,618 | 100% | ||

| awildovasquez | 0 | 37,752,574,607 | 100% | ||

| duskobgd | 0 | 90,731,725,433 | 100% | ||

| hive-195880 | 0 | 1,085,454,234 | 25% | ||

| naters | 0 | 477,734,916 | 100% | ||

| hive-coding | 0 | 758,646,190 | 63% | ||

| lu1sa | 0 | 120,052,721,922 | 100% | ||

| bemier | 0 | 79,293,244,662 | 100% | ||

| chinay04 | 0 | 52,394,033,101 | 100% | ||

| hivegadgets | 0 | 1,346,100,622 | 31.5% | ||

| e-sport-gamer | 0 | 2,411,032,618 | 33% | ||

| tahastories1 | 0 | 478,112,661 | 5% | ||

| les90 | 0 | 1,048,959,349 | 5% | ||

| e-sport-girly | 0 | 1,923,027,221 | 33% | ||

| hivetycoon | 0 | 994,789,899 | 63% | ||

| foodiefrens | 0 | 473,260,267 | 100% | ||

| empo.voter | 0 | 12,877,263,515,967 | 50% | ||

| jkatrina | 0 | 4,298,720,124 | 50% | ||

| jahanzaibanjum | 0 | 505,957,470 | 10% | ||

| bellscoin | 0 | 1,645,389,031 | 100% | ||

| learn2code | 0 | 1,139,841,479 | 50% | ||

| lolz.byte | 0 | 0 | 100% | ||

| hive-188753 | 0 | 1,227,944,201 | 50% | ||

| hive-world-champ | 0 | 35,248,755,505 | 100% |

Congratulations on your level of mastery. Hats off!

| author | sorin.cristescu |

|---|---|

| permlink | re-felixxx-2024922t153915752z |

| category | dev |

| json_metadata | {"content_type":"general","type":"comment","tags":["dev","hive-dev","hivedev","hive"],"app":"ecency/3.1.6-mobile","format":"markdown+html"} |

| created | 2024-09-22 13:39:15 |

| last_update | 2024-09-22 13:39:15 |

| depth | 1 |

| children | 3 |

| last_payout | 2024-09-29 13:39:15 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 54 |

| author_reputation | 255,754,000,681,122 |

| root_title | "A Hacky Guide to Hive (part 2.2.2: custom_YOson)" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 137,316,985 |

| net_rshares | 0 |

Thanks! Certainly no master. I have advanced a level above writing long scripts. Still haven't deployed anything cool.

| author | felixxx |

|---|---|

| permlink | re-sorincristescu-sk7xfj |

| category | dev |

| json_metadata | {"tags":"dev"} |

| created | 2024-09-22 14:26:12 |

| last_update | 2024-09-22 14:26:27 |

| depth | 2 |

| children | 2 |

| last_payout | 2024-09-29 14:26:12 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.023 HBD |

| curator_payout_value | 0.023 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 118 |

| author_reputation | 217,799,078,443,713 |

| root_title | "A Hacky Guide to Hive (part 2.2.2: custom_YOson)" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 137,317,657 |

| net_rshares | 150,754,688,046 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| sorin.cristescu | 0 | 150,754,688,046 | 30% |

Have a look at what we are trying to do with OffChain Luxembourg (@offchain-lux) and let me know if you are interested in collaborating. The coolest things come from collaboration IMO

| author | sorin.cristescu |

|---|---|

| permlink | re-felixxx-sk7xii |

| category | dev |

| json_metadata | {"tags":["dev"],"app":"peakd/2024.8.7"} |

| created | 2024-09-22 14:27:54 |

| last_update | 2024-09-22 14:27:54 |

| depth | 3 |

| children | 1 |

| last_payout | 2024-09-29 14:27:54 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.026 HBD |

| curator_payout_value | 0.026 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 184 |

| author_reputation | 255,754,000,681,122 |

| root_title | "A Hacky Guide to Hive (part 2.2.2: custom_YOson)" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 137,317,680 |

| net_rshares | 169,655,355,620 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| felixxx | 0 | 169,655,355,620 | 11% |

That's interesting, and I like that you care about performance from the start. Great job. I was wondering how I could use custom_json without having a database. I'm not sure yet which path I will take, but this solution is something like what I had in mind. Good to see that I was not wrong about having a database, so that past data is easier to query when needed.

| author | vaipraonde |

|---|---|

| permlink | re-felixxx-sk95mx |

| category | dev |

| json_metadata | {"tags":["dev"],"app":"peakd/2024.8.7"} |

| created | 2024-09-23 06:21:00 |

| last_update | 2024-09-23 06:21:00 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-09-30 06:21:00 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 346 |

| author_reputation | 80,908,452,666,131 |

| root_title | "A Hacky Guide to Hive (part 2.2.2: custom_YOson)" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 137,332,517 |

| net_rshares | 0 |
