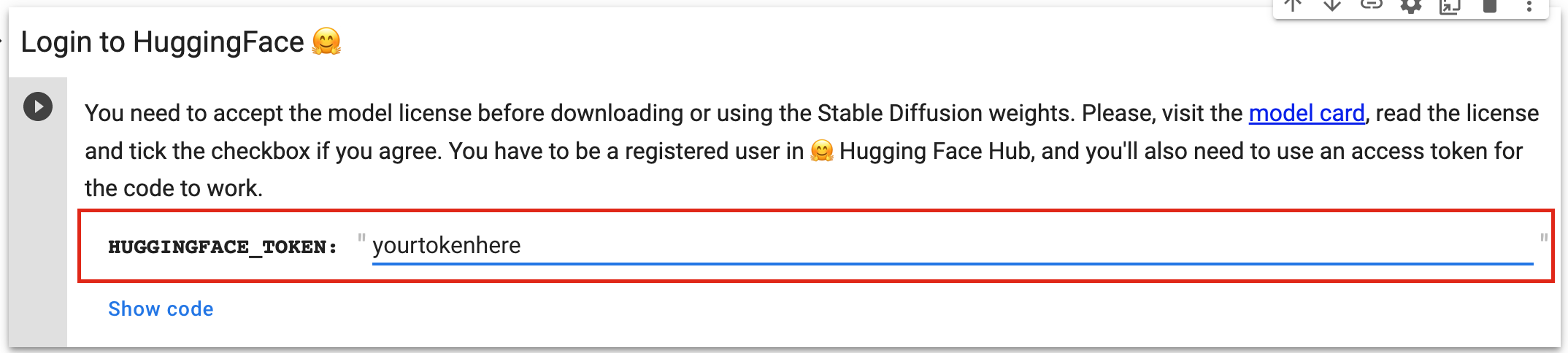

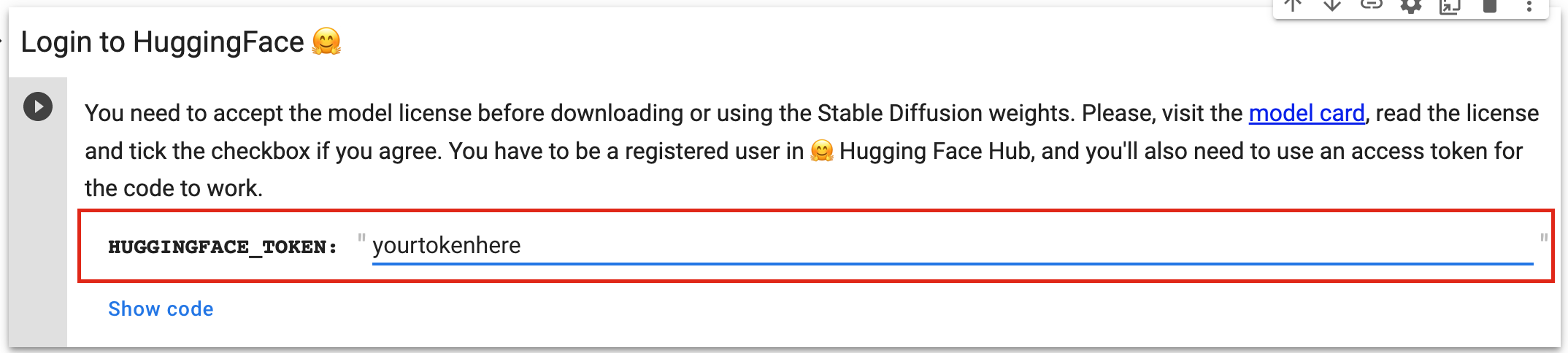

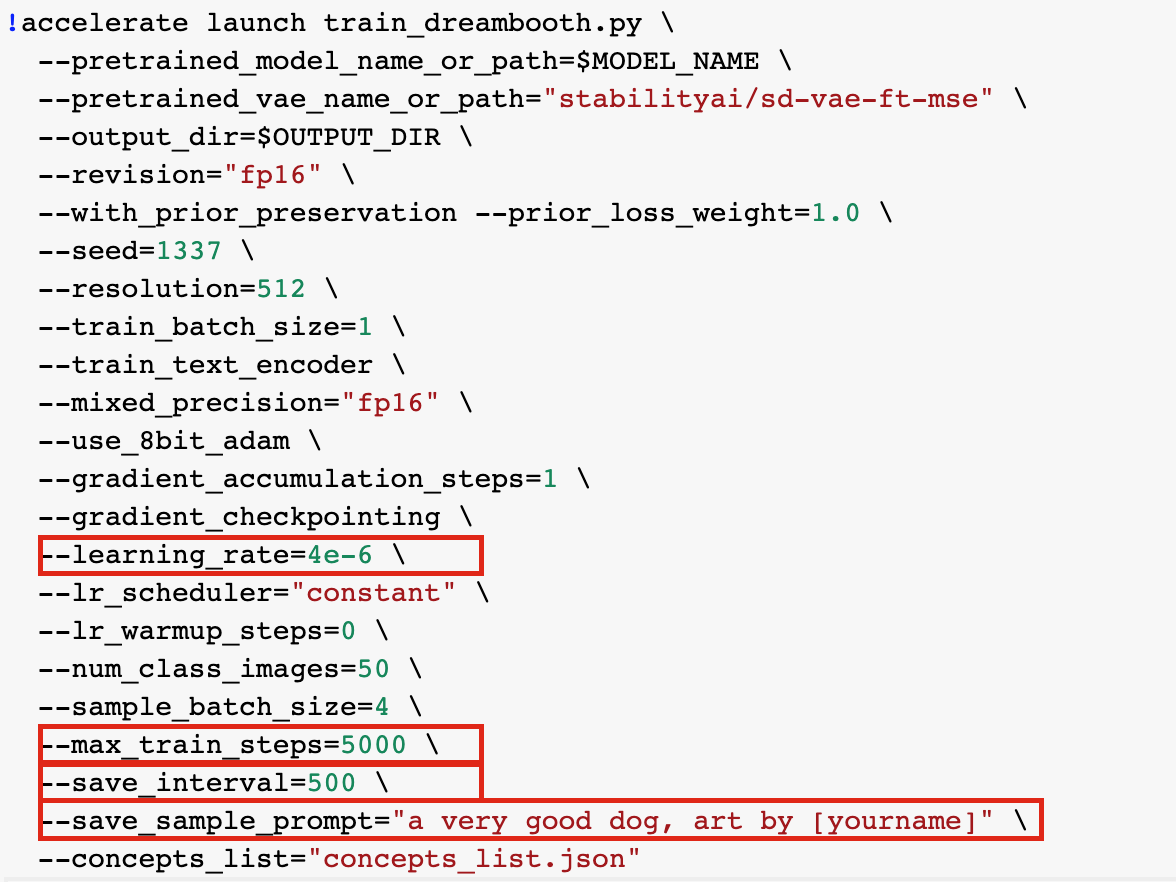

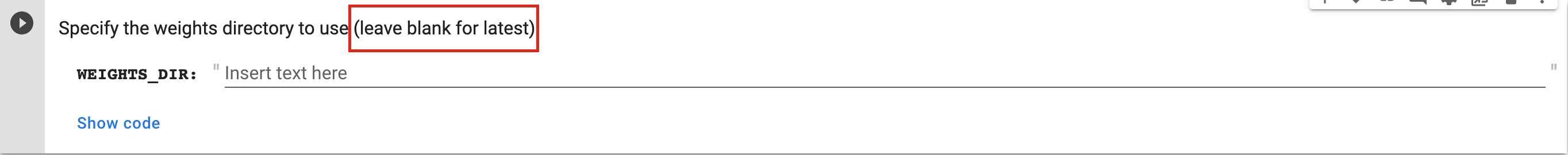

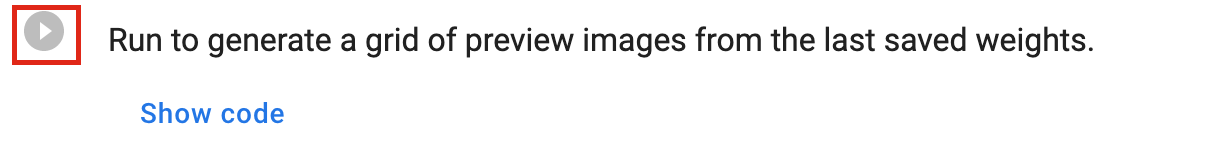

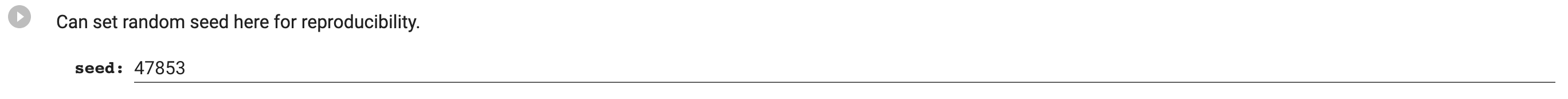

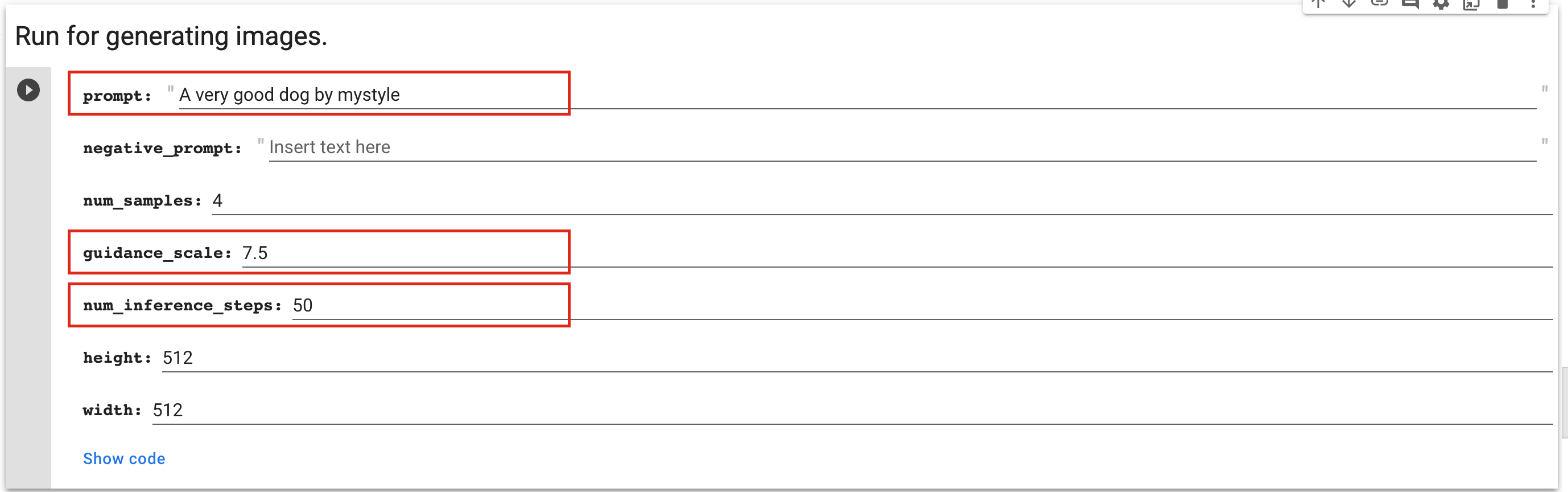

Stable Diffusion V2 [was released on November 23](https://stability.ai/blog/stable-diffusion-v2-release), and it is the first text-to-image model to be composed entirely of open-source components (previous versions still relied on OpenAI's CLIP model, whose dataset is closed-source). While V2 responds really well to prompts for a number of use cases right out of the box (albeit differently than its predecessors), one of the things it was designed to do best was to act as a base for fine-tuned models. Model creation is kind of my jam. I've been creating fine-tuned unconditional diffusion models like [Pixel Art Diffusion](https://github.com/KaliYuga-ai/Pixel-Art-Diffusion) and [Sprite Sheet Diffusion](https://hive.blog/hive-158694/@kaliyuga/coming-very-soon-sprite-sheet-diffusion) since June of this year, and I started training GANs in early 2020. I've also written a guide to [training your own unconditional diffusion model](https://peakd.com/hive-158694/@kaliyuga/training-your-own-unconditional-diffusion-model-with-minimal-coding), as well as [a guide to GAN-building](https://peakd.com/@kaliyuga/using-runwayml-to-train-your-own-ai-image-models-without-a-single-line-of-code). I'd already been working with a DreamBooth notebook to fine-tune Stable Diffusion 1.5 on my own datasets, so when Stable Diffusion V2 came out, I made a really simple fork of the notebook with V2 (and a few improved default settings) added in. In the few days since the release, a number of people have asked me for both the notebook and training tips, so I figured I'd kill two birds with one stone: share the notebook and write a training guide for it.
---

#### To complete this tutorial, you will need:

- A Google Colab account (Pro is recommended)
- [ImageAssistant](https://chrome.google.com/webstore/detail/imageassistant-batch-imag/dbjbempljhcmhlfpfacalomonjpalpko/related?hl=en), a Chrome extension for bulk-downloading images from websites
- [BIRME](https://www.birme.net/?target_width=512&target_height=512&rename=3image-xxx&rename_start=555), a bulk image cropper/resizer accessible from your browser
- [My Fork](https://github.com/KaliYuga-ai/DreamBoothV2fork/blob/main/DreamBooth_Stable_Diffusion_V2.ipynb) of [Shivam Shrirao's](https://github.com/ShivamShrirao) DreamBooth Colab notebook

---

## Step 1: **Gathering your dataset**

*This section is more or less a direct port from my [2020 piece](https://peakd.com/@kaliyuga/using-runwayml-to-train-your-own-ai-image-models-without-a-single-line-of-code) on training GANs, since dataset gathering and prep are basically the same for diffusion models. The only changes pertain to dataset size for DreamBooth.*

AI models generate new images based on the data you train them on. The algorithm's goal is to approximate as closely as possible the content, color, style, and shapes in your input dataset, and to do so in a way that matches the general relationships, angles, and sizes of objects in the input images. This means that a quality dataset is vital to developing a successful AI model.

If you want a very specific output that closely matches your input, the input has to be fairly uniform. For instance, if you want a bunch of generated pictures of [cats](https://thiscatdoesnotexist.com/), but your dataset includes birds and gerbils, your output will be less catlike overall than it would be if the dataset were made up of cat images only.
The angles of the input images matter, too: a dataset of cats in one uniform pose (probably an impossible thing, since cats are never uniform about *anything*) will create an AI model that generates more proportionally convincing cats. Click through the site linked above to see what happens when a more diverse set of poses is used. The end results are still definitely cats, but while some images are really convincing, others are eldritch horrors.

If you're interested in generating more experimental forms, a more diverse dataset might make sense, but you don't want to go too wild: if the AI can't find patterns and common shapes in your input, your output likely won't look like much.

Another important thing to keep in mind when building your input dataset is that both the quality and the quantity of images matter. Honestly, the more high-quality images you can find of your desired subject, the better, though the more uniform and simple the inputs, the fewer images the AI seems to need to get the picture. Even for uniform inputs in a non-DreamBooth model, I'd recommend no fewer than 1000 quality images for the best chance of creating a model that gives you recognizable outputs. For more diverse subjects, three or four times that number is closer to the mark, and even that might be too few. Really, just try to get as many good, high-res images as you can.

**For DreamBooth,** far fewer images are needed than for a full model. My datasets for it are usually around 30-70 high-quality images, and even that may be too many in a lot of cases.

But how do you get high-res images without manually downloading every single one? Many AI artists use some form of bulk downloading or web scraping. Personally, I use a Chrome extension called [ImageAssistant](https://chrome.google.com/webstore/detail/imageassistant-batch-imag/dbjbempljhcmhlfpfacalomonjpalpko/related?hl=en). This extension bulk-downloads all the loaded images on any given webpage into a .zip file.
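Once the .zip is extracted, a short Python sketch like the one below can sanity-check the folder: it counts the images against the rough 30-70 range mentioned above and flags byte-identical copies, since bulk downloaders can save the same picture more than once. This is not part of the notebook; the function names, the extension list, and the example folder name are hypothetical, and the range is a rule of thumb rather than a hard limit.

```python
import hashlib
from pathlib import Path
from typing import Dict, List

# Extensions treated as images (an assumption; adjust for your downloads).
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".webp"}


def list_images(folder: Path) -> List[Path]:
    """Return the image files directly inside `folder`, sorted by name."""
    return sorted(
        p for p in folder.iterdir()
        if p.is_file() and p.suffix.lower() in IMAGE_EXTENSIONS
    )


def find_duplicates(paths: List[Path]) -> Dict[str, List[Path]]:
    """Group byte-identical files by SHA-256 digest; keep only groups of 2+.

    Only exact copies are caught: a resized or re-encoded version of the
    same picture hashes differently and will not be flagged.
    """
    groups: Dict[str, List[Path]] = {}
    for path in paths:
        digest = hashlib.sha256(path.read_bytes()).hexdigest()
        groups.setdefault(digest, []).append(path)
    return {digest: group for digest, group in groups.items() if len(group) > 1}


def check_dataset(folder: Path, low: int = 30, high: int = 70) -> str:
    """Summarize the unique-image count against a rough range, plus duplicates."""
    images = list_images(folder)
    extra_copies = sum(len(g) - 1 for g in find_duplicates(images).values())
    unique = len(images) - extra_copies
    if unique < low:
        verdict = "on the small side"
    elif unique > high:
        verdict = "more than usual; may be more than you need"
    else:
        verdict = "within the usual range"
    return f"{unique} unique images ({extra_copies} duplicate copies): {verdict}"


# Example (the folder name is hypothetical):
# print(check_dataset(Path("my_dataset")))
```

Hashing file bytes keeps the check dependency-free; catching near-duplicates (the same image at a different size) would need a perceptual hash instead.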
The downsides of ImageAssistant are that it sometimes duplicates images, and it will also extract ad images, especially if you try to bulk-download Pinterest boards. There are Mac applications you can use to scan the download folder for duplicated images, though, and the ImageAssistant interface makes getting rid of unwanted ad images fairly easy. It's also WAY faster than downloading tons of images by hand.

Royalty-free images are obviously the best choice to download from a copyright perspective. AI outputs based on datasets containing copyrighted material are a somewhat grey area legally. That being said, it does seem to me that Creative Commons laws should cover such outputs, especially when the copyrighted material is not at all in evidence in the end product. I'm no lawyer, though, so use your discretion when choosing what to download. A safe, high-quality bet would be to search Getty Images for royalty-free images of whatever you're building an AI model to duplicate, and then bulk-download the results.

---

## Step 2: Preprocessing Your Dataset

This is where we get all of our images nicely cropped and uniform, so that the training notebook (which only processes square images) doesn't squash rectangular images into a 1:1 aspect ratio.

For this step, head over to [BIRME](https://www.birme.net/?target_width=512&target_height=512&rename=3image-xxx&rename_start=555) (**B**ulk **I**mage **R**esizing **M**ade **E**asy) and drag and drop the folder you've saved your dataset in. Once all your images upload (this might take a minute, depending on how many there are), you'll see that all but a square portion of each image is greyed out. The link I've provided should have "autodetect focal point" enabled, which will save you a ton of time manually choosing what you want included in the square, but you can also make your selections by hand if you wish. When you're satisfied with all the images you've selected, click "*save as Zip*."
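For anyone curious what a square crop "around a focal point" amounts to, here is a minimal Python sketch of the geometry: take the largest square that fits, center it on the chosen point, and clamp it to the image edges. It is only an illustration under that assumption, not BIRME's actual code, and it does not detect a focal point automatically; you supply one, or it defaults to the image center. The function name is hypothetical.

```python
from typing import Optional, Tuple


def square_crop_box(
    width: int,
    height: int,
    focus_x: Optional[float] = None,
    focus_y: Optional[float] = None,
) -> Tuple[int, int, int, int]:
    """Return (left, top, right, bottom) of the largest square inside a
    width x height image, centered on (focus_x, focus_y) as closely as the
    image edges allow. The focal point defaults to the image center."""
    side = min(width, height)
    fx = width / 2 if focus_x is None else focus_x
    fy = height / 2 if focus_y is None else focus_y
    # Center the square on the focal point...
    left = round(fx - side / 2)
    top = round(fy - side / 2)
    # ...then clamp it so it never extends past an image edge.
    left = max(0, min(left, width - side))
    top = max(0, min(top, height - side))
    return left, top, left + side, top + side


# Example: a 1920x1080 landscape photo with the subject near the left edge.
# square_crop_box(1920, 1080, focus_x=100) gives (0, 0, 1080, 1080).
```

If Pillow is installed, the returned tuple can be passed to `Image.crop()` and the result resized with `Image.resize((512, 512))` to get the same kind of 512x512 squares BIRME exports.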
We're saving the images as 512x512 squares because the training notebook doesn't much care what size the square images it's given are, and 512x512 gives it plenty of detail to work with. Saving the dataset at this size also means that if we decide to train at a different resolution in the future, we may not have to re-preprocess it.

---

## Step 3: Training your Model

Head over to the [DreamBooth notebook](https://github.com/KaliYuga-ai/DreamBoothV2fork/blob/main/DreamBooth_Stable_Diffusion_V2.ipynb). First, click the play button next to the "Check type of GPU and VRAM" section.

If this is the first time you've ever used a Colab notebook, note that you'll be clicking a lot of these. From here on out, I'll only mention the sections you need to enter information into or understand before hitting the play button, but generally speaking, if you see a play button, click it.

---

The first section you'll need to enter information into is "**Login to HuggingFace 🤗**". To fill it in, create an account on Hugging Face, agree to the ToS linked in the notebook, create a "Write" token in your account settings, and paste the token into the field in the notebook.

---

The next section you'll need to enter info into is "**Settings and run**". Here, specify your desired output directory in Drive. Type something you'll remember; the notebook will create the folder for you.

---

In the "**Define your Concepts List**" section, you will need to specify a few things:

**instance_prompt:** the word or phrase you want to use to evoke the style you're baking into Stable Diffusion. If you're training on a dataset of your own art, for instance, you might want to use "art by [your name]."

**class_prompt:** a prompt that invokes the category of thing your dataset belongs to. For instance, if your art is all pen-and-ink pointillism, try something like "pen-and-ink pointillist illustration".
It pays to test the prompt in Stable Diffusion before committing to it: if it generates crummy images, you may want to pick a prompt that works better.

**instance_data_dir:** the name you want for the folder your instance data is saved in

**class_data_dir:** the name you want for the folder your class data is saved in

---

The "**Training Settings**" section is mostly best left alone unless you're really experienced (or feeling adventurous!). The exceptions are as follows:

**learning_rate:** the default of 4e-6 seems to work pretty well for a diverse range of image types, but raising or lowering it may make more sense for your datasets. Experiment if you like; we might all learn something new and cool!

**max_train_steps:** You'll likely want to bump this up for datasets of more than about 30 images and down for smaller ones.

**save_interval:** how often your model saves weights and training samples to your Drive. The files are big and can quickly take up room, so if you have limited Drive space, delete older ones as you train.

**save_sample_prompt:** Change this to a prompt that makes sense for your model, and make sure to include your instance prompt! This prompt is used to generate the sample images saved at regular intervals (set with **save_interval**) during training.

---

## Step 4: Testing your Model

After you're done training, you'll definitely want to take your new model for a spin. This notebook makes that really easy to do.

First, you'll want to determine which checkpoint to use. You'll do this by:

1. Loading the latest weights directory
2. Generating a grid of all the sample images from the weights saved in your weights folder

Compare all of the samples with one another and choose the saved step number you like best.
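To see which step folders you'll be choosing between, here is a minimal Python sketch. It assumes, based on the step number at the end of the weights path, that each checkpoint is written to a subfolder of your output directory named after its training step, once every `save_interval` steps plus a final one at `max_train_steps`. The helper names are hypothetical and not functions from the notebook; the folder layout is an assumption you should check against your own Drive.

```python
from pathlib import Path
from typing import List, Optional


def expected_save_steps(max_train_steps: int, save_interval: int) -> List[int]:
    """Steps at which a checkpoint would be saved, assuming one save every
    `save_interval` steps plus a final save at `max_train_steps`."""
    steps = list(range(save_interval, max_train_steps + 1, save_interval))
    if not steps or steps[-1] != max_train_steps:
        steps.append(max_train_steps)
    return steps


def step_folders(output_dir: Path) -> List[int]:
    """Step numbers of the numerically named subfolders, sorted as numbers
    (so 800 comes before 1000, unlike a plain alphabetical sort)."""
    return sorted(
        int(p.name) for p in output_dir.iterdir()
        if p.is_dir() and p.name.isdigit()
    )


def weights_dir_for(output_dir: Path, step: Optional[int] = None) -> Path:
    """Folder for the chosen step, or for the latest saved step if omitted."""
    steps = step_folders(output_dir)
    if not steps:
        raise FileNotFoundError(f"no step folders found in {output_dir}")
    chosen = steps[-1] if step is None else step
    if chosen not in steps:
        raise ValueError(f"step {chosen} was not saved; available: {steps}")
    return output_dir / str(chosen)
```

`expected_save_steps` also gives a quick sense of how much Drive space a run will need: multiply its length by the size of one saved checkpoint folder from a previous run.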
Copy that weights folder's path into the weights_dir field (or just delete the number at the tail end of the path that's already there and type the step number you want in its place). After that, run the next two cells to package the weights directory into a smaller .ckpt file that can run in tools like Deforum Diffusion.

Now for the fun part! To test your model once it's all packaged up, enter an arbitrary seed number in the seed field...

...then enter whatever prompt you want in the prompt field, change the CFG scale and steps if you want (the defaults are the same settings that generated the sample images during training), and hit the play button to see your new model in action!

Before you forget, move the .ckpt file you've created out of the weights folder and into your main Drive so that you don't accidentally delete it when you're clearing up space later, and give it a name you'll be able to remember easily. Now you have a .ckpt file you can use in web interfaces to create anything you like in the specific style you've trained!

[I'll add some sample images from a model I created here later today, but I'm having Colab issues as I write this, so it'll have to wait until my resource credits recharge! Be excited, though: they're WAY neat.]
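The "move the .ckpt somewhere safe" step can also be done from a notebook cell. This is a minimal sketch, assuming the converted checkpoint is the only `.ckpt` file in the chosen weights folder and that Google Drive is mounted in Colab; the function name and the example paths are hypothetical. It copies rather than moves, so the original stays put until you've confirmed the copy, and it refuses to overwrite an existing file with the same name.

```python
import shutil
from pathlib import Path


def archive_checkpoint(weights_dir: Path, dest_dir: Path, new_name: str) -> Path:
    """Copy the single .ckpt file in `weights_dir` to `dest_dir/new_name.ckpt`."""
    ckpts = sorted(weights_dir.glob("*.ckpt"))
    if len(ckpts) != 1:
        raise FileNotFoundError(
            f"expected exactly one .ckpt in {weights_dir}, found {len(ckpts)}"
        )
    dest_dir.mkdir(parents=True, exist_ok=True)
    target = dest_dir / f"{new_name}.ckpt"
    if target.exists():
        # Never silently replace a model you saved earlier.
        raise FileExistsError(f"{target} already exists")
    shutil.copy2(ckpts[0], target)  # copy2 also preserves file timestamps
    return target


# Example (paths and name are hypothetical):
# archive_checkpoint(Path("/content/drive/MyDrive/my_model/1000"),
#                    Path("/content/drive/MyDrive/models"),
#                    "pen-and-ink-pointillism-step1000")
```

Putting the step number in the file name makes it easy to tell later which checkpoint a file came from.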

| author | kaliyuga |
|---|---|
| created | 2022-11-27 19:47:57 |
| last_update | 2022-11-27 20:13:12 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |

Cool art !GIF I LIKE IT

| author | bilpcoinbpc |
|---|---|
| created | 2022-11-28 11:07:06 |

<center>https://media.tenor.com/FGlZJXjxUIEAAAAC/i-like-that-helloiamkate.gif [Via Tenor](https://tenor.com/)</center>

| author | hivegifbot |
|---|---|
| permlink | re-re-kaliyuga-20221128t117528z-20221128t110727z |
| category | hive-158694 |
| json_metadata | "{"app": "beem/0.24.26"}" |
| created | 2022-11-28 11:07:27 |
| last_update | 2022-11-28 11:07:27 |
| depth | 2 |
| children | 0 |
| last_payout | 2022-12-05 11:07:27 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 118 |
| author_reputation | 38,192,557,766,504 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 118,672,521 |
| net_rshares | 0 |

You have to use Google Colab Pro to use these Colabs, right? They've been reducing GPUs for free users, and this type of training Colab would just crash for me the last time I tried.

| author | cotton88 |
|---|---|
| permlink | re-kaliyuga-rm1iwr |
| category | hive-158694 |
| json_metadata | {"tags":["hive-158694"],"app":"peakd/2022.11.1"} |
| created | 2022-11-28 04:24:30 |
| last_update | 2022-11-28 04:24:30 |
| depth | 1 |
| children | 1 |
| last_payout | 2022-12-05 04:24:30 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 167 |
| author_reputation | 2,755,364,218,525 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 118,667,726 |
| net_rshares | 0 |
| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |
|---|---|---|---|---|---|
| holoz0r | 0 | 0 | 100% |

If you keep your VRAM usage under 10 GB (I think that's the free-tier cutoff), you might be able to run this on a free account--I enabled a VRAM-conserving training flag.

| author | kaliyuga |
|---|---|
| permlink | re-cotton88-rm1ljt |
| category | hive-158694 |
| json_metadata | {"tags":["hive-158694"],"app":"peakd/2022.11.1"} |
| created | 2022-11-28 05:21:30 |
| last_update | 2022-11-28 05:21:30 |
| depth | 2 |
| children | 0 |
| last_payout | 2022-12-05 05:21:30 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 166 |
| author_reputation | 243,481,853,306,775 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 118,668,412 |
| net_rshares | 0 |
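
The reply above suggests keeping VRAM usage under roughly 10 GB so training can fit on a free Colab account. Below is a minimal sketch for checking GPU memory during a session. It assumes a GPU runtime where `nvidia-smi` is on the PATH. The 10 GB budget is only the author's estimate of the free-tier cutoff, not an official limit. The helper names (`parse_nvidia_smi`, `query_gpu_memory`, `within_budget`) are hypothetical and are not part of the notebook.

```python
from __future__ import annotations

import subprocess

# Assumption: ~10 GB is the author's rough guess at the free-tier cutoff, not an official limit.
VRAM_BUDGET_MIB = 10 * 1024


def parse_nvidia_smi(csv_text: str) -> list[tuple[int, int]]:
    """Parse the output of
    `nvidia-smi --query-gpu=memory.used,memory.total --format=csv,noheader,nounits`
    into one (used_mib, total_mib) pair per GPU."""
    pairs = []
    for line in csv_text.strip().splitlines():
        if not line.strip():
            continue  # skip any blank lines
        # int() tolerates the surrounding spaces nvidia-smi puts around each field
        used, total = (int(field) for field in line.split(","))
        pairs.append((used, total))
    return pairs


def query_gpu_memory() -> list[tuple[int, int]]:
    """Run nvidia-smi (hypothetical helper; requires a GPU runtime) and parse its output."""
    result = subprocess.run(
        ["nvidia-smi", "--query-gpu=memory.used,memory.total",
         "--format=csv,noheader,nounits"],
        capture_output=True, text=True, check=True,
    )
    return parse_nvidia_smi(result.stdout)


def within_budget(used_mib: int, budget_mib: int = VRAM_BUDGET_MIB) -> bool:
    """True if current usage is at or below the assumed budget."""
    return used_mib <= budget_mib
```

You can call `query_gpu_memory()` in a Colab cell while training is running. It returns one `(used_mib, total_mib)` pair per GPU, and `nvidia-smi` reports both values in MiB. The parsing is kept in a separate function from the subprocess call so it can be checked without a GPU.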

Very Nice!

| author | cryptoace33 |
|---|---|
| permlink | re-kaliyuga-rmgna6 |
| category | hive-158694 |
| json_metadata | {"tags":["hive-158694"],"app":"peakd/2022.11.2"} |
| created | 2022-12-06 08:22:15 |
| last_update | 2022-12-06 08:22:15 |
| depth | 1 |
| children | 0 |
| last_payout | 2022-12-13 08:22:15 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 10 |
| author_reputation | 354,107,382,127 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 118,890,050 |
| net_rshares | 0 |

Great information, but also a lot to do to run my own system.

| author | detlev |
|---|---|
| permlink | re-kaliyuga-rnllw5 |
| category | hive-158694 |
| json_metadata | {"tags":["hive-158694"],"app":"peakd/2022.12.1"} |
| created | 2022-12-28 11:14:27 |
| last_update | 2022-12-28 11:14:27 |
| depth | 1 |
| children | 0 |
| last_payout | 2023-01-04 11:14:27 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 66 |
| author_reputation | 1,712,460,333,914,020 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 119,438,806 |
| net_rshares | 0 |

great tutorial !PIZZA !HBIT

| author | ivanov007 |
|---|---|
| permlink | re-kaliyuga-rt6gok |
| category | hive-158694 |
| json_metadata | {"tags":["hive-158694"],"app":"peakd/2023.4.2"} |
| created | 2023-04-15 22:19:36 |
| last_update | 2023-04-15 22:19:36 |
| depth | 1 |
| children | 0 |
| last_payout | 2023-04-22 22:19:36 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 27 |
| author_reputation | 19,668,361,034 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 122,580,196 |
| net_rshares | 0 |

It's really helpful to have a step-by-step guide on how to use Stable Diffusion V2 and fine-tune it with custom datasets. Your tips on gathering and preparing the input dataset are especially useful. I tried out the DreamBooth notebook to see what kind of results I could get, and the results are tremendous! 🚀🚀🚀

| author | kedi |
|---|---|
| permlink | re-kaliyuga-rn1np2 |
| category | hive-158694 |
| json_metadata | {"tags":["hive-158694"],"app":"peakd/2022.11.2"} |
| created | 2022-12-17 16:41:24 |
| last_update | 2022-12-17 16:41:24 |
| depth | 1 |
| children | 0 |
| last_payout | 2022-12-24 16:41:24 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 314 |
| author_reputation | 35,093,878,622,748 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 119,185,543 |
| net_rshares | 0 |

Brilliant!

| author | litguru |
|---|---|
| permlink | re-kaliyuga-rm2byz |
| category | hive-158694 |
| json_metadata | {"tags":["hive-158694"],"app":"peakd/2022.11.2"} |
| created | 2022-11-28 14:52:12 |
| last_update | 2022-11-28 14:52:12 |
| depth | 1 |
| children | 0 |
| last_payout | 2022-12-05 14:52:12 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 11 |
| author_reputation | 244,950,647,652,290 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 118,676,782 |
| net_rshares | 0 |

awesome!!! !PIZZA

| author | n1t0 |
|---|---|
| permlink | re-kaliyuga-ro8weq |
| category | hive-158694 |
| json_metadata | {"tags":["hive-158694"],"app":"peakd/2023.1.1"} |
| created | 2023-01-10 01:06:27 |
| last_update | 2023-01-10 01:06:27 |
| depth | 1 |
| children | 0 |
| last_payout | 2023-01-17 01:06:27 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 19 |
| author_reputation | 1,044,943,615,577 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 119,752,679 |
| net_rshares | 0 |

bruh @avid.serosik mitte

| author | oniemaniego |
|---|---|
| permlink | re-kaliyuga-rn6ufo |
| category | hive-158694 |
| json_metadata | {"tags":["hive-158694"],"app":"peakd/2022.12.1"} |
| created | 2022-12-20 11:55:00 |
| last_update | 2022-12-20 11:55:00 |
| depth | 1 |
| children | 0 |
| last_payout | 2022-12-27 11:55:00 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 24 |
| author_reputation | 79,934,267,673,603 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 119,250,007 |
| net_rshares | 0 |

<center>PIZZA! The Hive.Pizza team manually curated this post. $PIZZA slices delivered: n1t0 tipped kaliyuga @ivanov007<sub>(2/15)</sub> tipped @kaliyuga <sub>You can now send $PIZZA tips in <a href="https://discord.gg/hivepizza">Discord</a> via tip.cc!</sub></center>

| author | pizzabot |
|---|---|
| permlink | re-training-a-dreambooth-model-using-stable-diffusion-v2-and-very-little-code-20221128t013525z |
| category | hive-158694 |
| json_metadata | "{"app": "beem/0.24.19"}" |
| created | 2022-11-28 01:35:24 |
| last_update | 2023-04-15 22:20:51 |
| depth | 1 |
| children | 0 |
| last_payout | 2022-12-05 01:35:24 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 274 |
| author_reputation | 7,467,658,822,216 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 118,665,703 |
| net_rshares | 1,405,878,145 |
| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |
|---|---|---|---|---|---|
| kaliyuga | 0 | 1,405,878,145 | 100% |

https://reddit.com/r/DreamBooth/comments/1108qfd/training_db_sample_images_look_so_much_better/ <sub> The rewards earned on this comment will go directly to the people sharing the post on Reddit as long as they are registered with @poshtoken. Sign up at https://hiveposh.com.</sub>

| author | redditposh |
|---|---|
| permlink | re-kaliyuga-training-a-dreambooth-model-using-stable-diffusion-1120 |
| category | hive-158694 |
| json_metadata | "{"app":"Poshtoken 0.0.2","payoutToUser":[]}" |
| created | 2023-02-12 06:10:42 |
| last_update | 2023-02-12 06:10:42 |
| depth | 1 |
| children | 0 |
| last_payout | 2023-02-19 06:10:42 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 282 |
| author_reputation | 2,089,956,989,197,756 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |
| beneficiaries | |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 0 |
| post_id | 120,705,909 |
| net_rshares | 0 |

Thanks for this info! I've tried running the Colab a few times, but it always errors out when I get to the conversion-to-ckpt step. It seems like it might be a Drive path issue, but I can't seem to track down the problem. (The error I get is: "FileNotFoundError: [Errno 2] No such file or directory: '4000/unet/diffusion_pytorch_model.bin'".) I tried doing that step locally, but when I try to use the converted ckpt in automatic, I just get brown noise, no matter what I prompt. Any ideas as to what I might be missing?

| author | redparis |
|---|---|
| permlink | re-kaliyuga-rm67sh |
| category | hive-158694 |
| json_metadata | {"tags":["hive-158694"],"app":"peakd/2022.11.2"} |
| created | 2022-11-30 17:12:15 |
| last_update | 2022-11-30 17:12:15 |
| depth | 1 |
| children | 0 |
| last_payout | 2022-12-07 17:12:15 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 524 |
| author_reputation | 0 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 118,737,608 |
| net_rshares | 0 |

This is lovely, I will give it a try soon.

| author | seunny |
|---|---|
| permlink | re-kaliyuga-rm20zr |
| category | hive-158694 |
| json_metadata | {"tags":["hive-158694"],"app":"peakd/2022.11.1"} |
| created | 2022-11-28 10:55:18 |
| last_update | 2022-11-28 10:55:18 |
| depth | 1 |
| children | 0 |
| last_payout | 2022-12-05 10:55:18 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 44 |
| author_reputation | 13,959,813,768,590 |
| root_title | "Training a Dreambooth Model Using Stable Diffusion V2 (and Very Little Code)" |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 118,672,332 |
| net_rshares | 0 |

hiveblocks
