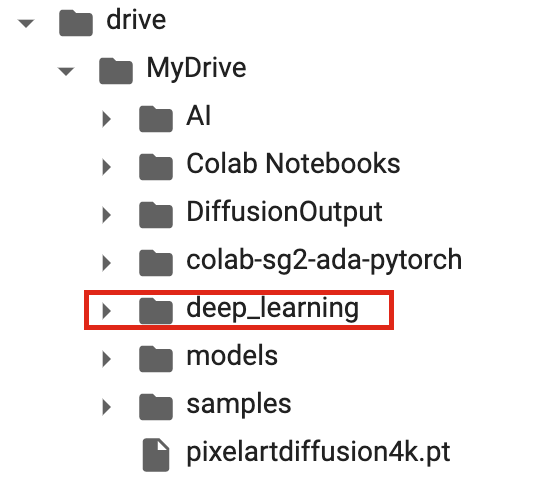

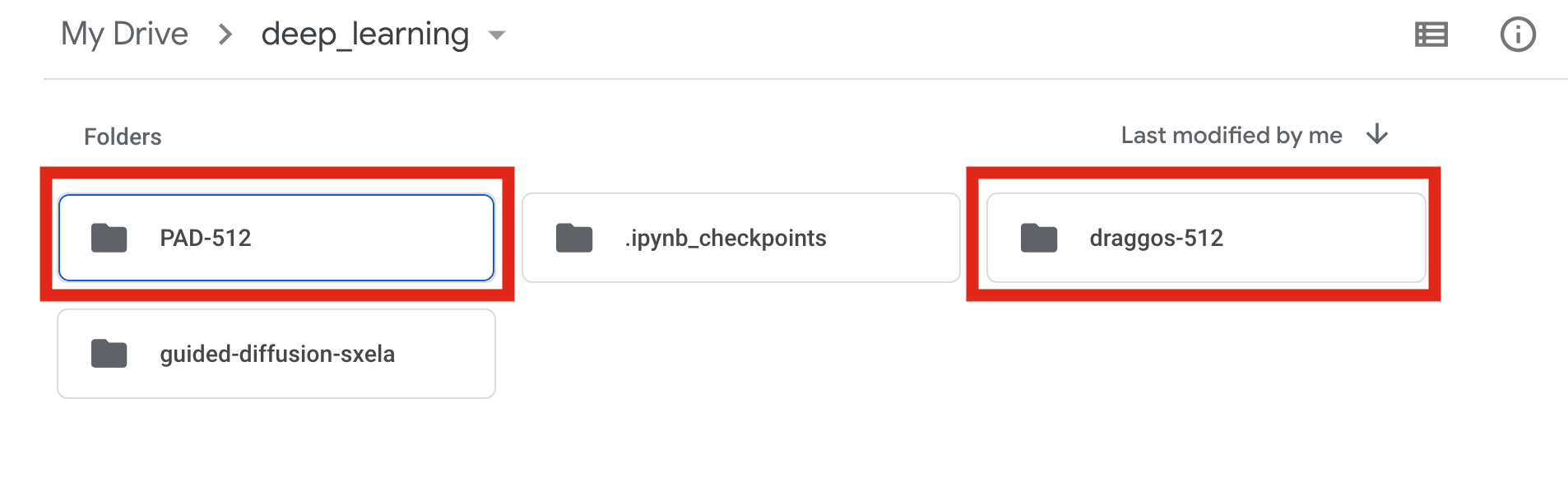

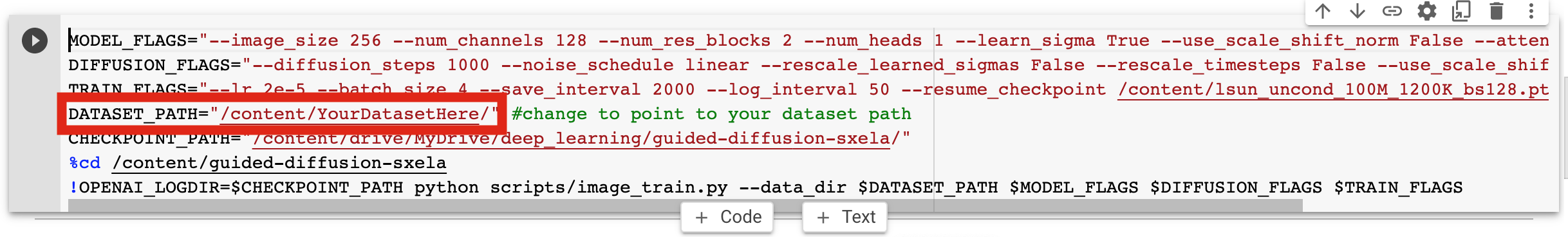

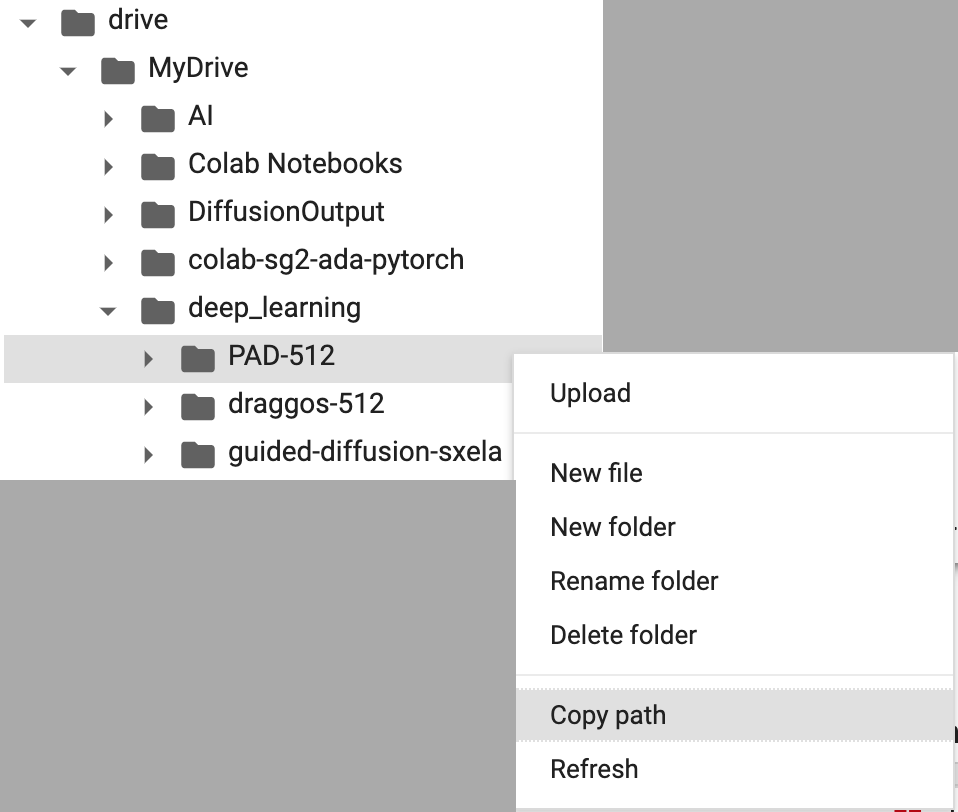

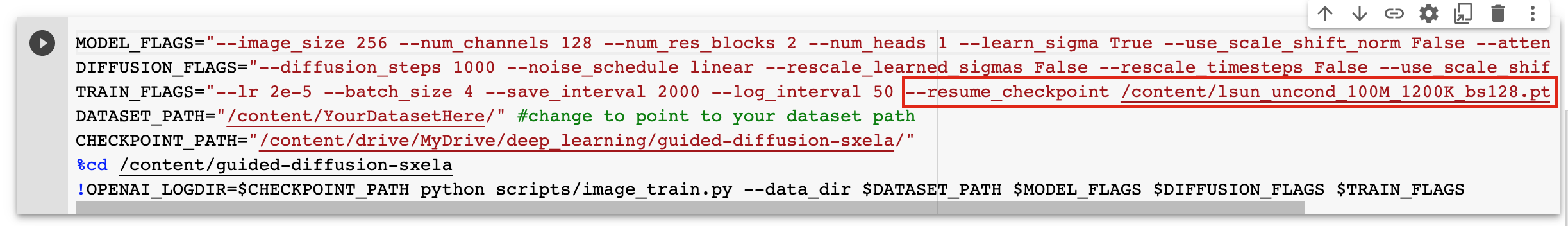

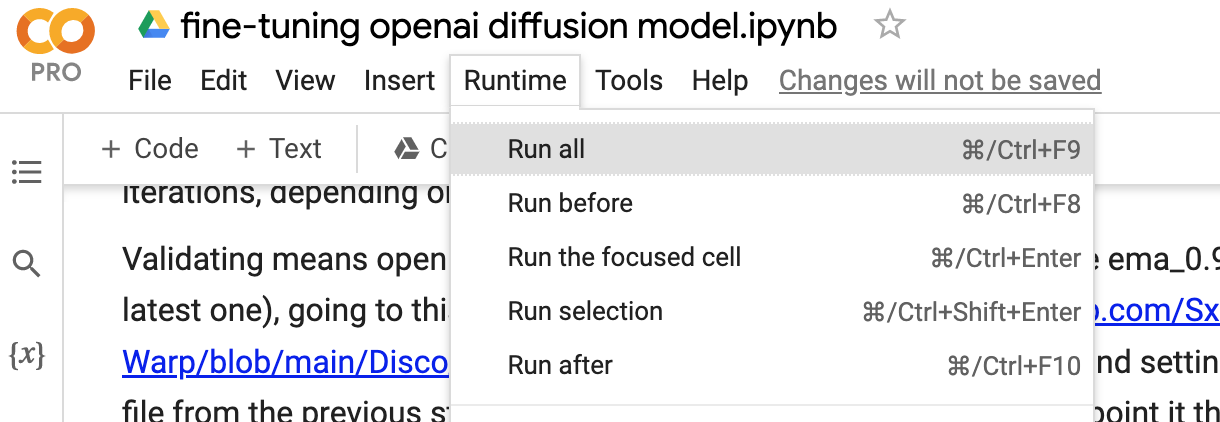

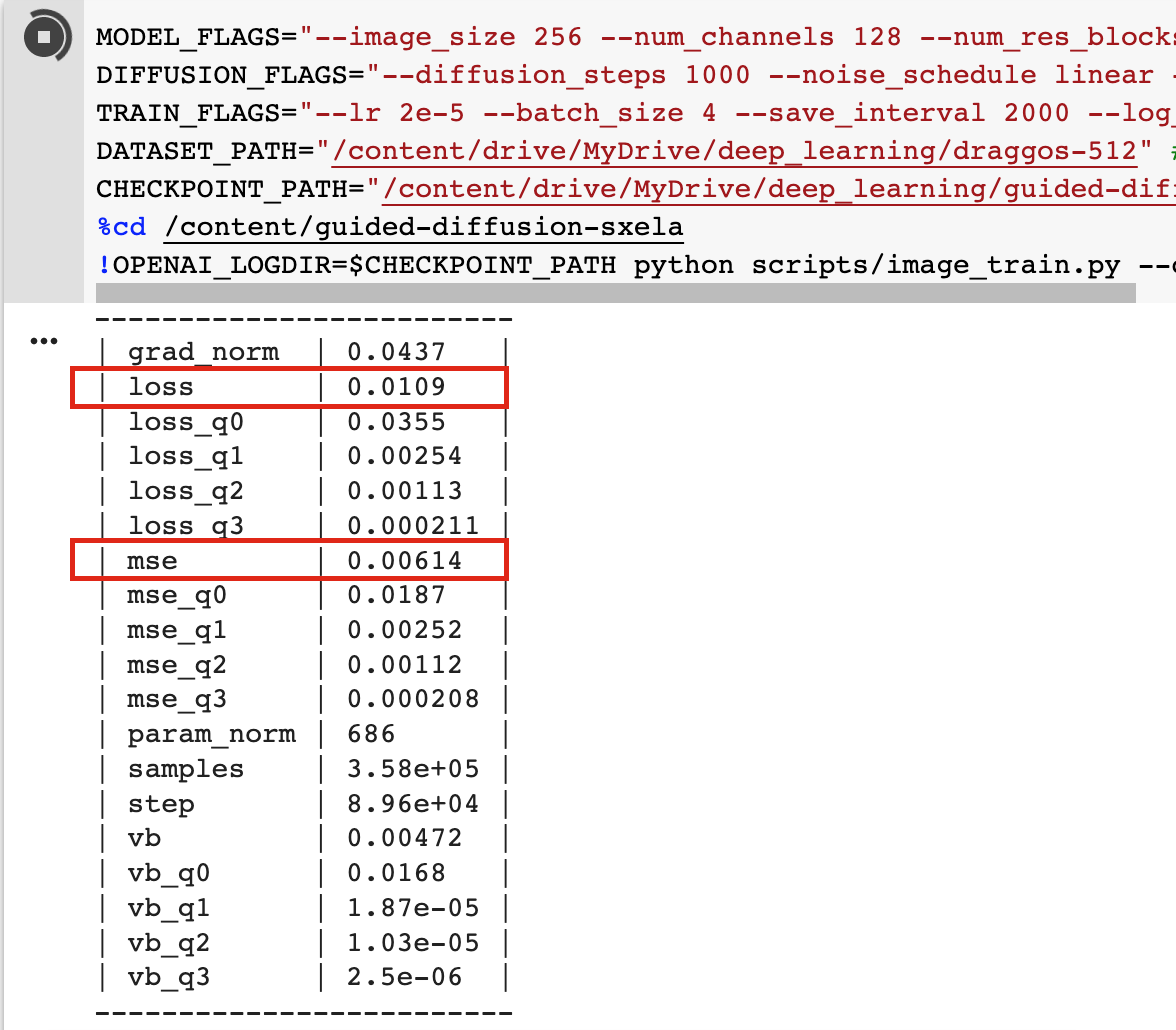

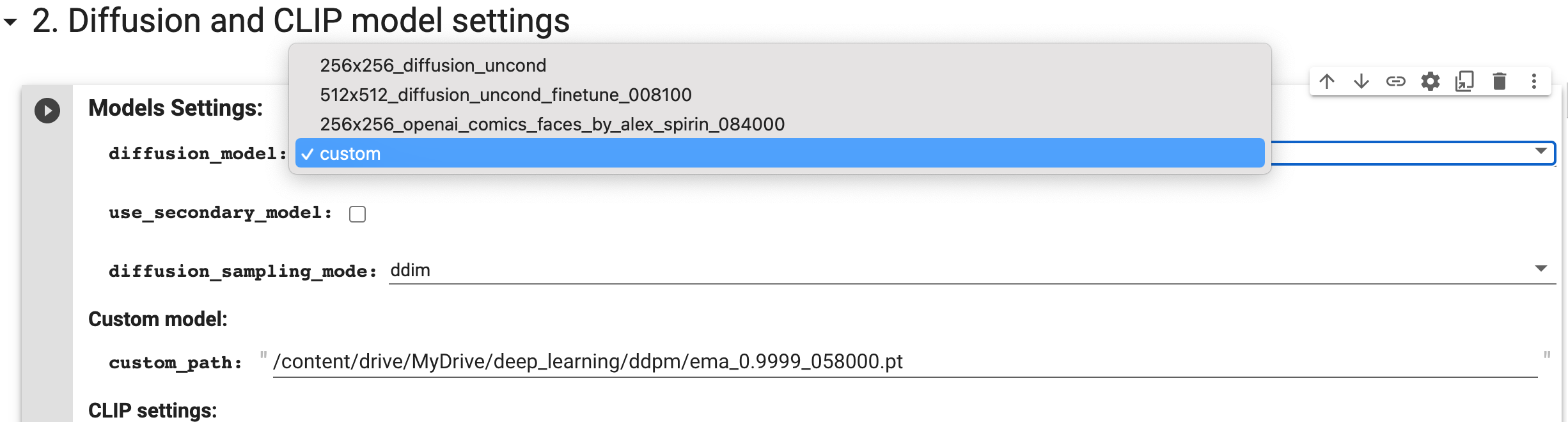

About two years ago, I wrote [my first how-to guide for non-coders](https://peakd.com/@kaliyuga/using-runwayml-to-train-your-own-ai-image-models-without-a-single-line-of-code) on training custom AI models. Back then, GANs were the most cutting-edge form of AI art. Today that title belongs to CLIP-guided diffusion models, but a lot of the underlying prep work for creating custom models remains similar, and creating custom models remains accessible even if you can't code at all.

In this article, I'll guide you through building an image dataset and then turning that dataset into a custom **CLIP-guided diffusion model**. More specifically, your end result will be an ***unconditional*** CLIP-guided diffusion model. This is a fancy way of saying that all you need to provide to the training notebook is an image dataset, with none of the metadata/associated text that would be used to train a conditional model. Due to technological wizardry, an unconditional model is still fully guidable by text inputs!

I won't be getting into the differences between GANs and diffusion models or how diffusion models work in this tutorial. If you're new to AI art and the concepts I'm discussing here, the recent [Vox piece](https://www.youtube.com/watch?app=desktop&v=SVcsDDABEkM) on CLIP-guided diffusion (which I'm actually featured in!) is a really good place to start; I recommend that you watch it first and maybe play around with tools like Disco Diffusion before launching into this. I've tried to make this guide pretty simple, but it's definitely written with the expectation that you have some experience with AI art and Colab notebooks.

---

#### To complete this tutorial, you will need:

- A Google Colab Pro account to train your model and navigate the final result
- [ImageAssistant](https://chrome.google.com/webstore/detail/imageassistant-batch-imag/dbjbempljhcmhlfpfacalomonjpalpko/related?hl=en), a Chrome extension for bulk-downloading images from websites
- [BIRME](https://www.birme.net/?target_width=512&target_height=512&rename=3image-xxx&rename_start=555), a bulk image cropper/resizer accessible from your browser
- [Alex Spirin's 256x256 OpenAI diffusion model fine-tuning colab notebook](https://colab.research.google.com/drive/1Xfd5fm4OnhTd6IHPMGcoqw54uhGT3HdF), for training a model based on your dataset
- [Custom Model-Enabled Disco Diffusion v 5.2 notebook](https://colab.research.google.com/github/Sxela/DiscoDiffusion-Warp/blob/main/Disco_Diffusion_v5_2_Warp_custom_model.ipynb) for periodically checking in on your model's training progress

-------

## Step 1: **Gathering your dataset**

*This section is more or less a direct port from my [2020 piece](https://peakd.com/@kaliyuga/using-runwayml-to-train-your-own-ai-image-models-without-a-single-line-of-code) on training GANs, since dataset gathering and prep are basically the same for diffusion models.*

AI models generate new images based upon the data you train the model on. The algorithm's goal is to approximate as closely as possible the content, color, style, and shapes in your input dataset, and to do so in a way that matches the general relationships/angles/sizes of objects in the input images. This means that collecting a quality dataset is vital to developing a successful AI model.

If you want a very specific output that closely matches your input, the input has to be fairly uniform. For instance, if you want a bunch of generated pictures of [cats](https://thiscatdoesnotexist.com/), but your dataset includes birds and gerbils, your output will be less catlike overall than it would be if the dataset were made up of cat images only.
Angles of the input images matter, too: a dataset of cats in one uniform pose (probably an impossible thing, since cats are never uniform about *anything*) will create an AI model that generates more proportionally convincing cats. Click through the site linked above to see what happens when a more diverse set of poses is used--the end results are still definitely cats, but while some images are really convincing, others are eldritch horrors.

If you're interested in generating more experimental forms, having a more diverse dataset might make sense, but you don't want to go too wild--if the AI can't find patterns and common shapes in your input, your output likely won't look like much.

Another important thing to keep in mind when building your input dataset is that both quality and quantity of images matter. Honestly, the more high-quality images you can find of your desired subject, the better, though the more uniform/simple the inputs, the fewer images seem to be absolutely necessary for the AI to get the picture. Even for uniform inputs, I'd recommend no fewer than 1000 quality images for the best chance of creating a model that gives you recognizable outputs. For more diverse subjects, three or four times that number is closer to the mark, and even that might be too few. Really, just try to get as many good, high-res images as you can.

But how do you get that number of high-res images without manually downloading every single one? Many AI artists use some form of bulk-downloading or web scraping. Personally, I use a Chrome extension called [ImageAssistant](https://chrome.google.com/webstore/detail/imageassistant-batch-imag/dbjbempljhcmhlfpfacalomonjpalpko/related?hl=en). This extension bulk-downloads all the loaded images on any given webpage into a .zip file. The downsides of ImageAssistant are that it sometimes duplicates images, and it will also extract ad images, especially if you try to bulk-download Pinterest boards.
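If you're comfortable pasting a few lines of Python into a Colab cell, the duplicate problem can also be handled with a short script. This is only a minimal sketch under my own assumptions: the function names and the list of image extensions are made up for illustration, and it only reports files whose bytes are exactly identical to an earlier file.

```python
import hashlib
from pathlib import Path

# Extensions treated as images; an assumption, adjust for your own downloads.
IMAGE_EXTENSIONS = {".jpg", ".jpeg", ".png", ".webp", ".gif", ".bmp"}


def file_digest(path: Path) -> str:
    """Return the SHA-256 hex digest of a file's bytes."""
    digest = hashlib.sha256()
    with path.open("rb") as handle:
        # Read in 1 MiB chunks so large images don't need to fit in memory.
        for chunk in iter(lambda: handle.read(1 << 20), b""):
            digest.update(chunk)
    return digest.hexdigest()


def find_exact_duplicates(folder: str) -> list[Path]:
    """List image files whose bytes match an earlier file (in sorted path order)."""
    seen: dict[str, Path] = {}
    duplicates: list[Path] = []
    for path in sorted(Path(folder).rglob("*")):
        if not path.is_file() or path.suffix.lower() not in IMAGE_EXTENSIONS:
            continue
        key = file_digest(path)
        if key in seen:
            duplicates.append(path)  # same bytes as seen[key]
        else:
            seen[key] = path
    return duplicates
```

The sketch doesn't delete anything; it just returns a list you can look over first. Copies that were resized or recompressed have different bytes, so they won't be flagged.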
There are Mac applications that you can use to scan the download folders for duplicated images, though, and the ImageAssistant interface makes getting rid of unwanted ad images fairly easy. Either way, it's WAY faster than downloading thousands of images by hand.

Images that are royalty-free are obviously the best choice to download from a copyright perspective. AI outputs based on datasets with copyrighted material are a somewhat grey area legally. That being said, it does seem to me that Creative Commons laws should cover such outputs, especially when the copyrighted material is not at all in evidence in the end product. I'm no lawyer, though, so use your discretion when choosing what to download. A safe, high-quality bet would be to search on Getty Images for royalty-free images of whatever you're building an AI model to duplicate, and then bulk-download the results.

Sometimes, in spite of exhaustive web searches, you just won't have quite enough images. In cases like that, a little [data augmentation](https://heartbeat.fritz.ai/research-guide-data-augmentation-for-deep-learning-7f141fcc191c) is called for. By bulk-flipping your images horizontally, you can double the size of your dataset without compromising its diversity. I generally practice this on all my datasets, even if I have a reasonably sized dataset to start with.

-------

## Step 2: Preprocessing Your Dataset

This is where we get all of our images nice and cropped/uniform so that the training notebook (which only processes square images) doesn't squash rectangular images into 1:1 aspect ratios. For this step, head over to [BIRME](https://www.birme.net/?target_width=512&target_height=512&rename=3image-xxx&rename_start=555) (**B**ulk **I**mage **R**esizing **M**ade **E**asy) and drag/drop the file you've saved your dataset in. Once all your images upload (this might take a minute, depending on the number of images), you'll see that all but a square portion of each image you've uploaded is greyed out.
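For anyone who would rather script this step, the square crop, plus the horizontal-flip augmentation from Step 1, can be sketched in Python. To be clear about the assumptions: this is not what BIRME runs internally, it uses a plain centered crop rather than any focal-point detection, it relies on the third-party Pillow library, and the function names and output file naming are ones I've made up for illustration.

```python
from pathlib import Path


def center_square_box(width: int, height: int) -> tuple[int, int, int, int]:
    """Return (left, upper, right, lower) for the largest centered square."""
    side = min(width, height)
    left = (width - side) // 2
    upper = (height - side) // 2
    return (left, upper, left + side, upper + side)


def crop_resize_and_flip(src_dir: str, dst_dir: str, size: int = 512) -> int:
    """Save a size x size crop and its mirror image for each PNG/JPEG in src_dir."""
    # Pillow is third-party (pip install Pillow); it is imported here so the
    # helper above can be used without it.
    from PIL import Image, ImageOps

    out = Path(dst_dir)
    out.mkdir(parents=True, exist_ok=True)
    written = 0
    for path in sorted(Path(src_dir).iterdir()):
        if path.suffix.lower() not in {".png", ".jpg", ".jpeg"}:
            continue
        with Image.open(path) as img:
            square = img.convert("RGB").crop(center_square_box(*img.size))
            square = square.resize((size, size), Image.LANCZOS)
            square.save(out / f"{path.stem}.png")
            # Mirroring left-to-right gives the second, flipped copy.
            ImageOps.mirror(square).save(out / f"{path.stem}-flipped.png")
            written += 2
    return written
```

A centered crop will happily cut off a subject that sits near the edge of the frame, so it's worth spot-checking the output folder before training on it.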
The link I've provided should have "autodetect focal point" enabled, which will save you a ton of time manually choosing what you want included in the square, but you can also make your selections by hand if you wish. When you're satisfied with all the images you've selected, click "*save as Zip*."

We're choosing to save images as 512x512 squares instead of 256x256 squares because even though our model outputs will be 256x256, the training notebook doesn't care what size the square images it's provided are. Saving our dataset as 512x512 images means that, should we decide to train a 512x512 model in the future, we don't have to re-preprocess our dataset.

-------

## Step 3: Training your Model

Head over to the [fine-tuning colab notebook](https://colab.research.google.com/drive/1Xfd5fm4OnhTd6IHPMGcoqw54uhGT3HdF) and connect to Google Drive. Once it's connected, you'll see a new folder has been added to your Drive: "**deep-learning**."

Now hop over to your Google Drive and place the unzipped folder containing your cropped dataset in the deep-learning folder (**NOT** in any of the subfolders within deep-learning). Name it something descriptive if you want. In the example below are two of my dataset folders, PAD-512 (my current [Pixel Art Diffusion](https://colab.research.google.com/github/KaliYuga-ai/Pixel-Art-Diffusion/blob/main/Pixel_Art_Diffusion_v3_0_(With_Disco_Symmetry).ipynb) dataset) and draggos-512, which is for a custom dragon pixel art model I'm currently tweaking.

Once your images finish uploading to Drive, head back over to the fine-tuner and have a look at the cell with red text. You'll only need to touch one of the things in this cell right now--your **dataset path**. This is the direct path within Drive that leads to the folder containing the dataset of images we just uploaded.
To find it, click on the drop-down menu beneath deep-learning in your file browser, find your dataset folder with the descriptive name that we just made, right-click, and select "*copy path*." From there, just go back to the cell with the red text and paste your path where it specifies that you should.

We're now ready to begin training **if this is a new model**. Restarting models that have stopped training from a saved checkpoint takes one more step, but is still really easy. All you'll need to do is replace the default checkpoint (LSUN's bedroom model which--yup--generates bedrooms) with your most recent saved .pt file.

The hardest part of this is making sure you choose the right saved file, as the fine-tuner will save three types every 2000 steps. For restarting from a saved .pt, you'll want your most recent *model* .pt file. See the below gif for where these are located.

https://i.imgur.com/Vr879WI.gif

Copy the path to the .pt file like we did for our dataset, and paste it in place of the LSUN .pt file. Your training should now resume from where you left off when you start your run.

To start the run, simply head up to the top of the notebook, select "Runtime," and then click "Run all."

Your training has now begun!!!

----

## Step 4: Monitoring Your Model While It Trains

Once your training starts, you'll notice that, every 50 training steps, a new log appears below the cell with the red text. This is your training log, and it's the information that gets saved in the log(n).txt files. There are a lot of values in this log, and I'm not even going to pretend I know what all of them mean. I just focus on two values: Loss and MSE. Again, I don't pretend to be able to explain what these values measure, exactly (you can google it if you're curious and a math person), but the basic goal of training is to get each of these numbers as close to 0 as possible without over-training your model.
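If the numbers in the log bounce around too much to read a trend at a glance, smoothing them can help. Here's a minimal sketch, assuming you've copied the Loss (or MSE) values out of the log by hand into a Python list; `moving_average` is a helper name I've made up for illustration, not something from the fine-tuning notebook.

```python
def moving_average(values: list[float], window: int = 3) -> list[float]:
    """Average each value with up to window - 1 of the values before it."""
    if window < 1:
        raise ValueError("window must be at least 1")
    smoothed = []
    for index in range(len(values)):
        # Early entries have fewer predecessors, so their window is shorter.
        start = max(0, index - window + 1)
        chunk = values[start : index + 1]
        smoothed.append(sum(chunk) / len(chunk))
    return smoothed
```

Feed it the values from your own log; a larger `window` gives a smoother line but reacts more slowly to real changes.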
Over-training is what happens when your model fits too well to the dataset and starts spitting out images very close to or identical to images it trained on. ***We do not want this***. What we want is a model that produces novel images that *could have come* from the training data. The LSUN checkpoint that we begin training from is used because it has a really low score already, meaning that, hopefully, you'll have less work getting your model to this point. When your model starts, the Loss/MSE will be around .02. In the example below, I've gotten each down to a much lower number.

Numbers are all well and good, but sometimes *seeing* what your model is capable of producing is the most fun--and helpful. To do this, we'll use Alex Spirin's [fork of Disco Diffusion v 5.2](https://colab.research.google.com/github/Sxela/DiscoDiffusion-Warp/blob/main/Disco_Diffusion_v5_2_Warp_custom_model.ipynb?), which allows us to load a custom model from Drive into Disco.

To load your custom model into the notebook, make sure you connect to the same Google Drive account as you did with the fine-tuner. Once connected, you'll want to copy the path of your most recent ema checkpoint (NOT model, like we did above when restarting from a saved .pt). Scroll down to "**2. Diffusion and CLIP model settings**", expand the cell, select "custom" from the drop-down menu, paste your checkpoint path in place of the default one, and your model should load when you run the notebook!

You can use this to check on your new .pts as they're created, and before you know it, you'll have your own, fully-trained diffusion model!

-----------

That's really all there is to training your own custom diffusion model! It's really not as daunting a task as it may seem from the outset; that being said, if you have questions or if you run into issues, don't hesitate to reach out. I can't wait to see what everyone creates!
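One last aside for the code-curious: picking the right saved file (the most recent *model* .pt for resuming training, the most recent *ema* .pt for Disco Diffusion) comes down to finding the highest step number among files of the right type. Here's a minimal sketch. The filename pattern is an assumption on my part (a type prefix, with the step number as the last run of digits before `.pt`), and `latest_checkpoint` is a made-up helper, so check both against the files in your own Drive folder.

```python
import re
from typing import Optional

# Assumed naming: a kind prefix ("model", "ema", ...) and the training step
# as the last run of digits before ".pt". Verify against your own files.
STEP_PATTERN = re.compile(r"(\d+)\.pt$")


def latest_checkpoint(filenames: list[str], kind: str) -> Optional[str]:
    """Return the filename of the given kind with the highest step number."""
    best_name, best_step = None, -1
    for name in filenames:
        match = STEP_PATTERN.search(name)
        if not name.startswith(kind) or match is None:
            continue
        step = int(match.group(1))
        if step > best_step:
            best_name, best_step = name, step
    return best_name
```

For example, calling it with `kind="model"` picks the file to resume training from, and `kind="ema"` picks the one to load into Disco Diffusion. It returns `None` if nothing matches.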
If you found this guide useful, please feel free (but in no way obligated) to sign up for my [Patreon](https://www.patreon.com/kaliyuga_ai) or follow me on [Twitter](https://twitter.com/KaliYuga_ai) if you haven't already--I'm always up to some sort of AI art stuff on both!

| author | kaliyuga |
|---|---|
| permlink | training-your-own-unconditional-diffusion-model-with-minimal-coding |
| category | hive-158694 |
| tags | tutorial, aiart, experimental, digitalart, generativeart |
| created | 2022-06-29 02:41:57 |
| root_title | "Training Your Own Unconditional Diffusion Model (With Minimal Coding)" |



Good question! There's no hard-and-fast rule. I'd say stop when things look good enough :)

| author | kaliyuga |
|---|---|
| permlink | re-lavista-re8csf |
| created | 2022-06-29 08:26:42 |
| depth | 2 |

I would like to add one thing. If your dataset consists of images in 256x256 or 512x512 format only, there are tiling issues when you try to generate an image at a higher resolution than this. I ran into this with my first trained model. So, with my second model, I kept the higher-resolution files as they were and did not resize them, and the tiling issues disappeared. On a side note, I tried generating images in rectangular proportions, and everything worked well, with no tiling issues. So, I think it is best to train with the highest image sizes we can afford to train with, in square formats....

| author | lavista |
|---|---|
| permlink | re-kaliyuga-re8epv |
| created | 2022-06-29 09:08:21 |
| depth | 1 |

Hello, great tutorial! Do you suggest using a large dataset? I have a dataset that contains about 150k images, and I couldn't get the loss and MSE low enough. Should I try training for more steps or make the dataset smaller? Thanks.

| author | penguin.jpg |
|---|---|
| permlink | re-kaliyuga-rghf01 |
| created | 2022-08-12 03:00:06 |
| depth | 1 |

Great tutorial! Just one question: I'm training with a set of cartoon characters. Will CLIP know who they are, or at least what they are, so I can use their names in the text prompts for Disco Diffusion to generate a new character?

| author | sean-art-visual |
|---|---|
| permlink | re-kaliyuga-rgucw1 |
| created | 2022-08-19 02:43:21 |
| depth | 1 |

Can the models trained in this process be used in Stable Diffusion? (Disco Diffusion's generation time is slow.. 😢)

| author | yamkaz |
|---|---|
| permlink | re-kaliyuga-rgzefu |
| created | 2022-08-21 20:04:42 |
| depth | 1 |
