Every day I create a hundred or more images in Midjourney as part of my Daily Echoes project. I have a structure for how I create the images that has become pretty much routine.

Midjourney has just made version 7 the default and has redone its style references. I love the style references, especially **--sref random**, which churns up a variety of style codes for my images.

Today I decided to try something different. I started with the prompt for one of my images and reconstructed it, one step at a time. I didn’t get the original image but I expected that. I was surprised how close it came though.

Here’s how it went.

I structure all my prompts as:

*colour elements | style elements | visual elements | parameters*

Here’s the prompt I started with:

> Dawn pink, golden mist, pale blue, mountain shadow | Luminous natural surrealism with symbolic mirroring | As the sun crests a distant mountain, it casts a glow across two figures just about to kiss; the landscape mimics their movement--two peaks leaning inward, branches touching, light held like breath | --s 600
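The four-part structure is easy to sketch in code. This is only a minimal illustration in Python, not something Midjourney provides: `build_prompt` and its argument names are hypothetical, and it simply joins the sections with pipes in the order listed above.

```python
def build_prompt(colours, style, visual, parameters):
    """Join the four prompt sections with ' | ' (hypothetical helper)."""
    # Colour elements are kept as a list and written out comma-separated.
    parts = [", ".join(colours), style, visual, parameters]
    return " | ".join(part.strip() for part in parts)


prompt = build_prompt(
    colours=["Dawn pink", "golden mist", "pale blue", "mountain shadow"],
    style="Luminous natural surrealism with symbolic mirroring",
    visual=(
        "As the sun crests a distant mountain, it casts a glow across two "
        "figures just about to kiss; the landscape mimics their movement--two "
        "peaks leaning inward, branches touching, light held like breath"
    ),
    parameters="--s 600",
)
print(prompt)
```

Keeping the sections separate like this makes it simple to swap out only the parameters, which is what the rest of this post does.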

<center>

</center>

I started with just the stylize parameter (**--s**) to create my base image. I love using the personalization profiles. Midjourney has both a personal profile and moodboards as part of its personalization.

The personal profile is created by ranking pairs of images. Every time I rank more images, the profile code changes. I have several of them.

I’ve found the moodboards a lot more fun to create.

When I want to create a moodboard I open a new one and add images to it. I can upload images from various sources or select images from my existing gallery of images.

This means that I can control the styles of the images the board is created from.

I create a sample of my personalization codes using a set prompt and parameters. Then I have ChatGPT provide me with an analysis based on the aesthetics in the sample. It gives me input based on:

- Colour Palette

- Lighting and Texture

- Composition and Form

- Emotional Feel

- Stylistic Hints

This gives me a guide when I’m selecting which codes I’m going to use. When I’m doing my Daily Echoes I use a personal profile code and at least one moodboard code in the prompts.

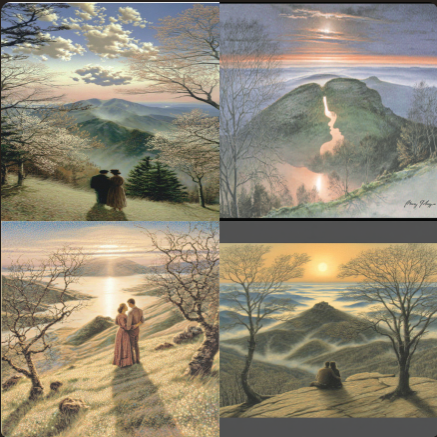

For this next image set I added my personal profile code: **--s 600 --p bqmkh8s**

<center>

</center>

I do like the images in this set. I’ve noticed that even though I get presented with a wide range of images in pairs to rank, my personal style code doesn’t change very much.

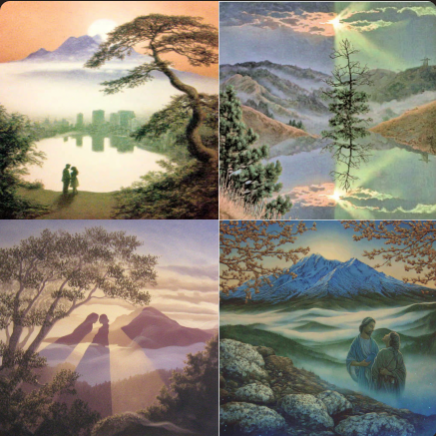

Next I’m going to add my choice of moodboard: **--s 600 --p bqmkh8s 3tp1vwl**

<center>

</center>

The image in the header of this post is the image I started with. If you look at the panels I’ve generated so far, there is at least one image in each panel that sort of resembles the image.

Next I’m going to add the chaos parameter.

This one takes values from 0 to 100. The larger the value, the more variation it puts into the images: **--s 600 --c 45 --p bqmkh8s 3tp1vwl**

<center>

</center>

Well this certainly added some chaos. None of the images in the panel really resemble the starting image. There’s more to add yet.

This next parameter I’ve been experimenting with but don’t really know what it’s supposed to do.

Midjourney introduced it in the Alpha version of version 7 and vaguely explained it as influencing the aesthetics of the image. Sometimes I use it, sometimes I leave it out: **--s 600 --c 45 --exp 5 --p bqmkh8s 3tp1vwl**

<center>

</center>

As you can see, using it has brought some resemblance to the original image back to a couple of the pictures in the panel.

Now I start to introduce the style reference parameter.

Well, actually, I had introduced it previously.

I do a set of prompts using a base set of parameters and then I do them again adding **--sref random**, which generates a different style code for each prompt.

I select some of the codes I like the best and apply them to all of the prompts. With version 7 and the newly revised style reference system, I can have the codes use version 6.1 by adding **--sv 4**, or I can let them default to version 7 or use **--sv 6**.

Using the code that was used for this image, I used version 7 to see what it would give me: **--s 600 --c 45 --exp 5 --p bqmkh8s 3tp1vwl --sref 519241046**

<center>

</center>

I had read that the new style reference was very different. The resemblance to the original image is minimal at best here.

Now, let’s try the version 6.1 style reference that I actually used: **--s 600 --c 45 --exp 5 --p bqmkh8s 3tp1vwl --sref 519241046 --sv 4**

<center>

</center>

Well that brought back one image that started to resemble the original image.

Finally, I add the style weight parameter.

This takes values from 0 to 1000, with higher values applying the style reference more strongly.

I used 350 on this set: **--s 600 --c 45 --exp 5 --p bqmkh8s 3tp1vwl --sref 519241046 --sv 4 --sw 350**
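By this point the parameter string has been built up one flag at a time. Here is a minimal Python sketch of that layering. The `build_parameters` helper and its argument names are hypothetical, and the range checks are assumptions based on the values described in this post rather than an official specification.

```python
def build_parameters(stylize=None, chaos=None, exp=None,
                     profiles=(), sref=(), sv=None, sw=None):
    """Assemble a parameter string in the order used in this post."""
    # Assumed ranges for illustration only; check Midjourney's own docs.
    if chaos is not None and not 0 <= chaos <= 100:
        raise ValueError("chaos is expected to be between 0 and 100")
    if sw is not None and not 0 <= sw <= 1000:
        raise ValueError("style weight is expected to be between 0 and 1000")

    parts = []
    if stylize is not None:
        parts.append(f"--s {stylize}")
    if chaos is not None:
        parts.append(f"--c {chaos}")
    if exp is not None:
        parts.append(f"--exp {exp}")
    if profiles:
        # Personal profile and moodboard codes share one --p flag.
        parts.append("--p " + " ".join(profiles))
    if sref:
        # Style codes (or image links) share one --sref flag.
        parts.append("--sref " + " ".join(str(ref) for ref in sref))
    if sv is not None:
        parts.append(f"--sv {sv}")
    if sw is not None:
        parts.append(f"--sw {sw}")
    return " ".join(parts)


final = build_parameters(
    stylize=600, chaos=45, exp=5,
    profiles=["bqmkh8s", "3tp1vwl"],
    sref=[519241046], sv=4, sw=350,
)
print(final)
```

Dropping arguments from the call reproduces the earlier steps. For example, passing only `stylize=600` gives the base string `--s 600`.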

<center>

</center>

Only one of the images in that panel came close to the original. That really didn’t surprise me. One of the things I like about the style reference parameter is the number of ways I can use it.

I can use a link to the original image as a style reference alongside the style code I used. I did this and raised the style weight to 550. Just for fun, I tried it using both versions of Midjourney.

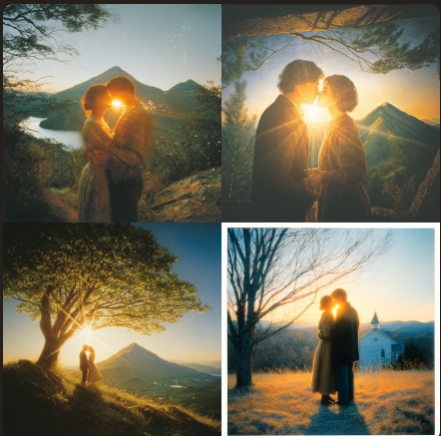

Here it is for version 6.1: **--s 600 --c 45 --exp 5 --p bqmkh8s 3tp1vwl --sref 519241046 https://s.mj.run/1Kq94HQ5FE8 --sv 4 --sw 550**

<center>

</center>

Two of the images came out very similar to the original.

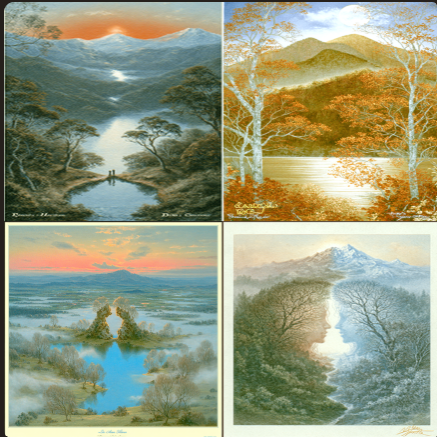

Now, let’s try it for version 7: **--s 600 --c 45 --exp 5 --p bqmkh8s 3tp1vwl --sref 519241046 https://s.mj.run/1Kq94HQ5FE8 --sv 6 --sw 550**

<center>

</center>

There are three images in this panel that are very similar to the original. If I went even higher on the style weight, it would likely get closer.

I do like the sharper, crisper lines in this version 7 set.

I think I’m going to have some fun testing out the new style reference system in version 7. I have a bunch of moodboards to explore to see what they will look like in the new version.

In case you’re curious, the Echo image I was working with was created to represent the word “kissing”. When I started, that was one of the words where I wasn’t sure which direction the image would go.
