<div class="text-justify">

<center>

[Brasilcode](https://www.brasilcode.com.br/13-motivos-para-aprender-python/)</center>

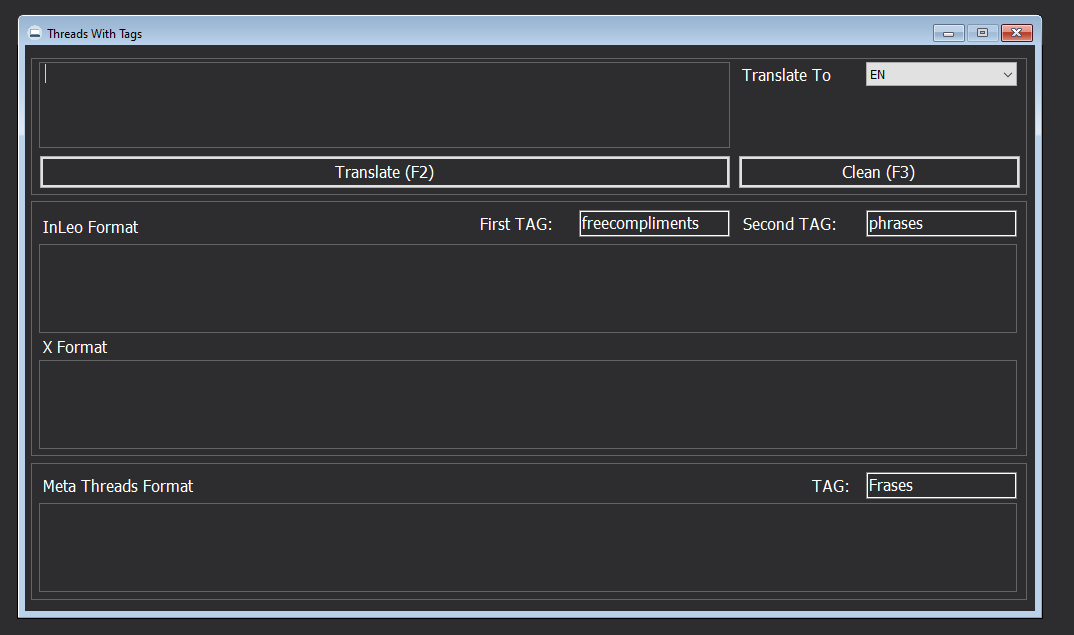

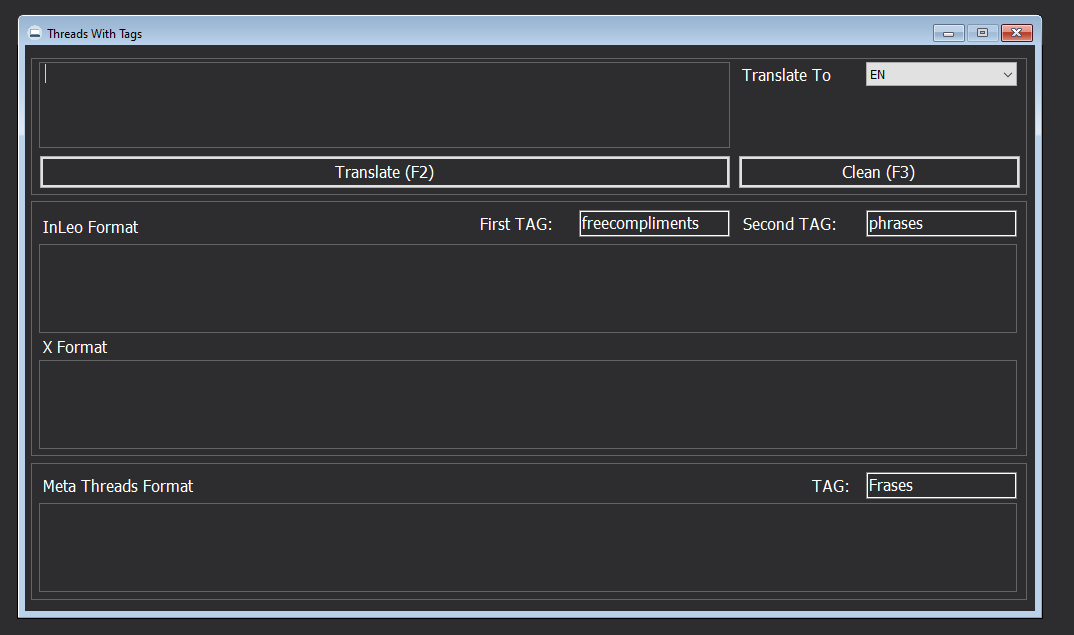

It's been a while since I wrote any code, whether to help me out or just to play around with Hive. My last project was an offline tool that formatted short texts for InLeo, Threads (from Meta), and X, each with its own way of handling tags and text formatting commands.

It also translated from Brazilian Portuguese to English, which made it easier to copy and paste wherever needed. I stopped working on it because I lost the source code along with one of my SSDs, but I could probably do better now. After all, that program, called "Tradutor Livre" (Free Translator), was almost entirely offline; it only called an API or URL (I don't remember which) to do the translation.


Taking advantage of today's holiday, I wanted to play around a bit and build something I've been wanting for a while: a program that accesses HiveSQL and Hive Engine to get my total dividends (everything that came in as HIVE) and, from that, calculates the APR of my L2 tokens.

It was a crazy ride, but I'm more relaxed now because I finally managed to do it! It took just 3 Python files, a lot of ChatGPT, and help from two great friends, @mengao and @gwajnberg, and with that I can say a first version is done.


As I always say, there is no turning back when it comes to using artificial intelligence. I probably never would have managed this just by studying and searching on Google. I didn't keep track of the hours, but I spent about 3 hours last night, from 11 pm to 2 am, messing around with no success. Today probably took another 7 or 8 hours, starting at 9 am and finishing now, around 3 pm. To tell you the truth, I didn't even have lunch, haha.

I started by doing:

```
pip install hiveengine
pip install beem
pip install nectarengine
```

And nothing: none of them would install. Each attempt failed with an OpenSSL error, even though I already had OpenSSL 3.5 installed. Believe it or not, I had to lean on ChatGPT with all my strength, and that's how I discovered that I needed OpenSSL 1.1.1 to install any of these packages.
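A quick way to see which OpenSSL the Python interpreter itself is linked against is the standard `ssl` module. This is only a hedged diagnostic sketch: the packages above may build against a different OpenSSL found elsewhere on the system, so the output is a hint rather than a diagnosis. The helper name `openssl_info` is made up for illustration.

```python
import ssl


def openssl_info() -> dict:
    """Return the OpenSSL version this Python interpreter is linked against."""
    # OPENSSL_VERSION_INFO is a 5-tuple: (major, minor, fix, patch, status).
    major, minor, fix, _patch, _status = ssl.OPENSSL_VERSION_INFO
    return {
        "text": ssl.OPENSSL_VERSION,      # human-readable version string
        "version": (major, minor, fix),   # numeric part, easy to compare
    }


if __name__ == "__main__":
    info = openssl_info()
    print(info["text"])
    print("major.minor.fix =", ".".join(str(n) for n in info["version"]))
```

If the numbers printed here do not match the OpenSSL you believe is installed, that mismatch is at least a place to start looking.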

After that, phew, I took a deep breath and ended up keeping just this command:

```
pip install hiveengine
```

From there, everything was quite simple: a main file that starts things off, calls the functions, and then prints the result in the terminal:

```
import Conexao as conexao
import CmdHiveEngine as hiveengine
from tabulate import tabulate

# Map each sending profile (perfil_envio) to the token it pays dividends for
perfil_para_token = {
    "armero": "ARMERO",
    #"bee.drone": "BEE",
    "beeswap.fees": "BXT",
    "dab-treasury": "DAB",
    "dcityfund": "SIM",
    "duo-sales": "DUO",
    "eds-pay": "EDSI",
    "lgndivs": "LGN",
    "pakx": "PAKX",
    "tokenpimp": "PIMP",
    "vetfunding": "CAV"
}

try:
    # Query fragments: HIVE received by shiftrox in the last 7 days, grouped by sender
    Campos = "UPPER([FROM]) AS perfil_envio," + "\r\n"
    Campos += "SUM(amount) AS week_income" + "\r\n"
    Tabelas = "TxTransfers tt" + "\r\n"
    Criterio = "[TO] = 'shiftrox'" + "\r\n"
    Criterio += "AND [type] NOT IN ('transfer_from_savings','transfer_to_savings')" + "\r\n"
    Criterio += "AND tt.[timestamp] >= DATEADD(day, -7, GETDATE())" + "\r\n"
    Criterio += "AND tt.amount_symbol = 'HIVE'" + "\r\n"
    Criterio += "AND tt.[FROM] <> 'shiftrox'" + "\r\n"
    Criterio += "GROUP BY [FROM]" + "\r\n"
    Ordem = "UPPER([FROM])" + "\r\n"

    rsResultado = conexao.RetReg(Campos, Tabelas, Criterio, Ordem)

    if rsResultado and isinstance(rsResultado[0], dict):
        dblMenorPreco: float = 0.0
        dblTotal: float = 0.0
        intQtdeSemanas: int = 52

        # Extract the headers (column names) from the first dictionary
        headers = list(rsResultado[0].keys())  # convert dict_keys to a list
        # Add the new columns that are filled in manually
        headers.append("HOLDING")
        headers.append("HIVE_PRICE")
        headers.append("HIVE_VALUE")
        headers.append("APR")

        for item in rsResultado:
            dblTokenBalance = 0
            dblMenorPreco = 0

            # Look up the holding and the lowest sell price (stake is not fetched yet)
            token = perfil_para_token.get(item["perfil_envio"].lower(), "nenhum")
            if token != "nenhum":
                dblTokenBalance = hiveengine.get_token_balance("shiftrox", token)
                dblMenorPreco = hiveengine.obter_menor_preco_venda(token)
            else:
                dblTokenBalance = 0

            # Compute the numeric values
            holding = float(dblTokenBalance)
            hive_price = float(dblMenorPreco)
            hive_value = holding * hive_price

            # Store the numeric values for the calculations
            item["HOLDING"] = holding
            item["HIVE_PRICE"] = hive_price
            item["HIVE_VALUE"] = hive_value
            item["APR"] = ((float(item["week_income"]) * intQtdeSemanas) / hive_value) * 100 if hive_value != 0 else 0.0

            # Now format the values for display only (e.g. tabulate)
            item["HOLDING"] = f"{holding:,.8f}"
            item["HIVE_PRICE"] = f"{hive_price:,.8f}"
            item["HIVE_VALUE"] = f"{hive_value:,.8f}"
            item["APR"] = f"{item['APR']:.2f}%"

            dblTotal += float(item["week_income"])

        # Build the total row
        total_row = {
            "perfil_envio": "Total:",
            "week_income": f"{dblTotal:.3f}",  # formatted with 3 decimal places
            "APR": ""
        }
        # Append the row to the end of the list
        rsResultado.append(total_row)

        print(tabulate(rsResultado, headers="keys", tablefmt="grid"))
    else:
        print("Nenhum resultado retornado ou formato não suportado.")
except Exception as erro:
    print("Erro Principal =>", erro)
```
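The `Conexao` module is not shown in this post, so what follows is only a guess at how a helper like `RetReg` could assemble the four fragments into one statement. The function name `montar_sql` and the SELECT / FROM / WHERE / ORDER BY layout are assumptions; the real module also has to open the HiveSQL connection and run the query, which is left out here.

```python
def montar_sql(campos: str, tabelas: str, criterio: str, ordem: str = "") -> str:
    """Join the query fragments into one SELECT statement (hypothetical helper)."""
    partes = [
        "SELECT " + campos.strip(),
        "FROM " + tabelas.strip(),
    ]
    if criterio.strip():
        # The GROUP BY clause rides along inside `criterio`, as in the main file.
        partes.append("WHERE " + criterio.strip())
    if ordem.strip():
        partes.append("ORDER BY " + ordem.strip())
    return "\n".join(partes)


if __name__ == "__main__":
    sql = montar_sql(
        "UPPER([FROM]) AS perfil_envio, SUM(amount) AS week_income",
        "TxTransfers tt",
        "[TO] = 'shiftrox' AND tt.amount_symbol = 'HIVE' GROUP BY [FROM]",
        "UPPER([FROM])",
    )
    print(sql)
```

Plain string concatenation is tolerable here only because every value is hard-coded; if the account name ever came from user input, query parameters would be the safer route.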

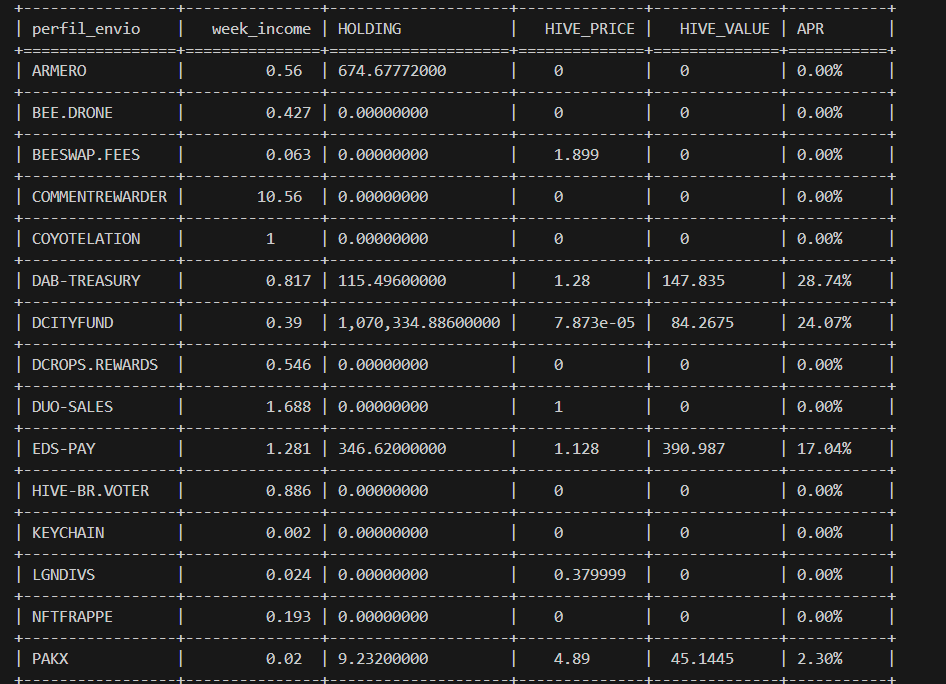

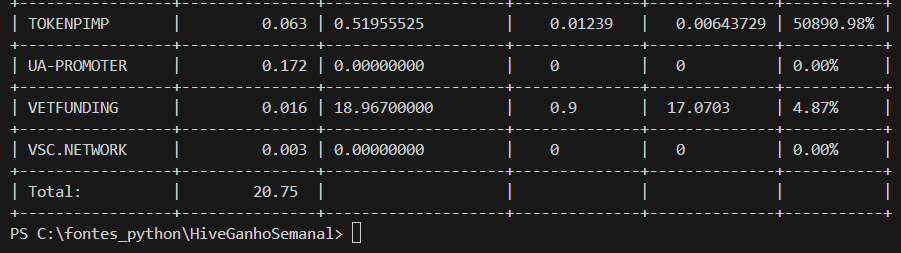

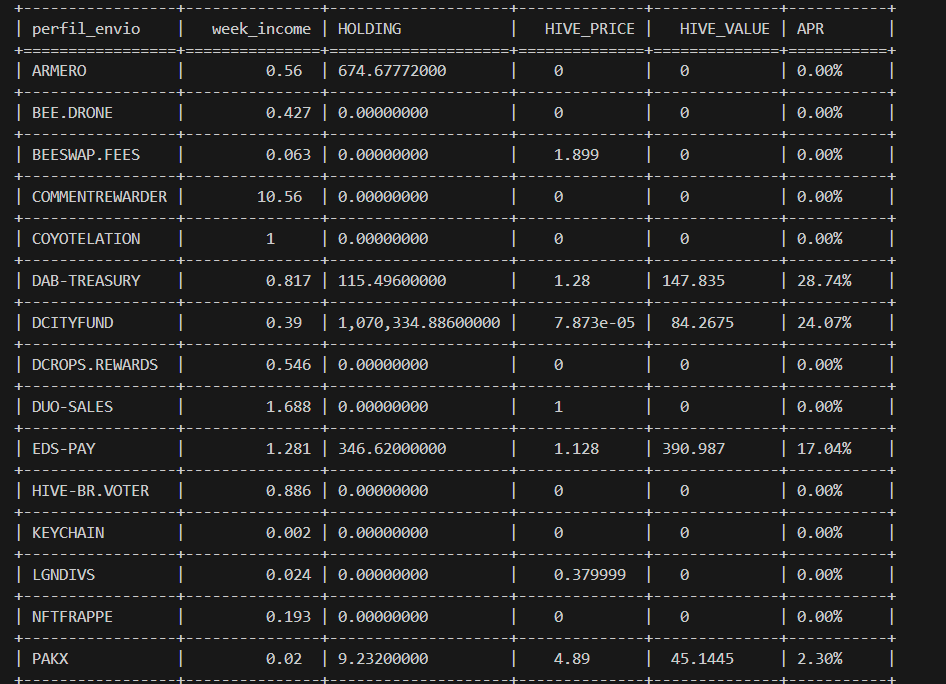

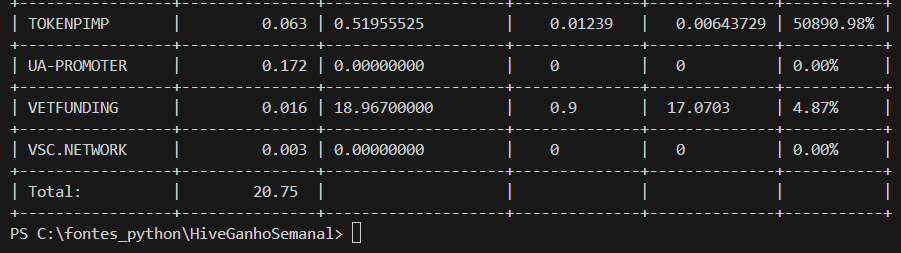

And that's how some APRs were calculated. I thought it was great to finally reach deeper layers of Hive and build a program that will help me a lot in understanding how my dividend earnings are doing. Of course, it still needs work: it only looks at liquid tokens, while some dividends are calculated only on staked tokens, so handling stake is the next step.
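The APR column boils down to one formula: weekly income times 52, divided by the HIVE value of the position. A minimal sketch of that arithmetic, extended so that staked tokens count toward the holding, could look like this. The function name `calcular_apr` and the `staked` parameter are illustrative additions, not part of the files in this post, and the numbers in the example are made up.

```python
WEEKS_PER_YEAR = 52


def calcular_apr(week_income: float, liquid: float, staked: float, price: float) -> float:
    """Annualize one week of HIVE income against the HIVE value of a token position."""
    holding = liquid + staked      # count staked tokens as part of the position
    hive_value = holding * price   # position value at the lowest sell price
    if hive_value == 0:
        return 0.0                 # no position or no price: avoid dividing by zero
    return (week_income * WEEKS_PER_YEAR) / hive_value * 100


if __name__ == "__main__":
    # Made-up example: 1 HIVE per week on 1,000 tokens (400 liquid, 600 staked) at 0.5 HIVE each.
    print(f"{calcular_apr(1.0, 400, 600, 0.5):.2f}%")
```

Keeping liquid and staked amounts as separate arguments makes it easy to compare the APR with and without stake for tokens where only one of them earns dividends.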

Some formatting also needs to improve. I want to show everything with 8 decimal places, the maximum the wallets display, so the output lines up with them as closely as possible. I also need to translate everything into English to make it more appealing, and polish a few small points.
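For the 8-decimal display, one option is to go through `decimal.Decimal` so every amount is cut to the same precision before printing. This is only a sketch: the helper name `formatar_8` is hypothetical, and truncating (`ROUND_DOWN`) instead of rounding is an assumption that should be checked against what the wallets actually show.

```python
from decimal import Decimal, ROUND_DOWN

EIGHT_PLACES = Decimal("0.00000001")


def formatar_8(valor) -> str:
    """Format a number with thousands separators and exactly 8 decimal places."""
    # str() first so a float like 0.1 becomes Decimal("0.1"), not its binary expansion.
    quantia = Decimal(str(valor)).quantize(EIGHT_PLACES, rounding=ROUND_DOWN)
    return f"{quantia:,.8f}"


if __name__ == "__main__":
    for exemplo in (1234.5, "0.123456789", 0):
        print(formatar_8(exemplo))
```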


There is also a total row: in this case, over the last 7 days I earned 20.75 HIVE just from dividends and transfers from other profiles. I'm not interested in building the same thing for HBD right now, mainly because none of my tokens pay in HBD.

One thing that has always left me in doubt is the filter date. In my mind I should always take 7 days, but I'm not sure today should count. I took a look at https://hivestats.io/@shiftrox and saw that their 7-day option filters exactly (today - 7 days), so they take, for example, everything that happened from 04/25/2025 until today, 05/01/2025.

```
Criterio += "AND tt.[timestamp] >= DATEADD(day, -7, GETDATE())" + "\r\n"
```

I don't know if this is ideal for dividends. Maybe I'll change it to (yesterday - 7 days), that is, to work only with days that are already "closed". Anyway, there's still a lot to improve.
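If the goal is to count only closed days, the window becomes: from midnight today minus 7 days up to, but not including, midnight today. Below is a small sketch of that date arithmetic, plus a matching SQL Server condition built as a string. Both helper names are hypothetical, and which clock defines "today" (the server's `GETDATE()` versus UTC block timestamps) is an assumption worth double-checking before relying on it.

```python
from datetime import datetime, timedelta, timezone


def janela_dias_fechados(agora: datetime, dias: int = 7):
    """Return (start, end) covering the last `dias` full days before today."""
    fim = agora.replace(hour=0, minute=0, second=0, microsecond=0)  # today at 00:00
    inicio = fim - timedelta(days=dias)
    return inicio, fim


def criterio_dias_fechados(dias: int = 7) -> str:
    """Same window as a T-SQL condition; CAST(... AS date) drops the time part."""
    return (
        f"AND tt.[timestamp] >= DATEADD(day, -{dias}, CAST(GETDATE() AS date))\r\n"
        "AND tt.[timestamp] < CAST(GETDATE() AS date)\r\n"
    )


if __name__ == "__main__":
    inicio, fim = janela_dias_fechados(datetime(2025, 5, 1, 15, 0, tzinfo=timezone.utc))
    print(inicio.isoformat(), "->", fim.isoformat())
    print(criterio_dias_fechados())
```

With this window, running the report at 9 am or at 11 pm on the same day gives the same total, which makes week-to-week comparisons fairer.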

Finally, this is the code that fetches how many tokens I hold and their lowest market price:

```
import requests
from hiveengine.api import Api
#from hiveengine.market import Market


def obter_menor_preco_venda(token_symbol):
    """Return the lowest sell-order price for a token, or 0.0 if there is none."""
    try:
        api = Api()
        sell_orders = api.find("market", "sellBook", query={"symbol": token_symbol})
        if not sell_orders:
            # No sell orders for this token at the moment
            return 0.0
        menor_preco = min(sell_orders, key=lambda x: float(x['price']))
        # Return a float in every branch so callers always get the same type
        return float(menor_preco['price'])
    except Exception as e:
        print(f"Ocorreu um erro: {e}")
        return 0.0


def get_token_balance(usuario: str, token: str) -> float:
    """Return the liquid balance of a Hive Engine token for an account."""
    url = "https://api.hive-engine.com/rpc/contracts"
    payload = {
        "jsonrpc": "2.0",
        "id": 1,
        "method": "find",
        "params": {
            "contract": "tokens",
            "table": "balances",
            "query": {
                "account": usuario,
                "symbol": token.upper()
            },
            "limit": 1
        }
    }
    try:
        # A timeout keeps the script from hanging if the node does not answer
        response = requests.post(url, json=payload, timeout=10)
        response.raise_for_status()
        result = response.json().get("result", [])
        if result:
            return float(result[0].get("balance", 0.0))
        else:
            return 0.0
    except requests.RequestException as e:
        print(f"Erro ao acessar a API: {e}")
        return 0.0
```

get_token_balance() ended up as a direct URL request. Later I want to see if I can do the same lookup through the hiveengine library, the way obter_menor_preco_venda() does, so that everything goes through the API.

Anyway, the first steps have been taken; now it's time to burn some neurons and do more! First you start, then you improve!
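Since obter_menor_preco_venda() already goes through `Api.find()`, the balance lookup can probably use the same call against the `tokens` contract and `balances` table that the URL version queries. Here is a sketch under that assumption. The function name `get_token_balance_api`, the optional `api` parameter, and the `ApiFalsa` stand-in (used only to try the function without network access, with a made-up balance) are my own additions.

```python
def get_token_balance_api(usuario: str, token: str, api=None) -> float:
    """Fetch a liquid Hive Engine balance through an Api-like object."""
    if api is None:
        # Imported lazily so the function can be exercised with a stand-in object.
        from hiveengine.api import Api
        api = Api()
    try:
        rows = api.find(
            "tokens",
            "balances",
            query={"account": usuario, "symbol": token.upper()},
        )
    except Exception as e:
        print(f"Error while querying Hive Engine: {e}")
        return 0.0
    if not rows:
        return 0.0
    return float(rows[0].get("balance", 0.0))


class ApiFalsa:
    """Stand-in with the same find() shape, used only to try the function offline."""

    def find(self, contract_name, table_name, query=None):
        if query == {"account": "shiftrox", "symbol": "BXT"}:
            # Made-up balance, for illustration only.
            return [{"account": "shiftrox", "symbol": "BXT", "balance": "12.5"}]
        return []


if __name__ == "__main__":
    print(get_token_balance_api("shiftrox", "bxt", api=ApiFalsa()))
```

Passing the API object in as a parameter also means one `Api()` instance can be reused for every token instead of being created on each call.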

<center>[](https://discord.com/invite/kg6ee3vtrp)</center>

___

<center><div>

<center>***Follow me on [X (Formerly Twitter)](https://twitter.com/YanPatrick_)***</center>

🔹Hive Games🔹

**🔸[Splinterlands](https://splinterlands.com?ref=shiftrox)🔸[Holozing](https://holozing.com?ref=shiftrox)🔸[Terracore](https://www.terracoregame.com/?ref=shiftrox)🔸[dCrops](https://www.dcrops.com/?ref=shiftrox)🔸[Rising Star](https://www.risingstargame.com?referrer=shiftrox)🔸**

</div></center>

</div>

| Field | Value |
|---|---|
| author | shiftrox |
| root_title | "[EN/PT-BR] Python on holiday and joy in advancing even further in programming with Hive!" |
| permlink | enpt-br-python-on-holiday-and-joy-in-advancing-even-further-in-programming-with-hive |
| category | hive-139531 |
| tags | neoxian, code, python, hivebr, pob, cent, hive-engine, hive, programming, bbh |
| created | 2025-05-01 18:51:12 |
| last_update | 2025-05-01 18:54:39 |


| ativosgarantem | 0 | 949,196,540 | 99% | ||

| bulliontools | 0 | 917,837,650 | 5.5% | ||

| clubvote | 0 | 6,000,739,060 | 2% | ||

| jkatrina | 0 | 592,060,669 | 99% | ||

| hadianoor | 0 | 10,893,226,902 | 40% | ||

| hive-193566 | 0 | 6,727,703,710 | 25% | ||

| ifhy | 0 | 369,951,143 | 4% | ||

| xlety | 0 | 1,894,147,409 | 19.8% | ||

| kaveira | 0 | 857,992,521 | 49.5% | ||

| khantaimur | 0 | 838,533,667 | 4% | ||

| spi-store | 0 | 11,266,939,200 | 20.9% | ||

| alienpunklord | 0 | 1,877,998,936 | 50% | ||

| pixbee | 0 | 3,724,995,036 | 100% | ||

| partytime.inleo | 0 | 9,005,218,136 | 20% | ||

| calebmarvel24 | 0 | 1,202,611,352 | 10% | ||

| duo-curator | 0 | 621,114,050,037 | 50% | ||

| dab-vote | 0 | 142,964,561,716 | 10% | ||

| eujotave | 0 | 536,006,552 | 49.5% | ||

| devferri | 0 | 2,974,765,300 | 49.5% | ||

| indeedly | 0 | 802,125,034 | 4% | ||

| pakx | 0 | 181,524,064,327 | 9.21% | ||

| dook4good | 0 | 4,088,559,551 | 50% | ||

| calebmarvel01 | 0 | 459,396,195 | 5% | ||

| tippythecapy | 0 | 2,705,703,925 | 100% | ||

| okarun | 0 | 529,602,323 | 1% | ||

| michael561 | 0 | 1,152,809,698 | 4.4% | ||

| gifufaithful | 0 | 32,860,365,210 | 1.67% | ||

| finpulse | 0 | 101,968,332,142 | 100% | ||

| avila.diego2 | 0 | 629,616,217 | 99% | ||

| aftabirshad | 0 | 5,020,576,653 | 100% | ||

| mcbro | 0 | 0 | 100% |

Well done 👍. Great Job.

| author | aftabirshad |

|---|---|

| permlink | svo05z |

| category | hive-139531 |

| json_metadata | {"app":"hiveblog/0.1"} |

| created | 2025-05-03 02:56:27 |

| last_update | 2025-05-03 02:56:27 |

| depth | 1 |

| children | 3 |

| last_payout | 2025-05-10 02:56:27 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 23 |

| author_reputation | 7,981,297,348,079 |

| root_title | "[EN/PT-BR] Python on holiday and joy in advancing even further in programming with Hive!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 142,475,847 |

| net_rshares | 0 |

Thanks my friend, now it's time to develop more things hehe

| author | shiftrox |

|---|---|

| permlink | re-aftabirshad-svo0t0 |

| category | hive-139531 |

| json_metadata | {"tags":["hive-139531"],"app":"peakd/2025.4.6","image":[],"users":[]} |

| created | 2025-05-03 03:10:12 |

| last_update | 2025-05-03 03:10:12 |

| depth | 2 |

| children | 2 |

| last_payout | 2025-05-10 03:10:12 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 59 |

| author_reputation | 705,778,977,632,279 |

| root_title | "[EN/PT-BR] Python on holiday and joy in advancing even further in programming with Hive!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 142,475,967 |

| net_rshares | 0 |

Okay. Keep working like this; success is waiting for you and will touch your feet with excellent results. **Well done**

| author | aftabirshad |

|---|---|

| permlink | svo0zl |

| category | hive-139531 |

| json_metadata | {"app":"hiveblog/0.1"} |

| created | 2025-05-03 03:14:12 |

| last_update | 2025-05-03 03:14:12 |

| depth | 3 |

| children | 1 |

| last_payout | 2025-05-10 03:14:12 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 122 |

| author_reputation | 7,981,297,348,079 |

| root_title | "[EN/PT-BR] Python on holiday and joy in advancing even further in programming with Hive!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 142,476,025 |

| net_rshares | 0 |

<center>This post was curated by @hive-br team!</center>

<center>Delegate your HP to the [hive-br.voter](https://ecency.com/@hive-br.voter/wallet) account and earn Hive daily!</center>

| | | | | |
|----|----|----|----|----|
|<center>[50 HP](https://hivesigner.com/sign/delegateVestingShares?&delegatee=hive-br.voter&vesting_shares=50%20HP)</center>|<center>[100 HP](https://hivesigner.com/sign/delegateVestingShares?&delegatee=hive-br.voter&vesting_shares=100%20HP)</center>|<center>[200 HP](https://hivesigner.com/sign/delegateVestingShares?&delegatee=hive-br.voter&vesting_shares=200%20HP)</center>|<center>[500 HP](https://hivesigner.com/sign/delegateVestingShares?&delegatee=hive-br.voter&vesting_shares=500%20HP)</center>|<center>[1000 HP](https://hivesigner.com/sign/delegateVestingShares?&delegatee=hive-br.voter&vesting_shares=1000%20HP)</center>|

<center>🔹 Follow our [Curation Trail](https://hive.vote/dash.php?i=1&trail=hive-br.voter) and don't miss voting! 🔹</center>

| author | hive-br.voter | ||||||

|---|---|---|---|---|---|---|---|

| permlink | hivebr-8o62eh5jl48 | ||||||

| category | hive-139531 | ||||||

| json_metadata | {} | ||||||

| created | 2025-05-01 18:51:36 | ||||||

| last_update | 2025-05-01 18:51:36 | ||||||

| depth | 1 | ||||||

| children | 0 | ||||||

| last_payout | 2025-05-08 18:51:36 | ||||||

| cashout_time | 1969-12-31 23:59:59 | ||||||

| total_payout_value | 0.000 HBD | ||||||

| curator_payout_value | 0.000 HBD | ||||||

| pending_payout_value | 0.000 HBD | ||||||

| promoted | 0.000 HBD | ||||||

| body_length | 1,126 | ||||||

| author_reputation | 618,842,255,502 | ||||||

| root_title | "[EN/PT-BR] Python on holiday and joy in advancing even further in programming with Hive!" | ||||||

| beneficiaries |

| ||||||

| max_accepted_payout | 10,000.000 HBD | ||||||

| percent_hbd | 10,000 | ||||||

| post_id | 142,443,986 | ||||||

| net_rshares | 0 |

Congratulations @shiftrox! You received a personal badge! <table><tr><td>https://images.hive.blog/70x70/https://hivebuzz.me/badges/pud.s3.png?202505020006</td><td>You powered-up at least 100 HP on Hive Power Up Day! This entitles you to a level 3 badge<br>Participate in the next Power Up Day and try to power-up more HIVE to get a bigger Power-Bee.<br>May the Hive Power be with you!</td></tr></table> <sub>_You can view your badges on [your board](https://hivebuzz.me/@shiftrox) and compare yourself to others in the [Ranking](https://hivebuzz.me/ranking)_</sub> **Check out our last posts:** <table><tr><td><a href="/hive-122221/@hivebuzz/pum-202504-result"><img src="https://images.hive.blog/64x128/https://i.imgur.com/mzwqdSL.png"></a></td><td><a href="/hive-122221/@hivebuzz/pum-202504-result">Hive Power Up Month Challenge - April 2025 Winners List</a></td></tr><tr><td><a href="/hive-122221/@hivebuzz/pum-202505"><img src="https://images.hive.blog/64x128/https://i.imgur.com/M9RD8KS.png"></a></td><td><a href="/hive-122221/@hivebuzz/pum-202505">Be ready for the May edition of the Hive Power Up Month!</a></td></tr><tr><td><a href="/hive-122221/@hivebuzz/pud-202505"><img src="https://images.hive.blog/64x128/https://i.imgur.com/805FIIt.jpg"></a></td><td><a href="/hive-122221/@hivebuzz/pud-202505">Hive Power Up Day - May 1st 2025</a></td></tr></table>

| author | hivebuzz |

|---|---|

| permlink | notify-1746145507 |

| category | hive-139531 |

| json_metadata | {"image":["https://hivebuzz.me/notify.t6.png"]} |

| created | 2025-05-02 00:25:06 |

| last_update | 2025-05-02 00:25:06 |

| depth | 1 |

| children | 0 |

| last_payout | 2025-05-09 00:25:06 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 1,365 |

| author_reputation | 369,402,100,587,838 |

| root_title | "[EN/PT-BR] Python on holiday and joy in advancing even further in programming with Hive!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 142,450,014 |

| net_rshares | 0 |

Congratulations @shiftrox! You received a personal badge! <table><tr><td>https://images.hive.blog/70x70/https://hivebuzz.me/badges/puh.png?202505020009</td><td>You made another user happy by powering him up some HIVE on Hive Power Up Day and got awarded this Power Up Helper badge.</td></tr></table> <sub>_You can view your badges on [your board](https://hivebuzz.me/@shiftrox) and compare yourself to others in the [Ranking](https://hivebuzz.me/ranking)_</sub> **Check out our last posts:** <table><tr><td><a href="/hive-122221/@hivebuzz/pum-202504-result"><img src="https://images.hive.blog/64x128/https://i.imgur.com/mzwqdSL.png"></a></td><td><a href="/hive-122221/@hivebuzz/pum-202504-result">Hive Power Up Month Challenge - April 2025 Winners List</a></td></tr><tr><td><a href="/hive-122221/@hivebuzz/pum-202505"><img src="https://images.hive.blog/64x128/https://i.imgur.com/M9RD8KS.png"></a></td><td><a href="/hive-122221/@hivebuzz/pum-202505">Be ready for the May edition of the Hive Power Up Month!</a></td></tr><tr><td><a href="/hive-122221/@hivebuzz/pud-202505"><img src="https://images.hive.blog/64x128/https://i.imgur.com/805FIIt.jpg"></a></td><td><a href="/hive-122221/@hivebuzz/pud-202505">Hive Power Up Day - May 1st 2025</a></td></tr></table>

| author | hivebuzz |

|---|---|

| permlink | notify-1746145677 |

| category | hive-139531 |

| json_metadata | {"image":["https://hivebuzz.me/notify.t6.png"]} |

| created | 2025-05-02 00:27:57 |

| last_update | 2025-05-02 00:27:57 |

| depth | 1 |

| children | 2 |

| last_payout | 2025-05-09 00:27:57 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 1,262 |

| author_reputation | 369,402,100,587,838 |

| root_title | "[EN/PT-BR] Python on holiday and joy in advancing even further in programming with Hive!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 142,450,090 |

| net_rshares | 0 |

Thanks!!!

| author | shiftrox |

|---|---|

| permlink | re-hivebuzz-svmz3b |

| category | hive-139531 |

| json_metadata | {"tags":["hive-139531"],"app":"peakd/2025.4.6","image":[],"users":[]} |

| created | 2025-05-02 13:35:36 |

| last_update | 2025-05-02 13:35:36 |

| depth | 2 |

| children | 1 |

| last_payout | 2025-05-09 13:35:36 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 10 |

| author_reputation | 705,778,977,632,279 |

| root_title | "[EN/PT-BR] Python on holiday and joy in advancing even further in programming with Hive!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 142,461,005 |

| net_rshares | 0 |

Congratulations @shiftrox! You're doing your part to make Hive stronger. Keep up the great work! 💪<div><a href="https://engage.hivechain.app"></a></div>

| author | hivebuzz |

|---|---|

| permlink | re-1746199681000 |

| category | hive-139531 |

| json_metadata | {"app":"engage"} |

| created | 2025-05-02 15:28:00 |

| last_update | 2025-05-02 15:28:00 |

| depth | 3 |

| children | 0 |

| last_payout | 2025-05-09 15:28:00 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 188 |

| author_reputation | 369,402,100,587,838 |

| root_title | "[EN/PT-BR] Python on holiday and joy in advancing even further in programming with Hive!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 142,463,585 |

| net_rshares | 0 |

<center>**Curious about HivePakistan? Join us on [Discord](https://discord.gg/3FzxCqFYyG)!**</center> <center>Delegate your HP to the [Hivepakistan](https://peakd.com/@hivepakistan/wallet) account and earn 90% of curation rewards in liquid hive!<br><br><center><table><tr><td><center>[50 HP](https://hivesigner.com/sign/delegateVestingShares?&delegatee=hivepakistan&vesting_shares=50%20HP)</center></td><td><center>[100 HP](https://hivesigner.com/sign/delegateVestingShares?&delegatee=hivepakistan&vesting_shares=100%20HP)</center></td><td><center>[200 HP](https://hivesigner.com/sign/delegateVestingShares?&delegatee=hivepakistan&vesting_shares=200%20HP)</center></td><td><center>[500 HP (Supporter Badge)](https://hivesigner.com/sign/delegateVestingShares?&delegatee=hivepakistan&vesting_shares=500%20HP)</center></td><td><center>[1000 HP](https://hivesigner.com/sign/delegateVestingShares?&delegatee=hivepakistan&vesting_shares=1000%20HP)</center></td></tr></table></center> <center>Follow our [Curation Trail](https://hive.vote/dash.php?i=1&trail=hivepakistan) and don't miss voting!</center> ___ <center>**Additional Perks: Delegate To @ [pakx](https://peakd.com/@pakx) For Earning $PAKX Investment Token**</center> <center><img src="https://files.peakd.com/file/peakd-hive/dlmmqb/23tkn1F4Yd2BhWigkZ46jQdMmkDRKagirLr5Gh4iMq9TNBiS7anhAE71y9JqRuy1j77qS.png"></center><hr><center><b>Curated by <a href="/@gwajnberg">gwajnberg</a></b></center>

| author | hivepakistan |

|---|---|

| permlink | re-shiftrox-1746125867 |

| category | hive-139531 |

| json_metadata | "{"tags": ["hive-139531"], "app": "HiveDiscoMod"}" |

| created | 2025-05-01 18:57:45 |

| last_update | 2025-05-01 18:57:45 |

| depth | 1 |

| children | 0 |

| last_payout | 2025-05-08 18:57:45 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 1,446 |

| author_reputation | 123,729,133,383,197 |

| root_title | "[EN/PT-BR] Python on holiday and joy in advancing even further in programming with Hive!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 142,444,113 |

| net_rshares | 0 |

Thank you for promoting the Hive-BR community in your posts. Let's keep strengthening Hive. <div class='text-right'><sup>Half of the rewards from this reply will go to the post's author.</sup></div><hr/><h4><center><a href='https://vote.hive.uno/@perfilbrasil'>Vote for @perfilbrasil as a Hive Witness.</a></center></h4>

| author | perfilbrasil | ||||||

|---|---|---|---|---|---|---|---|

| permlink | re-enpt-br-python-on-holiday-and-joy-in-advancing-even-further-in-programming-with-hive | ||||||

| category | hive-139531 | ||||||

| json_metadata | "{"tags": ["pt"], "description": "Obrigado por postar"}" | ||||||

| created | 2025-05-01 18:55:06 | ||||||

| last_update | 2025-05-01 18:55:06 | ||||||

| depth | 1 | ||||||

| children | 0 | ||||||

| last_payout | 2025-05-08 18:55:06 | ||||||

| cashout_time | 1969-12-31 23:59:59 | ||||||

| total_payout_value | 0.000 HBD | ||||||

| curator_payout_value | 0.000 HBD | ||||||

| pending_payout_value | 0.000 HBD | ||||||

| promoted | 0.000 HBD | ||||||

| body_length | 334 | ||||||

| author_reputation | 12,952,025,475,701 | ||||||

| root_title | "[EN/PT-BR] Python on holiday and joy in advancing even further in programming with Hive!" | ||||||

| beneficiaries |

| ||||||

| max_accepted_payout | 10.000 HBD | ||||||

| percent_hbd | 0 | ||||||

| post_id | 142,444,044 | ||||||

| net_rshares | 0 |

Bzzzrrr, how great that you're back in the world of programming! I'm glad you managed to overcome the problems and now have a working version of Free Translator. Congratulations on your effort and patience! #hivebr <sub>*AI generated content* Commands: !pixbee stop | !pixbee start | !pixbee price</sub>

| author | pixbee | ||||||

|---|---|---|---|---|---|---|---|

| permlink | re-shiftrox-enpt-br-python-on-holiday-and-joy-in-advancing-even-further-in-programming-with-hive-20250501t190421626z | ||||||

| category | hive-139531 | ||||||

| json_metadata | {"app":"PixBee/1.3.7","tags":""} | ||||||

| created | 2025-05-01 19:04:24 | ||||||

| last_update | 2025-05-01 19:04:24 | ||||||

| depth | 1 | ||||||

| children | 0 | ||||||

| last_payout | 2025-05-08 19:04:24 | ||||||

| cashout_time | 1969-12-31 23:59:59 | ||||||

| total_payout_value | 0.000 HBD | ||||||

| curator_payout_value | 0.000 HBD | ||||||

| pending_payout_value | 0.000 HBD | ||||||

| promoted | 0.000 HBD | ||||||

| body_length | 313 | ||||||

| author_reputation | 4,469,331,421,926 | ||||||

| root_title | "[EN/PT-BR] Python on holiday and joy in advancing even further in programming with Hive!" | ||||||

| beneficiaries |

| ||||||

| max_accepted_payout | 1,000,000.000 HBD | ||||||

| percent_hbd | 10,000 | ||||||

| post_id | 142,444,245 | ||||||

| net_rshares | 0 |

!hbits !hiqvote

| author | shiftrox |

|---|---|

| permlink | re-shiftrox-svlja1 |

| category | hive-139531 |

| json_metadata | {"tags":["hive-139531"],"app":"peakd/2025.4.6","image":[],"users":[]} |

| created | 2025-05-01 18:56:27 |

| last_update | 2025-05-01 18:56:27 |

| depth | 1 |

| children | 0 |

| last_payout | 2025-05-08 18:56:27 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 15 |

| author_reputation | 705,778,977,632,279 |

| root_title | "[EN/PT-BR] Python on holiday and joy in advancing even further in programming with Hive!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 142,444,075 |

| net_rshares | 0 |

hiveblocks
