Given a data frame with certain rows having missing data in certain columns, though they belong to the same with similar A values, I want to prioritize rows with full records when there are multiple occurrences with the same value in column A to retain them.

```

# Create a sample dataframe

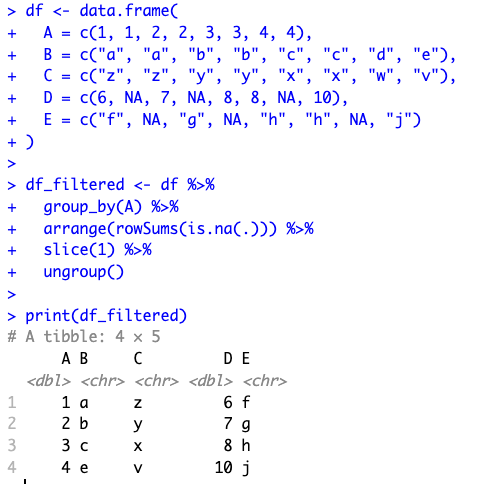

df <- data.frame(

A = c(1, 1, 2, 2, 3, 3, 4, 4),

B = c("a", "a", "b", "b", "c", "c", "d", "e"),

C = c("z", "z", "y", "y", "x", "x", "w", "v"),

D = c(6, NA, 7, NA, 8, 8, NA, 10),

E = c("f", NA, "g", NA, "h", "h", NA, "j")

)

#filter it this way

df_filtered <- df %>%

group_by(A) %>%

arrange(rowSums(is.na(.))) %>%

slice(1) %>%

ungroup()

```

The filtering process is as follows:

*Group by column A.*

*For each group:

a. First, arrange by the number of NA values in descending order (so rows with fewer NA values come first). **arrange(rowSums(is.na(.)))**

b. Then, slice to pick the first row.*

*Finally, ungroup.*

| author | snippets |

|---|---|

| permlink | r-to-prioritize-rows-with-full-records-when-there-are-multiple-occurrences-with-the-same-value-in-column-a-to-retain-them |

| category | hive-138200 |

| json_metadata | {"app":"peakd/2023.9.2","format":"markdown","tags":["coding","programming","development","proofofbrain","stemgeeks","datascience","rstats","stem-social"],"users":[],"image":["https://files.peakd.com/file/peakd-hive/snippets/AKNMuVqPrNWuLjdyxwLdzm99obv1dcnZWufeJdBoWwecd9UBvGxERepophy4Epu.png","https://files.peakd.com/file/peakd-hive/snippets/23tHbkj8J5pGkWZre8gBhk721vrFwYpaKtznDJaT6xuXnBqxRa7zpvimvqdh8LGnZ2ZrJ.png"]} |

| created | 2023-10-10 07:46:51 |

| last_update | 2023-10-11 11:54:18 |

| depth | 0 |

| children | 1 |

| last_payout | 2023-10-17 07:46:51 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.054 HBD |

| curator_payout_value | 0.054 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 1,236 |

| author_reputation | 801,725,525,485 |

| root_title | "R: To prioritize rows with full records when there are multiple occurrences with the same value in column A to retain them " |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 127,860,651 |

| net_rshares | 272,406,186,927 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| bluesniper | 0 | 3,115,443,772 | 100% | ||

| holovision.stem | 0 | 2,164,844,957 | 100% | ||

| cryptothesis | 0 | 267,125,898,198 | 100% |

Congratulations @snippets! You have completed the following achievement on the Hive blockchain And have been rewarded with New badge(s) <table><tr><td><img src="https://images.hive.blog/60x70/http://hivebuzz.me/@snippets/upvoted.png?202310110607"></td><td>You received more than 10 upvotes.<br>Your next target is to reach 50 upvotes.</td></tr> </table> <sub>_You can view your badges on [your board](https://hivebuzz.me/@snippets) and compare yourself to others in the [Ranking](https://hivebuzz.me/ranking)_</sub> <sub>_If you no longer want to receive notifications, reply to this comment with the word_ `STOP`</sub>

| author | hivebuzz |

|---|---|

| permlink | notify-snippets-20231011t061754 |

| category | hive-138200 |

| json_metadata | {"image":["http://hivebuzz.me/notify.t6.png"]} |

| created | 2023-10-11 06:17:54 |

| last_update | 2023-10-11 06:17:54 |

| depth | 1 |

| children | 0 |

| last_payout | 2023-10-18 06:17:54 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 623 |

| author_reputation | 369,399,744,016,272 |

| root_title | "R: To prioritize rows with full records when there are multiple occurrences with the same value in column A to retain them " |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 127,889,208 |

| net_rshares | 0 |

hiveblocks

hiveblocks