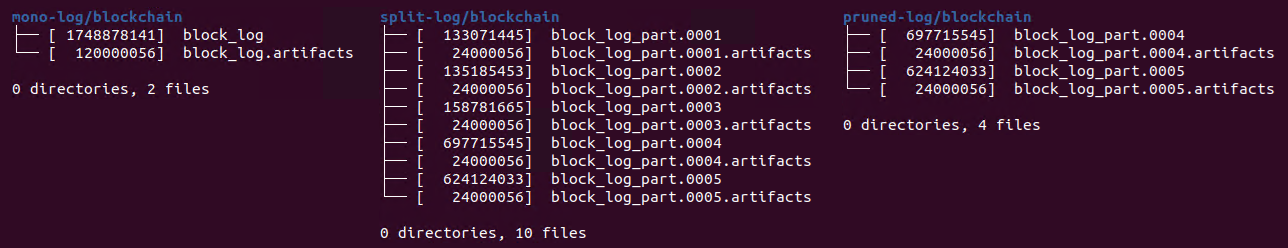

## Dude, where did my block go?

It's official now: you can configure your hived node to get rid of some of the 490+ gigabytes of compressed block log burden. You can even shed all of it, but do you really want to, and what if you change your mind?

### The time of no choice is over

Since the beginning of the Hive blockchain (and its predecessor) there has been one huge file named `block_log`, mandatory for all nodes. That single file is over 490 gigabytes now and needs that much disk space on a single filesystem.

The block log revolution that comes into force with the `1.27.7rc0` tag brings the following improvements:

- Multiple block log files of one million blocks each can be used instead of the legacy single monolithic file.
- You can keep all of the files or only a number of the most recent ones.
- A complete wipeout of the block log is possible too, leaving you with only the last block, kept in memory.

### The pros and cons

Let's examine the new modes in detail:

- Split mode - keeps every full block since genesis, hence provides the full functionality of e.g. the block API and **allows blockchain replay**. At the same time, its 1M-block part files may be physically distributed across different filesystems using symlinks, which lets you e.g. keep only the latest part files on fast storage. Good for an API node.
- Pruned mode - a variation of split mode which keeps only the several latest part files of the block log. **Replay is no longer guaranteed**. Provides only partial functionality of the block API and others: it handles requests for not-yet-pruned blocks, e.g. serves the latest several months of blocks through `block_api`. Good for a transaction broadcaster.
- Memory-only mode - the ultimate pruning: no block log files at all, only the single latest irreversible block held in memory. **Unable to replay**, obviously. Unable to provide past blocks through the block API and similar.
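To make the difference between the modes above more concrete, here is a minimal Python sketch of which blocks a node can still serve from its part files. It assumes part files are numbered from 1 and each covers exactly one million blocks (consistent with the file-naming tip later in this post). The function names and the `oldest_part_kept` parameter are illustrative only and are not part of hived.

```python
BLOCKS_PER_PART = 1_000_000  # each part file holds up to one million blocks


def part_index(block_num: int) -> int:
    """Return the 1-based index of the part file that holds block_num."""
    if block_num < 1:
        raise ValueError("block numbers start at 1")
    return (block_num - 1) // BLOCKS_PER_PART + 1


def can_serve_from_disk(block_num: int, head_block: int, oldest_part_kept: int) -> bool:
    """True if block_num exists already and its part file is still kept.

    oldest_part_kept is hypothetical bookkeeping for this sketch:
    1 means nothing has been pruned (split mode), a larger value means
    the older part files have been removed (pruned mode).
    """
    if block_num > head_block:
        return False
    return part_index(block_num) >= oldest_part_kept
```

With `oldest_part_kept=1` every existing block is reported as servable, which corresponds to split mode. With a larger value, old blocks drop out, which is the partial `block_api` coverage described for pruned mode.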
### The summary of block log modes

| mode name | blocks kept | replayable | value of the `block-log-split` option in config.ini |
| --- | --- | --- | --- |
| legacy | all | yes | -1 |
| split | all | yes | 9999 (default value now) |
| pruned | last n million | sometimes | n > 0 |
| no-file (memory-only) | last 1 | no | 0 |

Wait a minute, you may say, the split mode number (9999) meets the condition of the pruned one (> 0), there must be a mistake here. Let me explain in detail then: a positive value of the `block-log-split` option defines how many full millions of the last irreversible blocks are to be kept in block log files. It means that when you set it to e.g. 90, all blocks will be kept for the time being, because Hive has a little over 89 million blocks now. Thus, for the time being, the block log is not _effectively pruned_. After a while, however, when the threshold of 90 million blocks is crossed, the file containing the oldest (first) million blocks will be pruned (deleted), and from that moment the block log will be _effectively pruned_. As you can see, the boundary between split and pruned modes is blurred, but setting the option to the biggest possible number (9999) means that your block log won't be pruned for roughly the next 940 years (at one block every 3 seconds).

Now we're getting to the question of why replay is available only _sometimes_ in pruned mode. Full replay (from block #1) requires **all** blocks to be present in the block log, therefore it can be performed as long as the block log is **not** _effectively pruned_, which depends on the combination of the `block-log-split` value in the configuration and the current head block of the blockchain. After the oldest part file, containing the initial 1 million blocks, is removed, the block log is _effectively pruned_ and full replay is no longer possible.

<center>Block log files of nodes configured with different values of the block-log-split option. Note the file size differences.</center>

### Tips & tricks

- There are two ways to obtain split block log files from the legacy monolithic one: a) using `block_log_util`'s new `--split` option, or b) running hived configured for a split block log with the legacy monolithic one provided in its `blockchain` directory, which triggers the built-in auto-split mechanism. The former is recommended, as it lets you write the 490+ GB of split files to an output directory other than the source one (possibly on a different disk).
- All files of a split/pruned block log, except the head one (the latest one, with the highest number in its filename), can be made read-only, as they won't be modified anymore. The head file needs to be writable, as it's where new blocks are appended.
- A split block log lets you scatter its part files over several disks and symlink them all in hived's `blockchain` directory. Not only can smaller disk volumes be used, you can even consider placing older parts (i.e. the ones rarely used by hived) on slower drives.
- The names of split/pruned block log files follow the pattern `block_log_part.????`, where `????` stands for consecutive numbers beginning with `0001`, followed by `0002`, etc. Since each file contains up to a million blocks, `block_log_part.0001` contains blocks numbered `1` to `1 000 000`, while `block_log_part.0002` contains blocks numbered `1 000 001` to `2 000 000`, and so on. Hived recognizes the block log files by their names, so don't rename them or hived will lose track of them.

### Links and resources

- Source code version containing the improvements - https://gitlab.syncad.com/hive/hive/-/tags/1.27.7rc0

### Your feedback is invaluable and always welcome.
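As a footnote to the `block-log-split` explanation and the file-naming tip above, the arithmetic can be sketched in a few lines of Python. This is only an illustration of the rules as described in this post, not code taken from hived: the function names are made up, the pruning check simply restates "pruning starts once the head block crosses n million", and the time estimate assumes one block every 3 seconds with no missed blocks.

```python
BLOCKS_PER_PART = 1_000_000      # each part file holds up to one million blocks
BLOCK_INTERVAL_SECONDS = 3       # assumed: one block every 3 seconds, none missed
SECONDS_PER_YEAR = 365.25 * 24 * 60 * 60


def part_file_name(block_num: int) -> str:
    """Name of the part file holding block_num, e.g. block_log_part.0001."""
    if block_num < 1:
        raise ValueError("block numbers start at 1")
    index = (block_num - 1) // BLOCKS_PER_PART + 1
    return f"block_log_part.{index:04d}"


def is_effectively_pruned(block_log_split: int, head_block: int) -> bool:
    """Apply the rules from the summary table to a block-log-split value."""
    if block_log_split < -1:
        raise ValueError("block-log-split must be -1, 0 or positive")
    if block_log_split == -1:
        return False             # legacy monolithic file keeps everything
    if block_log_split == 0:
        return True              # no files at all, only the last block in memory
    # Positive n: nothing is removed until the head block crosses n million.
    return head_block > block_log_split * BLOCKS_PER_PART


def full_replay_possible(block_log_split: int, head_block: int) -> bool:
    """Full replay from block #1 needs a block log that is not effectively pruned."""
    return not is_effectively_pruned(block_log_split, head_block)


def years_until_pruning_starts(block_log_split: int, head_block: int) -> float:
    """Rough time until a positive block-log-split value starts removing files."""
    if block_log_split <= 0:
        raise ValueError("only meaningful for positive block-log-split values")
    remaining = max(0, block_log_split * BLOCKS_PER_PART - head_block)
    return remaining * BLOCK_INTERVAL_SECONDS / SECONDS_PER_YEAR
```

For example, `full_replay_possible(90, 89_500_000)` holds while the chain is below 90 million blocks and stops holding once that threshold is crossed, which is the "sometimes" in the summary table.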

| author | thebeedevs |
|---|---|
| permlink | core-services-the-block-log-revolution-is-here |
| category | hive-139531 |
| json_metadata | "{"format":"markdown+html","summary":"Description of revolutionary improvements to block log configuration of hive applications. Explanation of new config option block-log-split.","app":"@hiveio/wax/1.27.6-rc4-240917114249","tags":["hive","dev","hived","block-log","pruning"],"image":["https://images.hive.blog/DQmVMQw23UEz8FhnzRQ2QKhCvA854VcviLTm6pDGSXYdocE/ComparisonOfBlockLogDirectoryContents.png"],"author":"zgredek"}" |
| created | 2024-11-08 13:35:30 |
| last_update | 2024-11-08 13:35:30 |
| depth | 0 |
| children | 8 |
| last_payout | 2024-11-15 13:35:30 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 45.198 HBD |
| curator_payout_value | 90.339 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 5,603 |
| author_reputation | 214,413,890,391,020 |
| root_title | "Core services - the block log revolution is here!" |
| beneficiaries | |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 138,341,018 |
| net_rshares | 547,564,382,447,271 |
| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| blocktrades | 0 | 184,426,489,241,149 | 100% | ||

| xeldal | 0 | 19,742,074,410,385 | 50% | ||

| adol | 0 | 4,124,547,693,739 | 50% | ||

| enki | 0 | 10,575,003,767,478 | 50% | ||

| abit | 0 | 21,655,117,449,461 | 100% | ||

| adm | 0 | 23,153,672,200,205 | 100% | ||

| flemingfarm | 0 | 41,412,004,068 | 6.75% | ||

| acidyo | 0 | 13,487,628,778,301 | 100% | ||

| leprechaun | 0 | 5,161,146,260 | 26% | ||

| churdtzu | 0 | 23,703,324,389 | 33% | ||

| paradise-paradox | 0 | 1,313,077,373 | 100% | ||

| gtg | 0 | 52,196,838,863,824 | 100% | ||

| good-karma | 0 | 11,995,783,767 | 1% | ||

| roelandp | 0 | 517,995,008,658 | 25% | ||

| neopatriarch | 0 | 1,957,944,120 | 50% | ||

| bryanj4 | 0 | 595,277,988 | 25% | ||

| ausbitbank | 0 | 549,548,480,536 | 10.4% | ||

| arcange | 0 | 4,181,417,578,174 | 20% | ||

| bowess | 0 | 1,946,484,400,018 | 100% | ||

| kaykunoichi | 0 | 1,029,884,527 | 50% | ||

| laoyao | 0 | 59,937,159,245 | 100% | ||

| midnightoil | 0 | 211,899,962,960 | 100% | ||

| xiaohui | 0 | 18,623,327,776 | 100% | ||

| velourex | 0 | 424,683,140 | 100% | ||

| oflyhigh | 0 | 4,033,838,036,865 | 100% | ||

| vandres | 0 | 25,572,849,705 | 100% | ||

| borran | 0 | 1,183,959,078,282 | 100% | ||

| treaphort | 0 | 698,432,618 | 15% | ||

| rmach | 0 | 718,965,501 | 4.5% | ||

| helene | 0 | 1,347,251,347,740 | 100% | ||

| elamental | 0 | 1,277,209,643 | 13% | ||

| stevescoins | 0 | 1,300,390,945 | 13% | ||

| catherinebleish | 0 | 11,056,454,576 | 50% | ||

| gduran | 0 | 26,078,242,621 | 100% | ||

| brightstar | 0 | 5,401,677,488 | 15% | ||

| penguinpablo | 0 | 229,565,711,024 | 14% | ||

| wakeupnd | 0 | 129,221,266,236 | 50% | ||

| tftproject | 0 | 662,944,841 | 3.9% | ||

| jimbobbill | 0 | 1,763,753,989 | 15% | ||

| doitvoluntarily | 0 | 9,940,984,697 | 100% | ||

| seckorama | 0 | 312,736,503,915 | 71% | ||

| discernente | 0 | 13,005,927,384 | 100% | ||

| funnyman | 0 | 1,562,364,351 | 5.6% | ||

| ssekulji | 0 | 139,923,104,186 | 100% | ||

| ura-soul | 0 | 46,094,937,620 | 26% | ||

| moretea | 0 | 3,798,281,831 | 10% | ||

| techslut | 0 | 330,909,424,900 | 50% | ||

| makinstuff | 0 | 67,092,966,919 | 50% | ||

| esteemapp | 0 | 2,644,907,842 | 1% | ||

| justinw | 0 | 175,752,053,255 | 33% | ||

| humanearl | 0 | 2,329,987,266 | 80% | ||

| v4vapid | 0 | 461,261,343,586 | 2.86% | ||

| davidgermano | 0 | 0 | 100% | ||

| shermanedwards | 0 | 719,518,539 | 50% | ||

| thereikiforest | 0 | 1,225,558,315 | 10% | ||

| evildeathcore | 0 | 6,819,912,173 | 100% | ||

| fronttowardenemy | 0 | 139,043,012,132 | 50% | ||

| trafalgar | 0 | 45,412,520,695,687 | 100% | ||

| detlev | 0 | 96,258,217,245 | 4.5% | ||

| haileyscomet | 0 | 755,781,149 | 20% | ||

| raindrop | 0 | 702,822,013,527 | 100% | ||

| passion-fruit | 0 | 9,935,509,128 | 92% | ||

| fortune-master | 0 | 9,817,233,540 | 92% | ||

| frankydoodle | 0 | 894,885,736 | 6.5% | ||

| dreamon | 0 | 502,165,840 | 11.1% | ||

| kingkinslow | 0 | 819,538,230 | 100% | ||

| whatamidoing | 0 | 1,268,859,745 | 4% | ||

| trayan | 0 | 27,808,424,175 | 100% | ||

| ribalinux | 0 | 91,093,823,747 | 100% | ||

| rt395 | 0 | 7,767,545,031 | 5% | ||

| kennyroy | 0 | 461,522,731 | 100% | ||

| uruiamme | 0 | 1,279,003,528 | 11% | ||

| newsflash | 0 | 49,260,726,215 | 0.71% | ||

| exec | 0 | 1,745,644,321,514 | 100% | ||

| eval | 0 | 3,555,754,328,831 | 100% | ||

| alphacore | 0 | 7,279,440,707 | 7.12% | ||

| joeyarnoldvn | 0 | 459,870,949 | 1.47% | ||

| m31 | 0 | 876,775,647,308 | 100% | ||

| st3llar | 0 | 5,798,929,875 | 25% | ||

| bluemist | 0 | 23,200,367,440 | 9% | ||

| unyimeetuk | 0 | 1,189,621,029 | 50% | ||

| lenasveganliving | 0 | 835,335,519 | 4.5% | ||

| felt.buzz | 0 | 39,823,597,671 | 6.5% | ||

| heart-to-heart | 0 | 12,643,331,294 | 20% | ||

| amymya | 0 | 619,415,101 | 10% | ||

| appreciator | 0 | 34,857,339,625,522 | 9% | ||

| zonguin | 0 | 1,371,464,193 | 6.5% | ||

| resiliencia | 0 | 18,505,936,475 | 100% | ||

| pocketrocket | 0 | 13,315,439,355 | 100% | ||

| francosteemvotes | 0 | 1,865,935,155 | 13% | ||

| jasonbu | 0 | 3,931,864,870 | 10% | ||

| holisticmom | 0 | 1,454,391,166 | 3% | ||

| idx | 0 | 24,129,304,309 | 100% | ||

| aafeng | 0 | 244,875,139,920 | 25% | ||

| zyx066 | 0 | 68,635,241,340 | 30% | ||

| tribesteemup | 0 | 189,666,275,266 | 100% | ||

| etblink | 0 | 1,071,232,795,667 | 100% | ||

| santigs | 0 | 883,482,353,695 | 100% | ||

| floatinglin | 0 | 6,761,690,487 | 100% | ||

| gray00 | 0 | 917,211,343 | 100% | ||

| evildido | 0 | 731,760,584 | 6.5% | ||

| holbein81 | 0 | 56,394,892,969 | 100% | ||

| aidefr | 0 | 2,288,001,706 | 10.4% | ||

| cconn | 0 | 1,188,362,249 | 9% | ||

| solarsupermama | 0 | 2,178,808,021 | 11% | ||

| tomiscurious | 0 | 269,585,152,877 | 47.8% | ||

| sorin.cristescu | 0 | 2,697,921,257 | 0.5% | ||

| tomwafula | 0 | 1,631,942,655 | 50% | ||

| taskmaster4450 | 0 | 1,735,023,190,029 | 100% | ||

| fatman | 0 | 9,140,488,232 | 2% | ||

| votehero | 0 | 26,569,190,803 | 5.6% | ||

| insanityisfree | 0 | 485,702,600 | 26% | ||

| diosarich | 0 | 5,389,295,347 | 50% | ||

| rakkasan84 | 0 | 3,527,825,839 | 100% | ||

| sku77-poprocks | 0 | 2,588,699,991 | 100% | ||

| risemultiversity | 0 | 1,896,390,446 | 13% | ||

| msp-foundation | 0 | 145,551,204 | 100% | ||

| canadianrenegade | 0 | 10,444,492,172 | 5% | ||

| apanamamama | 0 | 167,396,825,683 | 100% | ||

| esteem.app | 0 | 311,592,208 | 1% | ||

| silasvogt | 0 | 996,600,793 | 50% | ||

| kieranpearson | 0 | 1,735,788,055 | 33% | ||

| the-gate-keeper | 0 | 773,399,308 | 100% | ||

| afterglow | 0 | 2,211,324,089 | 20% | ||

| petrolinivideo | 0 | 3,929,755,164 | 50% | ||

| dejan.vuckovic | 0 | 16,236,959,396 | 15% | ||

| informationwar | 0 | 102,720,770,596 | 26% | ||

| small.minion | 0 | 4,670,235,056 | 100% | ||

| sunsea | 0 | 13,971,294,341 | 4.5% | ||

| emrebeyler | 0 | 12,342,540,767,239 | 100% | ||

| libertyepodcast | 0 | 568,516,898 | 50% | ||

| dbddv01 | 0 | 929,605,468 | 6.5% | ||

| trucklife-family | 0 | 145,363,441,755 | 30% | ||

| belleamie | 0 | 5,417,949,344 | 25% | ||

| traf | 0 | 3,814,204,766,049 | 100% | ||

| robotics101 | 0 | 3,983,582,329 | 13% | ||

| lpv | 0 | 868,518,034 | 2.6% | ||

| iptrucs | 0 | 14,926,314,790 | 25% | ||

| breelikeatree | 0 | 226,326,504,726 | 100% | ||

| wiseagent | 0 | 13,483,088,057 | 25% | ||

| cryptonized | 0 | 236,856,996 | 14% | ||

| tomatom | 0 | 10,316,510,398 | 50% | ||

| eaglespirit | 0 | 9,714,582,311 | 5% | ||

| jim888 | 0 | 769,907,556,038 | 22% | ||

| for91days | 0 | 27,539,782,358 | 30% | ||

| sagescrub | 0 | 1,425,938,227 | 25% | ||

| gabrielatravels | 0 | 84,396,233,424 | 50% | ||

| gaborockstar | 0 | 103,852,415,507 | 50% | ||

| moxieme | 0 | 1,374,765,012 | 20% | ||

| roadstories | 0 | 5,105,889,181 | 20% | ||

| hallmann | 0 | 1,352,451,098,878 | 50% | ||

| adventuroussoul | 0 | 2,179,608,118 | 10% | ||

| criptomaster | 0 | 5,043,933,532 | 100% | ||

| empress-eremmy | 0 | 3,587,816,031 | 13% | ||

| aagabriel | 0 | 2,169,589,572 | 65% | ||

| auracraft | 0 | 3,099,102,604 | 100% | ||

| fundacja | 0 | 536,322,725,039 | 50% | ||

| atongis | 0 | 32,452,529,406 | 10% | ||

| tobias-g | 0 | 9,478,246,633 | 5% | ||

| kkarenmp | 0 | 5,273,696,441 | 4.5% | ||

| neeqi | 0 | 464,019,401 | 100% | ||

| tonysayers33 | 0 | 13,475,523,604 | 33% | ||

| openmind3000 | 0 | 2,524,081,461 | 50% | ||

| minerspost | 0 | 2,304,210,544 | 50% | ||

| aperterikk | 0 | 573,384,737 | 50% | ||

| tomhall | 0 | 378,182,346,737 | 100% | ||

| hempress | 0 | 1,004,388,338 | 11% | ||

| condeas | 0 | 1,780,717,055,478 | 100% | ||

| rubelynmacion | 0 | 48,442,116,128 | 100% | ||

| kirito-freud | 0 | 5,990,346,365 | 50% | ||

| darkpylon | 0 | 522,445,486 | 9% | ||

| nataboo | 0 | 1,277,721,573 | 50% | ||

| asgarth | 0 | 2,755,180,696,131 | 100% | ||

| vegan.niinja | 0 | 4,528,984,794 | 22% | ||

| happymichael | 0 | 2,628,099,079 | 100% | ||

| bertrayo | 0 | 4,861,571,291 | 4.5% | ||

| eugenekul | 0 | 922,035,740 | 22% | ||

| crowbarmama | 0 | 4,515,145,256 | 20% | ||

| lisfabian | 0 | 10,694,473,337 | 100% | ||

| homestead-guru | 0 | 39,004,452,076 | 50% | ||

| lesiopm | 0 | 1,265,672,706,027 | 100% | ||

| gabbyg86 | 0 | 21,879,667,681 | 35% | ||

| originalmrspice | 0 | 13,653,056,430 | 50% | ||

| oadissin | 0 | 12,292,447,771 | 4.5% | ||

| miosha | 0 | 593,713,821,762 | 100% | ||

| jeronimorubio | 0 | 10,634,230,719 | 100% | ||

| andablackwidow | 0 | 61,062,608,065 | 100% | ||

| bil.prag | 0 | 248,794,515,553 | 25% | ||

| celestialcow | 0 | 5,338,529,684 | 22% | ||

| ravenmus1c | 0 | 9,511,063,835 | 0.45% | ||

| sanderjansenart | 0 | 11,652,410,787 | 5% | ||

| louis88 | 0 | 2,393,278,822,886 | 100% | ||

| zaku | 0 | 25,807,818,104 | 9% | ||

| mrchef111 | 0 | 21,088,743,256 | 25% | ||

| racibo | 0 | 56,029,818,331 | 50% | ||

| darkfuseion | 0 | 1,146,217,187 | 100% | ||

| gisi | 0 | 4,453,872,503 | 10% | ||

| gadrian | 0 | 739,809,377,673 | 50% | ||

| illuminationst8 | 0 | 6,346,716,840 | 25% | ||

| inciter | 0 | 6,664,808,737 | 9% | ||

| manuelmusic | 0 | 785,095,522 | 5.4% | ||

| springlining | 0 | 37,777,319,815 | 100% | ||

| reinmar | 0 | 509,217,927 | 50% | ||

| achimmertens | 0 | 33,864,093,137 | 10% | ||

| brainpod | 0 | 1,024,532,029 | 25% | ||

| kgakakillerg | 0 | 20,996,521,205 | 10% | ||

| joeytechtalks | 0 | 1,583,605,922 | 50% | ||

| camuel | 0 | 4,265,920,573 | 10% | ||

| steemer-x | 0 | 519,852,532 | 50% | ||

| el-dee-are-es | 0 | 4,525,308,185 | 10% | ||

| we-are-one | 0 | 1,076,683,072 | 100% | ||

| thomaskatan | 0 | 1,690,296,608 | 70% | ||

| indigoocean | 0 | 25,284,275,226 | 50% | ||

| browery | 0 | 514,125,437,611 | 100% | ||

| glodniwiedzy | 0 | 18,331,740,395 | 50% | ||

| smacommunity | 0 | 977,219,872 | 50% | ||

| jancharlest | 0 | 271,117,121 | 50% | ||

| fw206 | 0 | 8,970,155,091,649 | 100% | ||

| techcoderx | 0 | 480,582,527,484 | 100% | ||

| brettblue | 0 | 823,413,499 | 50% | ||

| reality-variance | 0 | 888,997,436 | 20% | ||

| angatt | 0 | 1,754,052,222 | 25% | ||

| annemariemay | 0 | 479,188,539 | 50% | ||

| carilinger | 0 | 410,806,912,288 | 50% | ||

| alfrednoyed | 0 | 18,837,561,835 | 100% | ||

| onelovedtube | 0 | 13,776,606,250 | 100% | ||

| thefoundation | 0 | 26,660,602,561 | 52.4% | ||

| cambridgeport90 | 0 | 13,422,088,548 | 50% | ||

| commonlaw | 0 | 5,421,724,585 | 35% | ||

| steempeak | 0 | 1,327,949,401,192 | 100% | ||

| kstop1 | 0 | 13,536,029,207 | 100% | ||

| toddmck | 0 | 2,489,469,653 | 100% | ||

| gregorypatrick | 0 | 583,956,553 | 33% | ||

| the.rocket.panda | 0 | 11,246,878,809 | 100% | ||

| marblely | 0 | 10,437,962,504 | 9% | ||

| haccolong | 0 | 612,598,885 | 6.5% | ||

| newsnownorthwest | 0 | 485,773,263 | 3.9% | ||

| gaottantacinque | 0 | 961,819,950 | 100% | ||

| mannacurrency | 0 | 20,197,285,963 | 10% | ||

| xves | 0 | 20,277,480,903 | 50% | ||

| pignys | 0 | 23,134,841,457 | 50% | ||

| revueh | 0 | 718,027,473 | 13% | ||

| qila | 0 | 11,290,996,951 | 100% | ||

| lynxialicious | 0 | 1,954,326,901 | 25% | ||

| phillyc | 0 | 1,772,133,534 | 50% | ||

| melor9 | 0 | 13,161,683,510 | 50% | ||

| icepee | 0 | 16,114,275,786 | 50% | ||

| xara | 0 | 16,693,469,341 | 50% | ||

| bigbos99 | 0 | 586,971,866 | 100% | ||

| hoaithu | 0 | 1,947,638,845 | 5.52% | ||

| aconsciousness | 0 | 2,491,888,799 | 95% | ||

| deepdives | 0 | 96,914,935,267 | 26% | ||

| gasaeightyfive | 0 | 1,125,027,659 | 100% | ||

| bezkresu | 0 | 2,694,751,527 | 50% | ||

| dlike | 0 | 418,767,878,025 | 100% | ||

| anhvu | 0 | 1,234,610,473 | 5.2% | ||

| engrave | 0 | 4,268,472,381,221 | 50% | ||

| gubbatv | 0 | 44,214,730,052 | 100% | ||

| marcocasario | 0 | 87,989,273,693 | 14.23% | ||

| bdvoter | 0 | 5,694,820,555,232 | 9% | ||

| voter002 | 0 | 26,710,475,392 | 68% | ||

| cribbio | 0 | 2,632,129,698 | 100% | ||

| velmafia | 0 | 1,402,177,113 | 50% | ||

| thevil | 0 | 1,036,391,120,441 | 100% | ||

| riskneutral | 0 | 3,007,751,618 | 26% | ||

| czekolada | 0 | 4,736,767,485 | 50% | ||

| krolestwo | 0 | 28,733,971,128 | 50% | ||

| merlin7 | 0 | 81,267,246,163 | 40% | ||

| thrasher666 | 0 | 2,513,877,544 | 60% | ||

| peakmonsters | 0 | 494,539,182,879 | 100% | ||

| linuxbot | 0 | 28,740,217,903 | 100% | ||

| nmcdougal94 | 0 | 1,500,000,155 | 10% | ||

| raoufwilly | 0 | 812,307,486 | 30% | ||

| bia.birch | 0 | 7,373,338,182 | 50% | ||

| starrouge | 0 | 1,048,492,524 | 50% | ||

| wherein | 0 | 25,131,564,252 | 100% | ||

| cowboysblog | 0 | 3,084,734,285 | 100% | ||

| shainemata | 0 | 92,715,250,906 | 20% | ||

| zerofive | 0 | 872,902,987 | 50% | ||

| jacuzzi | 0 | 780,081,957 | 1.4% | ||

| cnstm | 0 | 122,836,207,636 | 100% | ||

| likuang007 | 0 | 651,543,308 | 100% | ||

| josehany | 0 | 158,586,220,709 | 100% | ||

| lianjingmedia | 0 | 982,925,398 | 100% | ||

| pl-travelfeed | 0 | 68,524,255,010 | 50% | ||

| hungrybear | 0 | 618,409,613 | 14% | ||

| sofiag | 0 | 435,787,476 | 36% | ||

| thelogicaldude | 0 | 647,074,237 | 1.8% | ||

| denizcakmak | 0 | 585,462,041 | 50% | ||

| russia-btc | 0 | 307,982,433,261 | 100% | ||

| alenox | 0 | 504,956,934 | 4.5% | ||

| maxsieg | 0 | 4,625,878,450 | 26% | ||

| oneloveipfs | 0 | 13,229,685,622 | 100% | ||

| photographercr | 0 | 519,011,729 | 20% | ||

| iamangierose | 0 | 530,608,702 | 50% | ||

| deeanndmathews | 0 | 71,120,906,637 | 100% | ||

| beerlover | 0 | 6,047,011,921 | 4.5% | ||

| clownworld | 0 | 1,184,962,827 | 13% | ||

| davidtron | 0 | 5,326,570,440 | 85% | ||

| denisdenis | 0 | 84,854,047,199 | 100% | ||

| imbartley | 0 | 795,651,092 | 25% | ||

| kgswallet | 0 | 2,899,308,795 | 50% | ||

| empoderat | 0 | 1,285,662,433,147 | 50% | ||

| farm1 | 0 | 905,183,563,197 | 100% | ||

| freedomring | 0 | 5,166,542,569 | 100% | ||

| logiczombie | 0 | 1,424,061,930 | 25% | ||

| urun | 0 | 11,186,276,327 | 100% | ||

| bilpcoinbot | 0 | 2,373,320,855 | 50% | ||

| roadstories.trib | 0 | 466,336,625 | 50% | ||

| oratione | 0 | 854,136,711 | 100% | ||

| dpoll.witness | 0 | 2,754,663,573 | 100% | ||

| gigel2 | 0 | 1,094,462,141,986 | 100% | ||

| bilpcoin.pay | 0 | 544,155,607 | 10% | ||

| tatiana21 | 0 | 897,295,186 | 100% | ||

| fenngen | 0 | 736,605,825 | 15% | ||

| lnakuma | 0 | 54,729,474,774 | 100% | ||

| delilhavores | 0 | 705,862,255 | 9% | ||

| roamingsparrow | 0 | 1,310,870,386 | 50% | ||

| inigo-montoya-jr | 0 | 609,306,565 | 22.1% | ||

| marblesz | 0 | 586,992,157 | 9% | ||

| julesquirin | 0 | 1,975,427,945 | 20% | ||

| keys-defender | 0 | 12,843,667,607 | 100% | ||

| hive-134220 | 0 | 34,824,555,609 | 100% | ||

| shinoxl | 0 | 8,465,677,275 | 100% | ||

| peakd | 0 | 9,120,689,962,572 | 100% | ||

| hivequebec | 0 | 890,733,137 | 13% | ||

| laruche | 0 | 20,064,335,974 | 13% | ||

| abundance.tribe | 0 | 7,118,099,816 | 100% | ||

| waiviolabs | 0 | 2,131,611,723 | 36% | ||

| softworld | 0 | 629,491,761,778 | 100% | ||

| kyleana | 0 | 2,081,674,285 | 50% | ||

| sadvil | 0 | 1,852,661,515 | 100% | ||

| hivelist | 0 | 4,313,375,869 | 2.7% | ||

| ninnu | 0 | 586,446,155 | 50% | ||

| ecency | 0 | 419,246,415,363 | 1% | ||

| cybercity | 0 | 1,022,326,344,457 | 100% | ||

| woelfchen | 0 | 385,547,121,879 | 100% | ||

| ghaazi | 0 | 6,796,588,825 | 100% | ||

| discoveringarni | 0 | 17,699,605,809 | 15% | ||

| devpress | 0 | 612,752,189 | 50% | ||

| noelyss | 0 | 1,848,482,443 | 4.5% | ||

| balvinder294 | 0 | 2,217,870,076 | 20% | ||

| lesiopm2 | 0 | 18,523,210,731 | 100% | ||

| jagged71 | 0 | 682,089,342 | 13% | ||

| ykroys | 0 | 4,626,290,634 | 100% | ||

| lucianav | 0 | 1,956,741,042 | 4.5% | ||

| patronpass | 0 | 1,422,344,521 | 9% | ||

| gabilan55 | 0 | 613,044,376 | 4.5% | ||

| olaunlimited | 0 | 585,125,856 | 45% | ||

| ecency.stats | 0 | 350,305,833 | 1% | ||

| guest4test1 | 0 | 0 | 100% | ||

| sylmarill | 0 | 618,413,353 | 100% | ||

| adedayoolumide | 0 | 838,797,197 | 50% | ||

| noalys | 0 | 1,311,917,060 | 4.5% | ||

| rima11 | 0 | 47,996,035,087 | 1.8% | ||

| danzocal | 0 | 5,434,961,308 | 100% | ||

| enrico2gameplays | 0 | 963,683,672 | 100% | ||

| mismo | 0 | 23,171,392,104 | 50% | ||

| kattycrochet | 0 | 77,723,903,159 | 50% | ||

| alex-rourke | 0 | 2,756,308,982,964 | 100% | ||

| merthin | 0 | 23,089,963,883 | 25% | ||

| ronasoliva1104 | 0 | 3,628,488,564 | 50% | ||

| pimpstudio-cash | 0 | 769,494,953 | 100% | ||

| cielitorojo | 0 | 3,331,398,411 | 6.3% | ||

| esmeesmith | 0 | 472,348,221 | 0.5% | ||

| elgatoshawua | 0 | 1,509,532,748 | 4.5% | ||

| creodas | 0 | 2,907,917,290 | 75% | ||

| rendrianarma | 0 | 2,450,064,945 | 50% | ||

| hexagono6 | 0 | 856,297,924 | 4.5% | ||

| leveluplifestyle | 0 | 2,567,149,736 | 50% | ||

| jane1289 | 0 | 109,562,714,096 | 50% | ||

| deeserauz | 0 | 3,150,023,479 | 100% | ||

| hive-defender | 0 | 470,873,276 | 100% | ||

| power-kappe | 0 | 629,711,753 | 9% | ||

| reidenling90 | 0 | 6,125,762,160 | 100% | ||

| key-defender.shh | 0 | 26,129,007 | 100% | ||

| okluvmee | 0 | 23,794,671,738 | 13% | ||

| louis.pay | 0 | 1,754,584,298 | 100% | ||

| hive.friends | 0 | 857,310,977 | 50% | ||

| egistar | 0 | 2,490,428,997 | 10% | ||

| shanhenry | 0 | 3,919,833,238 | 100% | ||

| mariaser | 0 | 3,160,664,934 | 6.3% | ||

| fotomaglys | 0 | 3,774,625,317 | 4.5% | ||

| seryi13 | 0 | 1,225,742,813 | 6.3% | ||

| rpren | 0 | 738,427,544 | 50% | ||

| lpa | 0 | 7,327,838,741 | 100% | ||

| godslove123 | 0 | 480,464,133 | 4.5% | ||

| irenicus30 | 0 | 1,107,164,226,404 | 100% | ||

| aprasad2325 | 0 | 1,701,465,369 | 4.5% | ||

| celeste413 | 0 | 1,242,682,787 | 100% | ||

| delver | 0 | 12,051,317,030 | 26% | ||

| nopasaran72 | 0 | 1,662,708,911 | 13% | ||

| pishio | 0 | 459,718,784,607 | 10% | ||

| panosdada.sports | 0 | 506,292,937 | 100% | ||

| iamleicester | 0 | 462,856,247 | 80% | ||

| altryx | 0 | 28,533,147,580 | 100% | ||

| mozzie5 | 0 | 17,942,317,397 | 100% | ||

| acantoni | 0 | 14,292,245,261 | 50% | ||

| astrocat-3663 | 0 | 910,986,023 | 50% | ||

| malhy | 0 | 1,988,367,204 | 4.5% | ||

| eolianpariah2 | 0 | 1,697,406,268 | 0.6% | ||

| dsky | 0 | 9,559,138,237,505 | 100% | ||

| greenkid11 | 0 | 11,912,379,886 | 100% | ||

| hylene74 | 0 | 101,464,081,088 | 100% | ||

| maroone | 0 | 9,407,125,967 | 100% | ||

| utymarvel | 0 | 7,621,990,671 | 100% | ||

| tillmea | 0 | 692,568,433 | 100% | ||

| gaposchkin | 0 | 10,198,670,415 | 100% | ||

| gezellig | 0 | 1,969,375,018 | 50% | ||

| meltysquid | 0 | 839,368,209 | 100% | ||

| ssebasv | 0 | 2,524,349,815 | 100% | ||

| crypto-shots | 0 | 228,284,808 | 50% | ||

| wellingt556 | 0 | 135,119,408,014 | 100% | ||

| sagarkothari88 | 0 | 10,999,332,660 | 0.5% | ||

| poliac | 0 | 65,534,016,745 | 100% | ||

| heteroclite | 0 | 32,283,456,763 | 50% | ||

| strawberrry | 0 | 1,830,145,092 | 100% | ||

| taradraz1 | 0 | 3,723,307,955 | 100% | ||

| anhdaden146 | 0 | 406,824,291,259 | 9% | ||

| leemah1 | 0 | 1,117,092,662 | 10% | ||

| cryptoshots.nft | 0 | 1,678,044,537 | 100% | ||

| eyesthewriter | 0 | 1,107,210,635 | 100% | ||

| poprzeczka | 0 | 688,029,457 | 5% | ||

| yisusth | 0 | 43,003,998,780 | 100% | ||

| empo.witness | 0 | 44,328,393,384 | 100% | ||

| serpent7776 | 0 | 813,908,110 | 100% | ||

| resonator | 0 | 8,045,673,886,503 | 26% | ||

| caelum1infernum | 0 | 1,427,320,459 | 10% | ||

| aletoalonewolf | 0 | 2,794,163,432 | 25% | ||

| franzpaulie | 0 | 44,035,117,702 | 100% | ||

| panosdada.tip | 0 | 641,030,886 | 100% | ||

| castri-ja | 0 | 876,986,606 | 2.25% | ||

| dutchchemist | 0 | 467,047,193 | 100% | ||

| cryptoshots.play | 0 | 0 | 10% | ||

| saranegi | 0 | 7,318,312,180 | 100% | ||

| oniriusz | 0 | 9,079,897,511 | 50% | ||

| coldbeetrootsoup | 0 | 951,737,387,951 | 100% | ||

| helloisalbani | 0 | 637,911,663 | 2.7% | ||

| tzae | 0 | 699,448,764 | 100% | ||

| njn | 0 | 32,364,209,905 | 100% | ||

| hive.aid | 0 | 41,164,021,150 | 50% | ||

| idksamad78699 | 0 | 2,208,306,510 | 4.5% | ||

| peak.open | 0 | 31,533,752,928 | 100% | ||

| pinkchic | 0 | 747,417,198 | 2.25% | ||

| queercoin | 0 | 99,536,656,403 | 50% | ||

| yeni82 | 0 | 16,677,567,735 | 100% | ||

| cryptoshotsdoom | 0 | 0 | 10% | ||

| hive-132595 | 0 | 1,653,366,506 | 100% | ||

| fredaig | 0 | 1,218,969,241 | 10% | ||

| brain71 | 0 | 569,696,290 | 13% | ||

| rubilu | 0 | 6,362,293,069 | 50% | ||

| fin-economist | 0 | 0 | 100% | ||

| mariolisrnaranjo | 0 | 11,532,452,764 | 100% | ||

| luvinlyf | 0 | 253,791,387,575 | 86% | ||

| beauty197 | 0 | 3,801,666,185 | 50% | ||

| ibbtammy | 0 | 150,216,730,563 | 100% | ||

| dovycola | 0 | 3,545,813,020 | 100% | ||

| kerokus | 0 | 13,585,196,391 | 100% | ||

| dahpilot | 0 | 3,281,508,550 | 10% | ||

| kathajimenezr | 0 | 6,714,081,306 | 100% | ||

| szejq | 0 | 226,454,930,192 | 100% | ||

| karina.gpt | 0 | 0 | 100% | ||

| jgiordi | 0 | 493,545,482 | 80% | ||

| tahastories1 | 0 | 487,343,085 | 4.5% | ||

| kap1 | 0 | 1,122,125,035 | 30% | ||

| les90 | 0 | 1,050,733,780 | 4.5% | ||

| aslamrer | 0 | 6,077,545,354 | 30.35% | ||

| bihutnetwork | 0 | 1,685,156,077 | 50% | ||

| samuraiscam | 0 | 2,694,315,799 | 0.25% | ||

| marianosdeath | 0 | 20,618,146,481 | 100% | ||

| hive-lu | 0 | 543,352,878,751 | 68.13% | ||

| like2cbrs | 0 | 801,266,736 | 100% | ||

| empo.voter | 0 | 12,067,459,132,917 | 50% | ||

| reyn-is-chillin | 0 | 760,649,633 | 50% | ||

| hivebeecon | 0 | 11,974,460,031 | 100% | ||

| riseofthepixels | 0 | 11,802,004,342 | 100% | ||

| thebeedevs | 0 | 50,911,014,591 | 100% | ||

| cbrsphilanthropy | 0 | 68,448,314,238 | 100% | ||

| buraimi | 0 | 10,259,246,247 | 9% | ||

| pixels.vault | 0 | 522,780,232,337 | 100% | ||

| kryptofoto | 0 | 2,030,134,724 | 100% | ||

| justinmora | 0 | 37,668,671,774 | 100% | ||

| lamaluis79 | 0 | 2,972,786,236 | 100% | ||

| encuentro | 0 | 12,843,434,727 | 100% | ||

| setpiece | 0 | 802,198,878 | 100% | ||

| blkchn | 0 | 22,830,362,978 | 60% | ||

| daryh | 0 | 36,044,247,496 | 100% | ||

| lolz.byte | 0 | 0 | 100% | ||

| lourica | 0 | 684,605,408 | 50% | ||

| yahya.umer.hayat | 0 | 14,207,056,036 | 100% | ||

| leader7 | 0 | 735,002,459 | 50% | ||

| tecnotronics | 0 | 50,354,599,981 | 100% | ||

| picazzy005 | 0 | 1,313,053,775 | 50% | ||

| hivelife-pl | 0 | 36,926,748,062 | 100% | ||

| mama21 | 0 | 13,140,551,389 | 100% | ||

| cocinator | 0 | 6,674,096,617 | 100% | ||

| muhammadhalim | 0 | 3,597,124,176 | 49% | ||

| trovepower | 0 | 568,574,288 | 13% | ||

| mmbbot | 0 | 518,933,200 | 13% | ||

| nabila24 | 0 | 2,442,352,026 | 100% |

> because Hive's got a little over 89 millions of blocks now

Heh. I see I'm not the only one who takes a month to write a post :oP

> After the oldest part file containing initial 1 million blocks is removed, the block log is effectively pruned and full replay is no longer possible.

Technically the information is correct, but it is worth pointing out explicitly that even if you are missing the oldest block_log parts, you can still replay as long as you have a valid snapshot that covers the missing blocks. Personally, though, I'd keep all parts somewhere, because snapshots easily become outdated.

| author | andablackwidow |

|---|---|

| permlink | re-thebeedevs-2024118t16422782z |

| category | hive-139531 |

| json_metadata | {"tags":["hive","dev","hived","block-log","pruning"],"app":"ecency/3.0.35-surfer","format":"markdown+html"} |

| created | 2024-11-08 15:04:21 |

| last_update | 2024-11-08 15:04:21 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-11-15 15:04:21 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 591 |

| author_reputation | 97,189,462,735,448 |

| root_title | "Core services - the block log revolution is here!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 138,342,563 |

| net_rshares | 0 |

!PIZZA

| author | danzocal |

|---|---|

| permlink | re-thebeedevs-smnqla |

| category | hive-139531 |

| json_metadata | {"tags":["hive-139531"],"app":"peakd/2024.10.15","image":[],"users":[]} |

| created | 2024-11-09 00:27:09 |

| last_update | 2024-11-09 00:27:09 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-11-16 00:27:09 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 6 |

| author_reputation | 12,432,803,008,396 |

| root_title | "Core services - the block log revolution is here!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 138,351,276 |

| net_rshares | 0 |

Awesome feature, especially for witnesses, because usually they are running tons of various Hive nodes to serve the community.

{(Amidala meme: "Because they are running tons of various Hive nodes to serve the community???")}

For example, I can run a few broadcaster nodes on cheap VPS servers, as they no longer need a huge amount of storage. It will also improve my block_log serving service, as it will be much easier to resume downloads, even if you had a different source of blocks before (the blocks are the same, but because of the compression, their storage can differ between the nodes).

| author | gtg |

|---|---|

| permlink | smmz4z |

| category | hive-139531 |

| json_metadata | {"app":"hiveblog/0.1"} |

| created | 2024-11-08 14:34:09 |

| last_update | 2024-11-08 14:34:09 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-11-15 14:34:09 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 597 |

| author_reputation | 461,898,288,716,338 |

| root_title | "Core services - the block log revolution is here!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 138,342,106 |

| net_rshares | 29,562,232,858 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| resiliencia | 0 | 18,147,446,091 | 100% | ||

| urun | 0 | 11,414,786,767 | 100% | ||

| endhivewatchers | 0 | 0 | 0.01% |

#### Hello thebeedevs!

**It's nice to let you know that your article won 🥇 place.**

Your post is among the best articles voted 7 days ago by the @hive-lu | King Lucoin Curator by **szejq**

You and your curator receive **0.0587 Lu** (Lucoin) investment token and a **15.51%** share of the reward from [Daily Report 477](/lucoin/@hive-lu/daily-report-day-477). Additionally, you can also receive a unique **LUGOLD** token for taking 1st place. All you need to do is reblog [this](/lucoin/@hive-lu/daily-report-day-477) report of the day with your winnings.

---

<center><sub>Invest in the **Lu token** (Lucoin) and get paid. With 50 Lu in your wallet, you also become the curator of the @hive-lu which follows your upvote. Buy Lu on the [Hive-Engine](https://hive-engine.com/trade/LU) exchange | World of Lu created by @szejq </sub></center>

<center><sub>_If you no longer want to receive notifications, reply to this comment with the word_ `STOP` _or to resume write a word_ `START`</sub> </center>

| author | hive-lu |

|---|---|

| permlink | lucoin-prize-9fx4se |

| category | hive-139531 |

| json_metadata | "" |

| created | 2024-11-16 03:30:12 |

| last_update | 2024-11-16 03:30:12 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-11-23 03:30:12 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 1,183 |

| author_reputation | 33,701,449,834,975 |

| root_title | "Core services - the block log revolution is here!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 138,484,597 |

| net_rshares | 0 |

Congratulations @thebeedevs! You have completed the following achievement on the Hive blockchain and have been rewarded with new badge(s):

<table><tr><td><img src="https://images.hive.blog/60x70/https://hivebuzz.me/@thebeedevs/upvoted.png?202411081735"></td><td>You received more than 4500 upvotes.<br>Your next target is to reach 4750 upvotes.</td></tr> </table>

<sub>_You can view your badges on [your board](https://hivebuzz.me/@thebeedevs) and compare yourself to others in the [Ranking](https://hivebuzz.me/ranking)_</sub>

<sub>_If you no longer want to receive notifications, reply to this comment with the word_ `STOP`</sub>

**Check out our last posts:**

<table><tr><td><a href="/hive-122221/@hivebuzz/pum-202410-delegations"><img src="https://images.hive.blog/64x128/https://i.imgur.com/fg8QnBc.png"></a></td><td><a href="/hive-122221/@hivebuzz/pum-202410-delegations">Our Hive Power Delegations to the October PUM Winners</a></td></tr></table>

| author | hivebuzz |

|---|---|

| permlink | notify-1731087815 |

| category | hive-139531 |

| json_metadata | {"image":["https://hivebuzz.me/notify.t6.png"]} |

| created | 2024-11-08 17:43:36 |

| last_update | 2024-11-08 17:43:36 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-11-15 17:43:36 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 956 |

| author_reputation | 369,483,390,319,365 |

| root_title | "Core services - the block log revolution is here!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 138,344,932 |

| net_rshares | 0 |

This is indeed an exciting feature. With it, I think we can run a full node locally and replay from the beginning when necessary, such as for upgrades or hard forks. We can then run lightweight nodes on servers (bare metal or VPS), which meets our needs and saves on disk space costs.

| author | oflyhigh |

|---|---|

| permlink | smnxxz |

| category | hive-139531 |

| json_metadata | {"app":"hiveblog/0.1"} |

| created | 2024-11-09 03:06:00 |

| last_update | 2024-11-09 03:06:00 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-11-16 03:06:00 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 289 |

| author_reputation | 6,435,987,827,056,412 |

| root_title | "Core services - the block log revolution is here!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 138,352,601 |

| net_rshares | 0 |

<center>PIZZA! $PIZZA slices delivered: @danzocal<sub>(7/10)</sub> tipped @thebeedevs </center>

| author | pizzabot |

|---|---|

| permlink | re-core-services-the-block-log-revolution-is-here-20241109t002908z |

| category | hive-139531 |

| json_metadata | "{"app": "pizzabot"}" |

| created | 2024-11-09 00:29:06 |

| last_update | 2024-11-09 00:29:06 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-11-16 00:29:06 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 100 |

| author_reputation | 7,737,314,250,392 |

| root_title | "Core services - the block log revolution is here!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 138,351,301 |

| net_rshares | 0 |

This is going to be great! Reducing the cost of running nodes is going to get more participants! Great work.

| author | rishi556 |

|---|---|

| permlink | smowsb |

| category | hive-139531 |

| json_metadata | {"app":"hiveblog/0.1"} |

| created | 2024-11-09 15:38:39 |

| last_update | 2024-11-09 15:38:39 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-11-16 15:38:39 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 108 |

| author_reputation | 134,530,025,716,854 |

| root_title | "Core services - the block log revolution is here!" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 138,360,498 |

| net_rshares | 0 |

hiveblocks
