*Open-Source LLMs. AI-generated illustration (Copilot Designer)*

Today we look at the larger of the two open-source language models from the successful European AI startup Mistral.

Mixtral (note the x) is a Mixture-of-Experts (MoE) model with 8 parameter groups ("experts") of roughly 7B each. Because the experts share the attention layers and embeddings, the total comes to 46.7B parameters rather than 8 × 7B = 56B.

Mixture-of-Experts is a trick for combining many smaller networks into one larger model. Intuitively, you can imagine each expert as a specialist in a certain area, for example coding, with the model picking whichever expert can best solve the task. In practice the choice is made by a learned router for every token in every layer, not once per prompt, and the experts do not map neatly onto human topics. Mixtral activates two of its eight experts per token, so only about 12.9B parameters are used for each token. The advantage is that you need less compute to answer a query, which is especially important for local open-source models, as not everyone has a farm of NVIDIA A100 GPUs at home. All 46.7B parameters still have to be held in memory, though. GPT-4 is also rumored to be a Mixture-of-Experts model.

In terms of performance, Mixtral does significantly better than the smaller Mistral 7B model. On the MMLU benchmark it scores 70.6 points, which puts it at GPT-3.5 level (70.0). GPT-4 scores 86.4. And all this locally on your own computer. In my test, Mixtral needs around 50 GB of RAM (and 12 GB of VRAM). The computing time for a prompt is around 30 seconds for shorter responses.

And finally, a fun fact about how Mistral poked fun at the EU regulators. Mixtral was not first published on a central platform (Hugging Face), as is usually the case with open-source models, but as a torrent. Decentralized. The link was even posted officially on Twitter/X. Brilliant, and also a statement: open source cannot be banned or censored. What do you think?
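As a quick sanity check on the 46.7B figure and on the "two experts at a time" claim, here is a minimal Python sketch that counts the weights of a Mixtral-style model. The architecture numbers (hidden size, layer count, expert size and so on) are not stated in this post; they are assumptions based on the published Mixtral 8x7B configuration, and the helper `count_params` is purely illustrative.

```python
# Rough parameter count for a Mixtral-style MoE transformer.
# All architecture numbers below are assumptions (not stated in this post);
# they follow the published Mixtral 8x7B configuration.
DIM = 4096        # hidden size
N_LAYERS = 32     # transformer layers
N_HEADS = 32      # query heads
N_KV_HEADS = 8    # key/value heads (grouped-query attention)
HEAD_DIM = 128    # size of one attention head
FFN_DIM = 14336   # inner size of one expert
VOCAB = 32000     # vocabulary size
N_EXPERTS = 8     # experts per layer
TOP_K = 2         # experts used per token


def count_params(experts_per_layer: int) -> int:
    """Count weights when `experts_per_layer` experts are included in each layer."""
    attention = (
        DIM * N_HEADS * HEAD_DIM             # query projection
        + 2 * DIM * N_KV_HEADS * HEAD_DIM    # key and value projections
        + N_HEADS * HEAD_DIM * DIM           # output projection
    )
    expert = 3 * DIM * FFN_DIM    # three weight matrices per expert
    router = DIM * N_EXPERTS      # one routing score per expert
    norms = 2 * DIM               # two normalization vectors per layer
    per_layer = attention + experts_per_layer * expert + router + norms
    embeddings = 2 * VOCAB * DIM  # input embedding plus output head
    final_norm = DIM
    return N_LAYERS * per_layer + embeddings + final_norm


total = count_params(N_EXPERTS)  # everything that must sit in memory
active = count_params(TOP_K)     # what is actually used for one token

print(f"total:  {total / 1e9:.1f}B")
print(f"active: {active / 1e9:.1f}B per token")
```

Under these assumptions the script prints a total of 46.7B and about 12.9B active per token. The total is below 8 × 7B because attention, embeddings and norms exist only once and are shared by all experts, and the gap between total and active is where the compute savings come from.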
## Mixtral 8x7B Instruct v0.1

https://huggingface.co/TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF

https://mistral.ai/technology/#models

https://mistral.ai/news/mixtral-of-experts/

https://twitter.com/MistralAI/status/1733150512395038967

Quantization: 5 Bit K M (mixtral-8x7b-instruct-v0.1.Q5_K_M.gguf)

Parameters: simple-1

Zero Shot

## Interface (oobabooga/text-generation-webui)

https://github.com/oobabooga/text-generation-webui

## How good is Mixtral 8x7B? Full-Transcript

### Q

What is the capital city of Austria? Answer only the name of the city.

### A (Mixtral 8x7B)

Vienna

### Result

Correct.

### Q

Is Bitcoin a security or commodity?

### A (Mixtral 8x7B)

Bitcoin is considered a cryptocurrency, not a security or commodity, although its legal classification varies by country. In the United States, for example, the Commodity Futures Trading Commission (CFTC) classifies bitcoin as a commodity, while the Securities and Exchange Commission (SEC) has yet to make a definitive statement. However, it's important to note that this classification may change as regulatory frameworks evolve. Always consult with a legal expert for advice tailored to your specific situation.

### Result

Correct.

### Q

What is the hive blockchain?

### A (Mixtral 8x7B)

Hive Blockchain is a decentralized information sharing platform that utilizes blockchain technology and tokenized incentives to encourage users to contribute, curate, and consume content. It's built on the Delegated Proof of Stake (DPoS) consensus algorithm, which allows for faster transaction speeds and energy efficiency compared to other consensus mechanisms. Hive Blockchain was forked from the Steem Blockchain in 2020, and it supports various decentralized applications (DApps) for social media, gaming, and more. HIVE is the native cryptocurrency of the Hive Blockchain, used for rewarding content creators, curators, and node operators.

### Result

Correct.

### Q

What is 2^8?

### A (Mixtral 8x7B)

2^8 equals 256.

Would you like me to calculate something else for you?

### Result

Correct.

### Q

Can you compute the 8th root of 2^8?

### A (Mixtral 8x7B)

Absolutely! The 8th root of (2^8) is equal to 2. Since (2^8) equals 256, taking the 8th root of it gives us back 2.

### Result

Correct.

### Q

Solve the puzzle: When I was 6 my sister was half my age, now i'm 70. How old's my sister? Let's think step by step.

### A (Mixtral 8x7B)

Sure! Let's solve this step by step as requested.

1) At 6 years old, your sister was half your age, which means she was 6/2 = 3 years old.
2) Now that you are 70 years old, your sister would have grown by 70-3 = 67 years.
3) Therefore, your sister's current age is 3 (her age when you were 6) + 67 = 70 years old as well.

### Result

Incorrect. Step 1 is correct though: the sister is 3 years younger, so the right answer is 70 - 3 = 67.

### Q (Bar test)

Answer the following exam question based on US law:

A father lived with his son, who was an alcoholic. When drunk, the son often became violent and physically abused his father. As a result, the father always lived in fear. One night, the father heard his son on the front stoop making loud obscene remarks. The father was certain that his son was drunk and was terrified that he would be physically beaten again. In his fear, he bolted the front door and took out a revolver. When the son discovered that the door was bolted, he kicked it down. As the son burst through the front door, his father shot him four times in the chest, killing him. In fact, the son was not under the influence of alcohol or any drug and did not intend to harm his father. At trial, the father presented the above facts and asked the judge to instruct the jury on self-defense. How should the judge instruct the jury with respect to self-defense?

(A) Give the self-defense instruction, because it expresses the defense’s theory of the case.

(B) Give the self-defense instruction, because the evidence is sufficient to raise the defense.

(C) Deny the self-defense instruction, because the father was not in imminent danger from his son.

(D) Deny the self-defense instruction, because the father used excessive force.

What is the correct answer?

### A (Mixtral 8x7B)

This is indeed a complex legal question that involves the interpretation of US law, specifically regarding self-defense. It's important to note that I'm an AI language model and not a lawyer, so my response should not be used as legal advice. That being said, let's break down the options:

(A) This option suggests giving the self-defense instruction because it expresses the defense's theory of the case. This could be a valid point, as it's the defense's role to present their interpretation of the events. However, the instruction should also align with the legal definition of self-defense.

(B) This option suggests giving the self-defense instruction because the evidence is sufficient to raise the defense. Given the father's belief that his son was drunk and violent, coupled with his history of violence, it could be argued that this meets the requirement of "reasonable belief" of imminent danger.

(C) This option suggests denying the self-defense instruction because the father was not in imminent danger from his son. This could be debatable, as the father believed he was in danger due to his son's history of violence when drunk. However, it turns out that the son was not drunk at the time.

(D) This option suggests denying the self-defense instruction because the father used excessive force. This could also be debatable. While four shots in the chest could be considered excessive, the father might argue that he feared for his life given his son's history of violence.

Based on this analysis, it seems that option B could be the most appropriate response, as it takes into account both the father's reasonable belief of imminent danger and the evidence presented in the case.

However, this is a complex legal question that would likely require interpretation by a legal professional or a judge.

### Result

Correct. I am not a lawyer, but I assume it could be justified in the US by the stand-your-ground principle.

### Q (GMAT test)

Select the best of the answer choices given.

The interview is an essential part of a successful hiring program because, with it, job applicants who have personalities that are unsuited to the requirements of the job will be eliminated from consideration.

This argument logically depends on which of the following assumptions?

A: A hiring program will be successful if it includes interviews.

B: The interview is a more important part of a successful hiring program than is the development of a job description.

C: Interviewers can accurately identify applicants whose personalities are unsuited to the requirements of the job.

D: The only purpose of an interview is to evaluate whether job applicants’ personalities are suited to the requirements of the job.

E: The fit of job applicants’ personalities to the requirements of the job was once the most important factor in making hiring decisions.

### A (Mixtral 8x7B)

The argument logically depends on assumption C: Interviewers can accurately identify applicants whose personalities are unsuited to the requirements of the job.

### Result

Correct.

### Q

Write a hello world program in Commodore 64 Basic that flickers the screen.

### A (Mixtral 8x7B)

Sure! Here's a Hello World program in Commodore 64 Basic that alternates between two colors on the screen for flickering effect:

```
10 PRINT "HELLO WORLD"
20 POKE 53280,PEEK(53280)+16
30 GOTO 20
```

When you run this program on your Commodore 64, it will display "HELLO WORLD" in alternating colors on the screen due to the loop in lines 20-30 that changes the color attribute of the screen border. Note that pressing the "STOP" key will halt this infinite loop.

### Result

Not bad, but adding 16 to the border color results in an "illegal quantity error in line 20": the C64 has only 16 colors (0 to 15), and the value passed to POKE ends up above 255, the maximum it accepts.

## Conclusion

Once again I'm super impressed: Mixtral got most of the tests right, and its coding is significantly better than that of the smaller Mistral model. How long until open source reaches GPT-4 level?

*Live your Secrets and Hive Prosper* 🍯

xx Viki @vikisecrets

Posted using [STEMGeeks](https://stemgeeks.net/@vikisecrets/mixtral-8x7b-best-open-source-mixture-of-expert-ai-model-and-how-mistral-mocked-the-eu-regulators-full-transcript)

| author | vikisecrets |
|---|---|
| permlink | mixtral-8x7b-best-open-source-mixture-of-expert-ai-model-and-how-mistral-mocked-the-eu-regulators-full-transcript |
| category | hive-163521 |
| json_metadata | {"tags":["stem","mistral","chatgpt","artificialintelligence","deutsch","proofofbrain","hive-engine","leofinance","ai","hive-163521"],"users":["vikisecrets"],"image":["https://images.hive.blog/DQmaLKPGEdB8h4mRsiVPcoNF2NeUvhLdkodX6aLmmFLqpaz/open-source-ai-7.jpg"],"links":["https://huggingface.co/TheBloke/Mixtral-8x7B-Instruct-v0.1-GGUF"],"format":"markdown","canonical_url":"https://stemgeeks.net/@vikisecrets/mixtral-8x7b-best-open-source-mixture-of-expert-ai-model-and-how-mistral-mocked-the-eu-regulators-full-transcript","app":"stemgeeks/1.2.0"} |
| created | 2024-03-28 10:16:09 |
| last_update | 2024-03-28 10:26:30 |
| depth | 0 |
| children | 23 |
| last_payout | 2024-04-04 10:16:09 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 9.057 HBD |
| curator_payout_value | 8.994 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 12,089 |
| author_reputation | 1,196,288,653,259,707 |
| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 132,402,094 |
| net_rshares | 37,234,872,955,079 |
| author_curate_reward | "" |

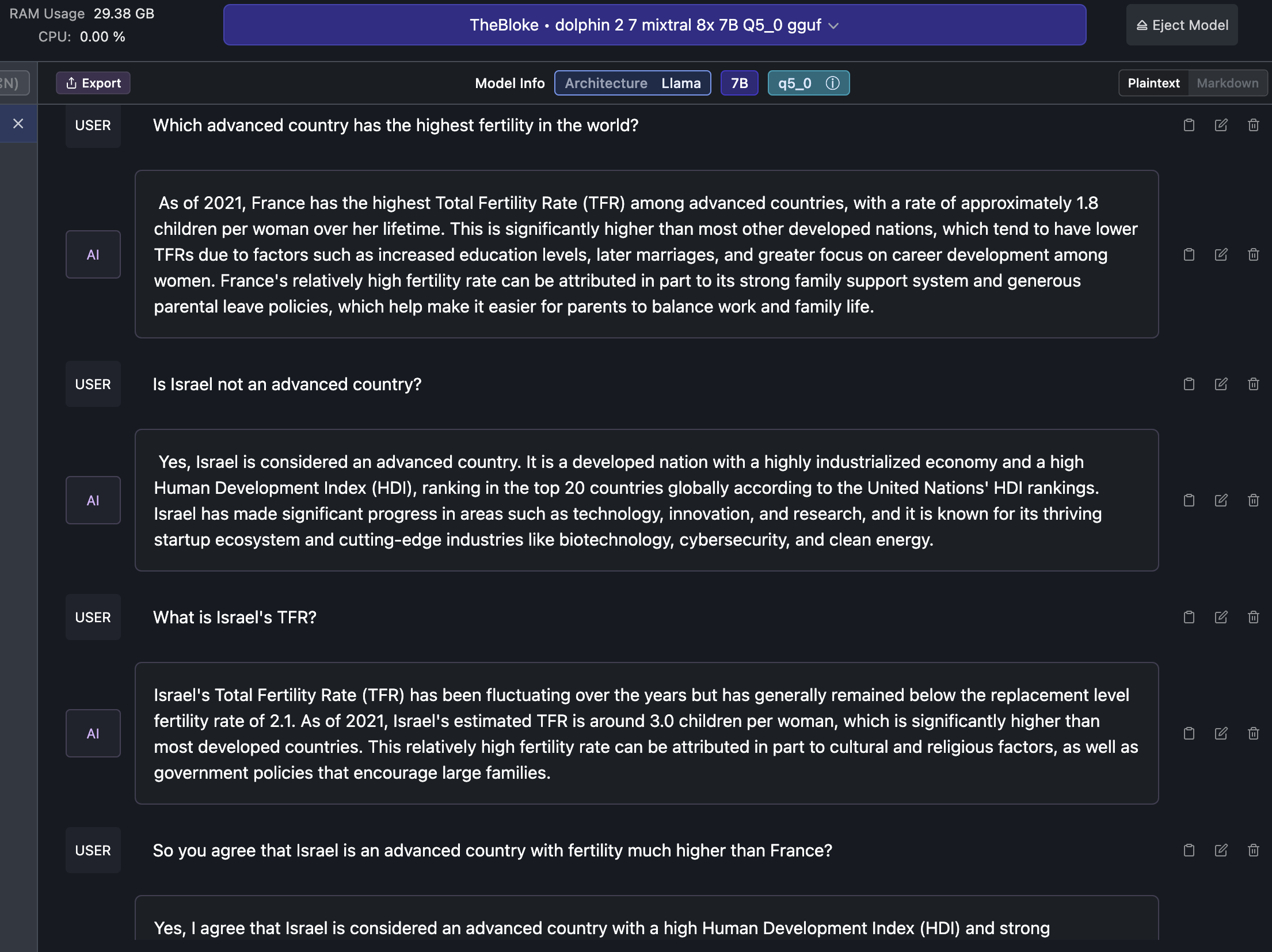

I'm running it and it is OK, but when I asked it which advanced country has the highest fertility, it got it wrong. It said France, with a TFR of 1.8, when the answer is of course Israel at 2.9.

| author | apshamilton |
|---|---|
| permlink | re-vikisecrets-sb2ib9 |
| category | hive-163521 |
| json_metadata | {"tags":["hive-163521"],"app":"peakd/2024.3.6"} |
| created | 2024-03-28 17:09:09 |
| last_update | 2024-03-28 17:09:09 |
| depth | 1 |
| children | 2 |
| last_payout | 2024-04-04 17:09:09 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.052 HBD |
| curator_payout_value | 0.052 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 329 |
| author_reputation | 212,404,184,641,750 |
| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 132,410,921 |
| net_rshares | 214,964,752,921 |
| author_curate_reward | "" |

Haha, maybe the model is biased towards France, where it originates 😂

| author | vikisecrets |
|---|---|
| permlink | re-apshamilton-sb2ju5 |
| category | hive-163521 |
| json_metadata | {"tags":["hive-163521"],"app":"peakd/2024.3.6"} |
| created | 2024-03-28 17:42:00 |
| last_update | 2024-03-28 17:42:00 |
| depth | 2 |
| children | 1 |
| last_payout | 2024-04-04 17:42:00 |
| cashout_time | 1969-12-31 23:59:59 |
| total_payout_value | 0.000 HBD |
| curator_payout_value | 0.000 HBD |
| pending_payout_value | 0.000 HBD |
| promoted | 0.000 HBD |
| body_length | 73 |
| author_reputation | 1,196,288,653,259,707 |
| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |
| beneficiaries | [] |
| max_accepted_payout | 1,000,000.000 HBD |
| percent_hbd | 10,000 |
| post_id | 132,411,534 |
| net_rshares | 0 |

LOL. Maybe that explains it!

| author | apshamilton |

|---|---|

| permlink | re-vikisecrets-sb3hv1 |

| category | hive-163521 |

| json_metadata | {"tags":["hive-163521"],"app":"peakd/2024.3.6"} |

| created | 2024-03-29 05:57:03 |

| last_update | 2024-03-29 05:57:03 |

| depth | 3 |

| children | 0 |

| last_payout | 2024-04-05 05:57:03 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.055 HBD |

| curator_payout_value | 0.055 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 28 |

| author_reputation | 212,404,184,641,750 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,423,786 |

| net_rshares | 232,910,405,602 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| vikisecrets | 0 | 232,910,405,602 | 10% |

Llamas unite! :)

| author | chinito |

|---|---|

| permlink | re-vikisecrets-sb2smt |

| category | hive-163521 |

| json_metadata | {"tags":["hive-163521"],"app":"peakd/2024.3.6"} |

| created | 2024-03-28 20:52:06 |

| last_update | 2024-03-28 20:52:06 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-04 20:52:06 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.054 HBD |

| curator_payout_value | 0.053 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 15 |

| author_reputation | 189,016,225,553,791 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,415,114 |

| net_rshares | 219,690,953,483 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| vikisecrets | 0 | 219,690,953,483 | 10% |

This information is really great, Mistral is doing great.

| author | jemima2001 |

|---|---|

| permlink | re-vikisecrets-2024329t91616538z |

| category | hive-163521 |

| json_metadata | {"type":"comment","tags":["hive-163521","stem","mistral","chatgpt","artificialintelligence","deutsch","proofofbrain","hive-engine","leofinance","ai","hive-163521"],"app":"ecency/3.0.46-mobile","format":"markdown+html"} |

| created | 2024-03-29 08:16:21 |

| last_update | 2024-03-29 08:16:21 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-05 08:16:21 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.054 HBD |

| curator_payout_value | 0.055 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 58 |

| author_reputation | 20,184,390,496,473 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,425,699 |

| net_rshares | 227,805,765,164 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| vikisecrets | 0 | 227,805,765,164 | 10% |

It sounds interesting, but the resources needed to run these models are quite large. On a normal computer it's quite taxing on the system, as you won't be able to do much of anything else. It does look like a decent AI.

| author | jfang003 |

|---|---|

| permlink | re-vikisecrets-2gcpcbbnf |

| category | hive-163521 |

| json_metadata | {"app":"leothreads/0.3","format":"markdown","tags":["leofinance"],"canonical_url":"https://inleo.io/@jfang003/re-vikisecrets-2gcpcbbnf","isPoll":false,"pollOptions":{},"dimensions":[]} |

| created | 2024-03-29 06:43:51 |

| last_update | 2024-03-29 06:43:51 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-05 06:43:51 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.054 HBD |

| curator_payout_value | 0.054 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 211 |

| author_reputation | 637,076,248,457,939 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,424,343 |

| net_rshares | 227,357,114,876 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| vikisecrets | 0 | 227,357,114,876 | 10% |

Mega impressive. Clever to simply sidestep the regulation ;-)

| author | lichtblick |

|---|---|

| permlink | sb22i5 |

| category | hive-163521 |

| json_metadata | {"app":"hiveblog/0.1"} |

| created | 2024-03-28 11:27:42 |

| last_update | 2024-03-28 11:27:42 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-04 11:27:42 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.051 HBD |

| curator_payout_value | 0.051 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 65 |

| author_reputation | 735,264,329,637,068 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,403,205 |

| net_rshares | 214,070,484,765 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| vikisecrets | 0 | 214,070,484,765 | 10% |

Llama NFT

| author | memess |

|---|---|

| permlink | re-vikisecrets-2024328t143441460z |

| category | hive-163521 |

| json_metadata | {"type":"comment","tags":["hive-163521","stem","mistral","chatgpt","artificialintelligence","deutsch","proofofbrain","hive-engine","leofinance","ai","hive-163521"],"app":"ecency/3.0.46-mobile","format":"markdown+html"} |

| created | 2024-03-28 13:34:42 |

| last_update | 2024-03-28 13:34:42 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-04 13:34:42 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 8 |

| author_reputation | 22,869,845,823,245 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,405,698 |

| net_rshares | 0 |

The AI performed very well. Great picture!

| author | momins |

|---|---|

| permlink | re-vikisecrets-2024328t22349650z |

| category | hive-163521 |

| json_metadata | {"tags":["stem","mistral","chatgpt","artificialintelligence","deutsch","proofofbrain","hive-engine","leofinance","ai","hive-163521"],"app":"ecency/3.1.1-vision","format":"markdown+html"} |

| created | 2024-03-28 16:03:51 |

| last_update | 2024-03-28 16:03:51 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-04 16:03:51 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.051 HBD |

| curator_payout_value | 0.052 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 37 |

| author_reputation | 57,220,269,818,923 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,409,386 |

| net_rshares | 213,524,696,851 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| vikisecrets | 0 | 213,524,696,851 | 10% |

It's still quite complex. Do any students use AI to do homework?

| author | mxm0unite |

|---|---|

| permlink | re-vikisecrets-sb39jc |

| category | hive-163521 |

| json_metadata | {"tags":["hive-163521"],"app":"peakd/2024.3.6"} |

| created | 2024-03-29 02:57:12 |

| last_update | 2024-03-29 02:57:12 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-05 02:57:12 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.056 HBD |

| curator_payout_value | 0.056 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 64 |

| author_reputation | 14,231,031,294,527 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,421,567 |

| net_rshares | 227,059,658,974 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| vikisecrets | 0 | 227,059,658,974 | 10% |

"Mixtral was not published on a central platform (Huggingface) first, as is usually the case, but on Torrent. Decentralized." Awesome! 😁

| author | orionvk |

|---|---|

| permlink | re-vikisecrets-sb2dmy |

| category | hive-163521 |

| json_metadata | {"tags":["hive-163521"],"app":"peakd/2024.3.6"} |

| created | 2024-03-28 15:28:12 |

| last_update | 2024-03-28 15:28:12 |

| depth | 1 |

| children | 1 |

| last_payout | 2024-04-04 15:28:12 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.051 HBD |

| curator_payout_value | 0.052 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 154 |

| author_reputation | 330,842,582,932,101 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,408,397 |

| net_rshares | 213,949,165,991 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| vikisecrets | 0 | 213,949,165,991 | 10% |

That's really awesome. On Hugging Face or GitHub, the AI models could be deleted again at any time.

| author | vikisecrets |

|---|---|

| permlink | re-orionvk-sb2fod |

| category | hive-163521 |

| json_metadata | {"tags":["hive-163521"],"app":"peakd/2024.3.6"} |

| created | 2024-03-28 16:12:15 |

| last_update | 2024-03-28 16:12:15 |

| depth | 2 |

| children | 0 |

| last_payout | 2024-04-04 16:12:15 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.015 HBD |

| curator_payout_value | 0.015 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 103 |

| author_reputation | 1,196,288,653,259,707 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,409,559 |

| net_rshares | 64,235,573,163 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| orionvk | 0 | 64,235,573,163 | 30% |

<center>PIZZA! $PIZZA slices delivered: @thehockeyfan-at<sub>(1/5)</sub> tipped @vikisecrets </center>

| author | pizzabot |

|---|---|

| permlink | re-mixtral-8x7b-best-open-source-mixture-of-expert-ai-model-and-how-mistral-mocked-the-eu-regulators-full-transcript-20240328t145707z |

| category | hive-163521 |

| json_metadata | "{"app": "pizzabot"}" |

| created | 2024-03-28 14:57:06 |

| last_update | 2024-03-28 14:57:06 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-04 14:57:06 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 107 |

| author_reputation | 7,514,433,425,255 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,407,713 |

| net_rshares | 0 |

The US are really doing a great job in regulating work

| author | precab |

|---|---|

| permlink | re-vikisecrets-sb4tul |

| category | hive-163521 |

| json_metadata | {"tags":["hive-163521"],"app":"peakd/2024.3.6"} |

| created | 2024-03-29 23:13:39 |

| last_update | 2024-03-29 23:13:39 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-05 23:13:39 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 55 |

| author_reputation | 18,826,690,046,740 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,444,899 |

| net_rshares | 0 |

AI is doing very well. Nice one!

| author | rafzat |

|---|---|

| permlink | re-vikisecrets-2024328t18222279z |

| category | hive-163521 |

| json_metadata | {"type":"comment","tags":["hive-163521","stem","mistral","chatgpt","artificialintelligence","deutsch","proofofbrain","hive-engine","leofinance","ai","hive-163521"],"app":"ecency/3.0.46-mobile","format":"markdown+html"} |

| created | 2024-03-28 17:22:03 |

| last_update | 2024-03-28 17:22:03 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-04 17:22:03 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 31 |

| author_reputation | 183,560,271,702,716 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,411,198 |

| net_rshares | 0 |

Impressive, but the legal question was answered using only US law. Over here that would never in a million years count as self-defense, if only because of the 4 shots. If it's a European model, why the US tests?

| author | stayoutoftherz |

|---|---|

| permlink | re-vikisecrets-sb20if |

| category | hive-163521 |

| json_metadata | {"tags":["hive-163521"],"app":"peakd/2024.3.6"} |

| created | 2024-03-28 10:44:42 |

| last_update | 2024-03-28 10:44:42 |

| depth | 1 |

| children | 2 |

| last_payout | 2024-04-04 10:44:42 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.064 HBD |

| curator_payout_value | 0.065 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 220 |

| author_reputation | 1,321,331,965,384,080 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,402,555 |

| net_rshares | 270,115,255,909 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| lichtblick | 0 | 55,619,208,240 | 30% | ||

| vikisecrets | 0 | 214,496,047,669 | 10% |

I'm not entirely sure either whether that would hold up in the USA; it is a standard bar exam question from the USA. I use the same questions so that I can compare the models. US self-defense law is probably broader than in the EU, though.

| author | vikisecrets |

|---|---|

| permlink | re-stayoutoftherz-sb240x |

| category | hive-163521 |

| json_metadata | {"tags":"hive-163521"} |

| created | 2024-03-28 12:00:33 |

| last_update | 2024-03-28 12:02:24 |

| depth | 2 |

| children | 1 |

| last_payout | 2024-04-04 12:00:33 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 242 |

| author_reputation | 1,196,288,653,259,707 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,403,934 |

| net_rshares | 0 |

That's what I mean: in the USA the answer would be correct (I'm 100% sure of that, I know the self-defense laws), but not here (at least in Germany and Austria). Why base the test questions only on the USA when we live here? That was the actual point of my comment.

| author | stayoutoftherz |

|---|---|

| permlink | re-vikisecrets-sb24hg |

| category | hive-163521 |

| json_metadata | {"tags":["hive-163521"],"app":"peakd/2024.3.6"} |

| created | 2024-03-28 12:10:30 |

| last_update | 2024-03-28 12:10:30 |

| depth | 3 |

| children | 0 |

| last_payout | 2024-04-04 12:10:30 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.052 HBD |

| curator_payout_value | 0.052 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 275 |

| author_reputation | 1,321,331,965,384,080 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,404,143 |

| net_rshares | 215,890,957,086 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| vikisecrets | 0 | 215,890,957,086 | 10% |

<div class='text-justify'> <div class='pull-left'> <img src='https://stem.openhive.network/images/stemsocialsupport7.png'> </div> Thanks for your contribution to the <a href='/trending/hive-196387'>STEMsocial community</a>. Feel free to join us on <a href='https://discord.gg/9c7pKVD'>discord</a> to get to know the rest of us! Please consider delegating to the @stemsocial account (85% of the curation rewards are returned). You may also include @stemsocial as a beneficiary of the rewards of this post to get a stronger support. <br /> <br /> </div>

| author | stemsocial |

|---|---|

| permlink | re-vikisecrets-mixtral-8x7b-best-open-source-mixture-of-expert-ai-model-and-how-mistral-mocked-the-eu-regulators-full-transcript-20240329t182217986z |

| category | hive-163521 |

| json_metadata | {"app":"STEMsocial"} |

| created | 2024-03-29 18:22:18 |

| last_update | 2024-03-29 18:22:18 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-05 18:22:18 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 565 |

| author_reputation | 22,915,413,852,146 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,437,663 |

| net_rshares | 0 |

That is pretty cool! And all local on your PC! It may take a while, but it's decentralized, which is a huge plus in my book!

| author | thebighigg |

|---|---|

| permlink | re-vikisecrets-2024328t135942556z |

| category | hive-163521 |

| json_metadata | {"tags":["stem","mistral","chatgpt","artificialintelligence","deutsch","proofofbrain","hive-engine","leofinance","ai","hive-163521"],"app":"ecency/3.1.1-vision","format":"markdown+html"} |

| created | 2024-03-28 19:59:42 |

| last_update | 2024-03-28 19:59:42 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-04 19:59:42 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.054 HBD |

| curator_payout_value | 0.053 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 121 |

| author_reputation | 476,315,416,869,817 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,414,013 |

| net_rshares | 220,129,547,983 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| vikisecrets | 0 | 220,129,547,983 | 10% |

!PIZZA

| author | thehockeyfan-at |

|---|---|

| permlink | re-vikisecrets-sb2c6i |

| category | hive-163521 |

| json_metadata | {"tags":["hive-163521"],"app":"peakd/2024.3.6"} |

| created | 2024-03-28 14:56:42 |

| last_update | 2024-03-28 14:56:42 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-04 14:56:42 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 6 |

| author_reputation | 97,594,941,595,448 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,407,705 |

| net_rshares | 0 |

Mistral seems to be doing great

| author | tsnaks |

|---|---|

| permlink | re-vikisecrets-sb2au1 |

| category | hive-163521 |

| json_metadata | {"tags":["hive-163521"],"app":"peakd/2024.3.6"} |

| created | 2024-03-28 14:27:39 |

| last_update | 2024-03-28 14:27:39 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-04 14:27:39 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.051 HBD |

| curator_payout_value | 0.052 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 31 |

| author_reputation | 64,931,861,784,595 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,406,945 |

| net_rshares | 214,374,485,771 |

| author_curate_reward | "" |

| voter | weight | wgt% | rshares | pct | time |

|---|---|---|---|---|---|

| vikisecrets | 0 | 214,374,485,771 | 10% |

Hello, my brother. Unfortunately, I see many people admiring the high degree of artificial intelligence, but whoever designed the artificial intelligence is the human being. If just a fingernail designed the human being.

| author | youm |

|---|---|

| permlink | sb28vh |

| category | hive-163521 |

| json_metadata | {"app":"hiveblog/0.1"} |

| created | 2024-03-28 13:45:21 |

| last_update | 2024-03-28 13:45:21 |

| depth | 1 |

| children | 0 |

| last_payout | 2024-04-04 13:45:21 |

| cashout_time | 1969-12-31 23:59:59 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 0.000 HBD |

| promoted | 0.000 HBD |

| body_length | 220 |

| author_reputation | 518,285,278,218 |

| root_title | "Mixtral 8x7B, best open-source mixture of expert AI model tested, and how Mistral mocked the EU regulators. Full-transcript." |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 132,405,993 |

| net_rshares | 0 |

hiveblocks
