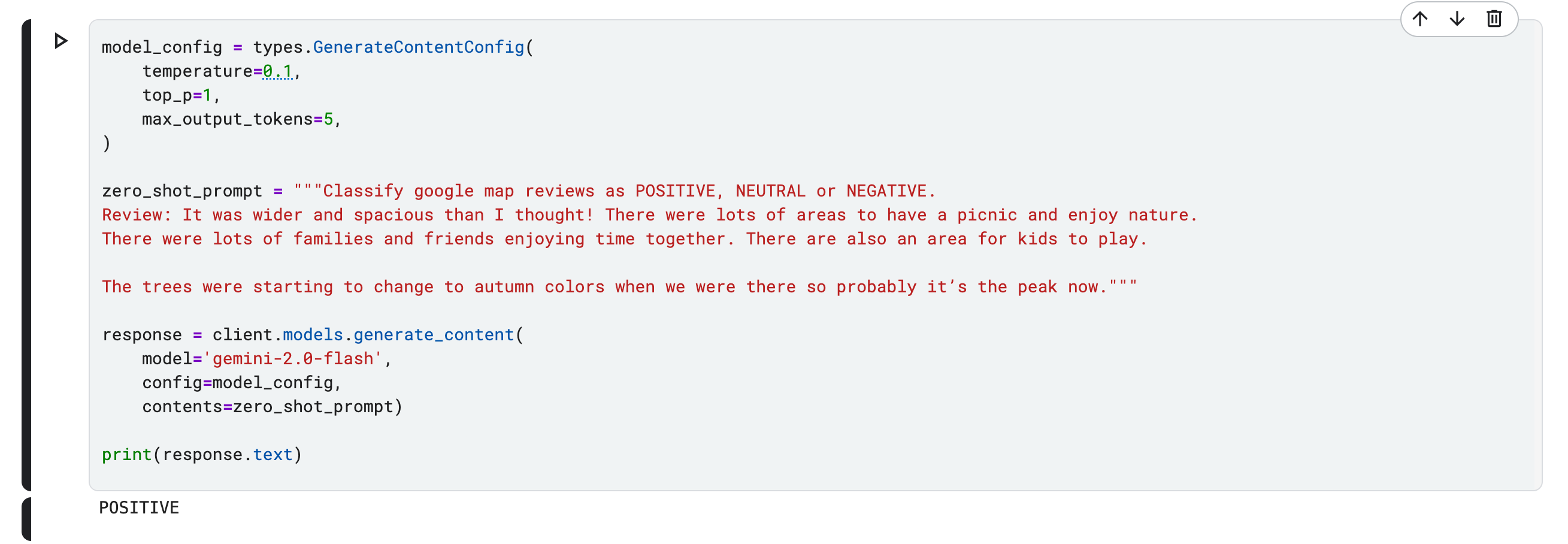

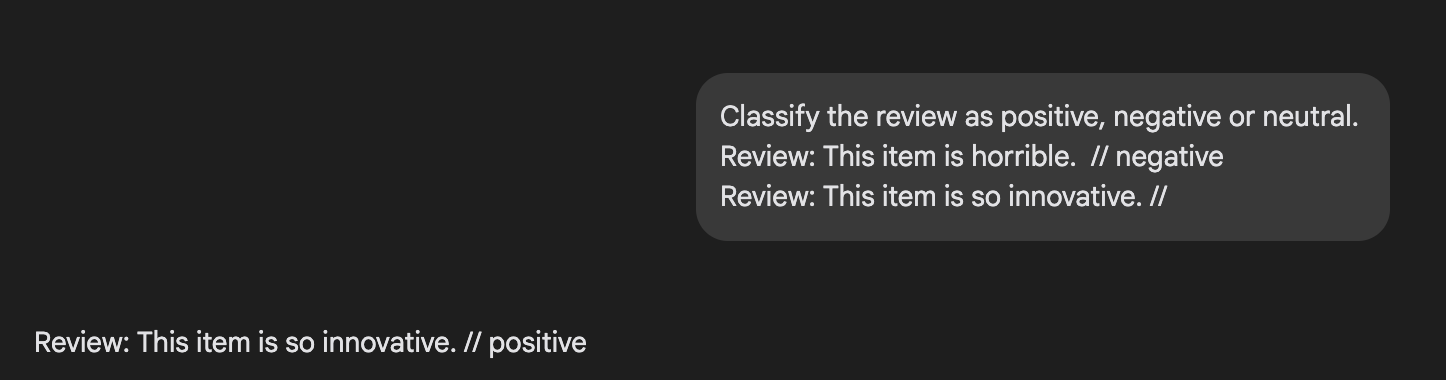

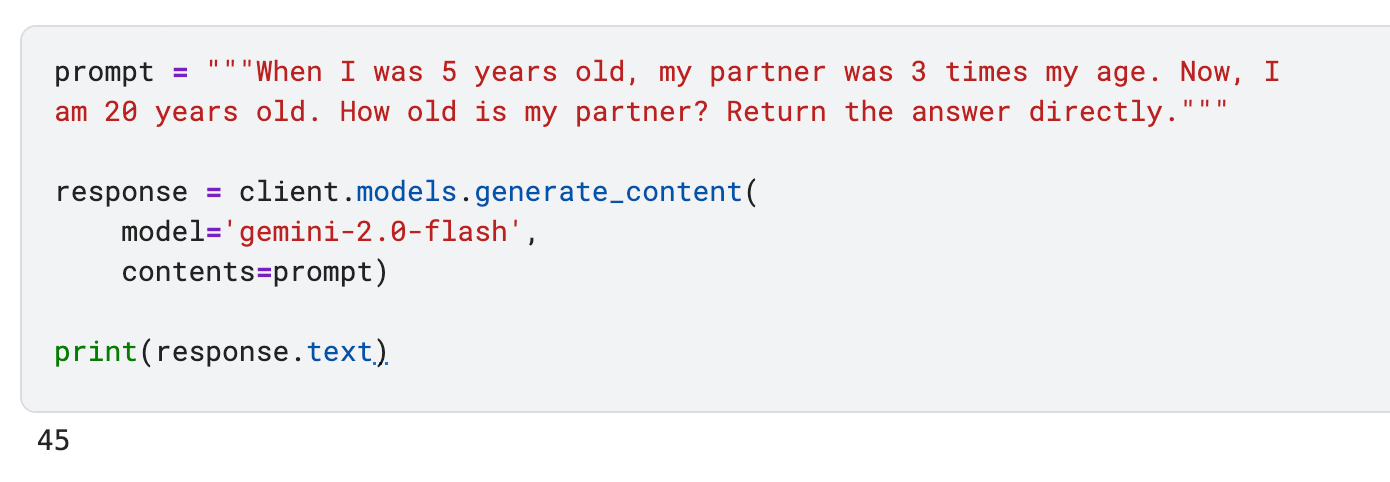

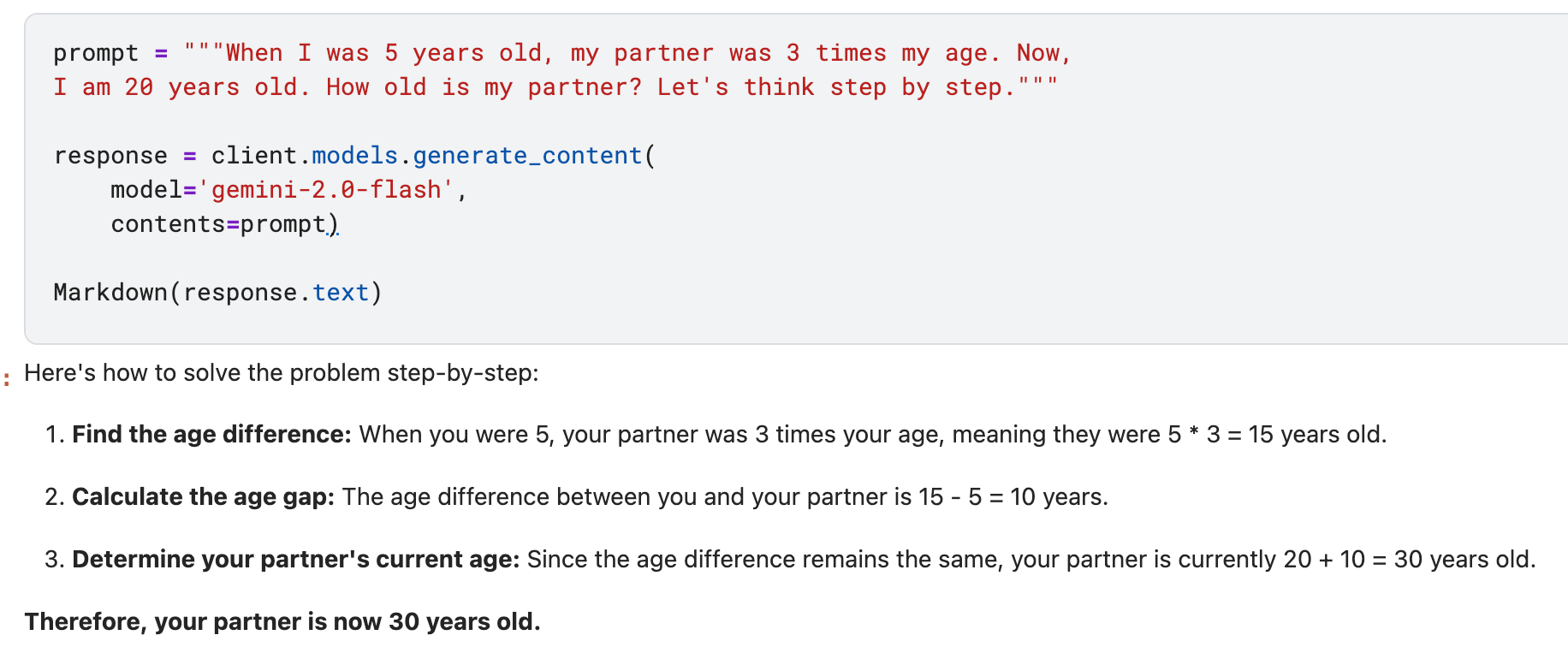

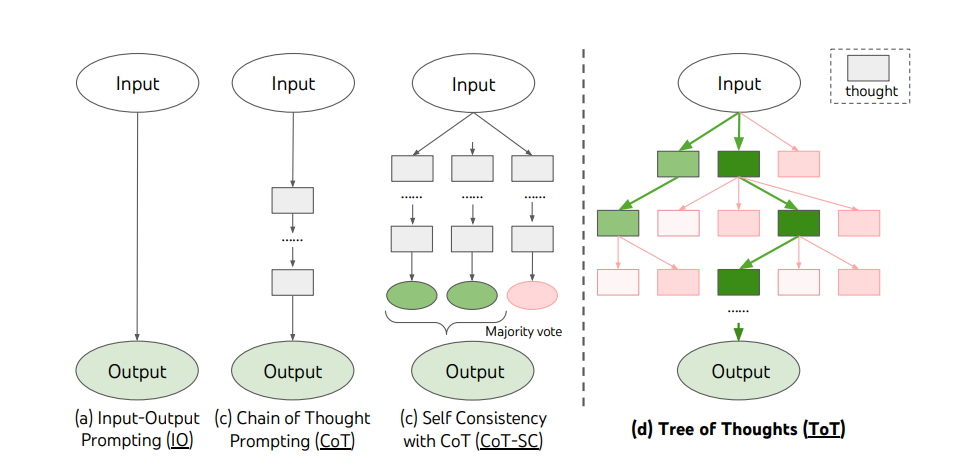

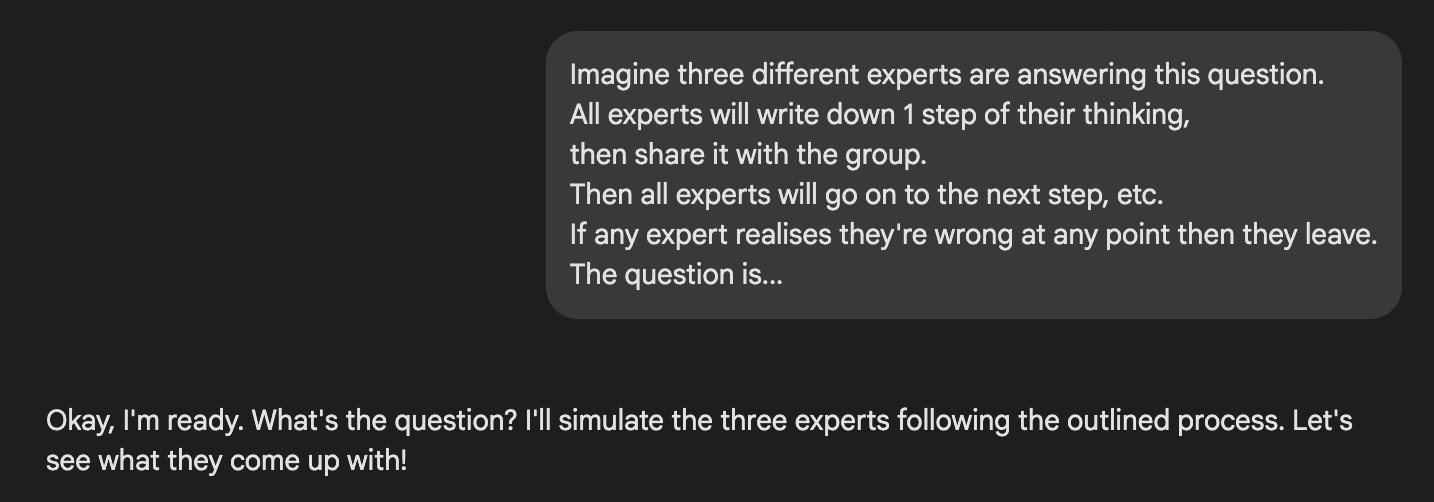

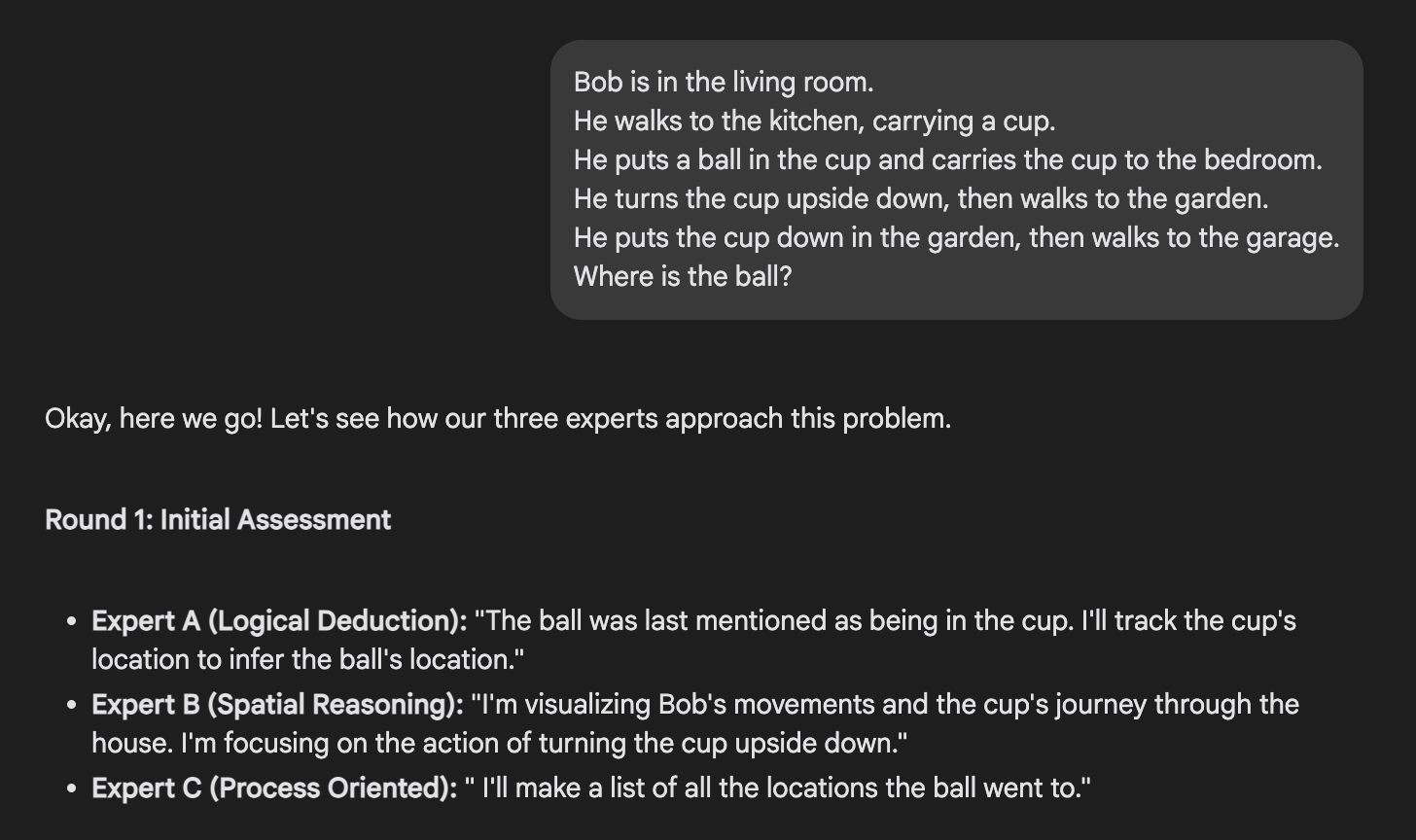

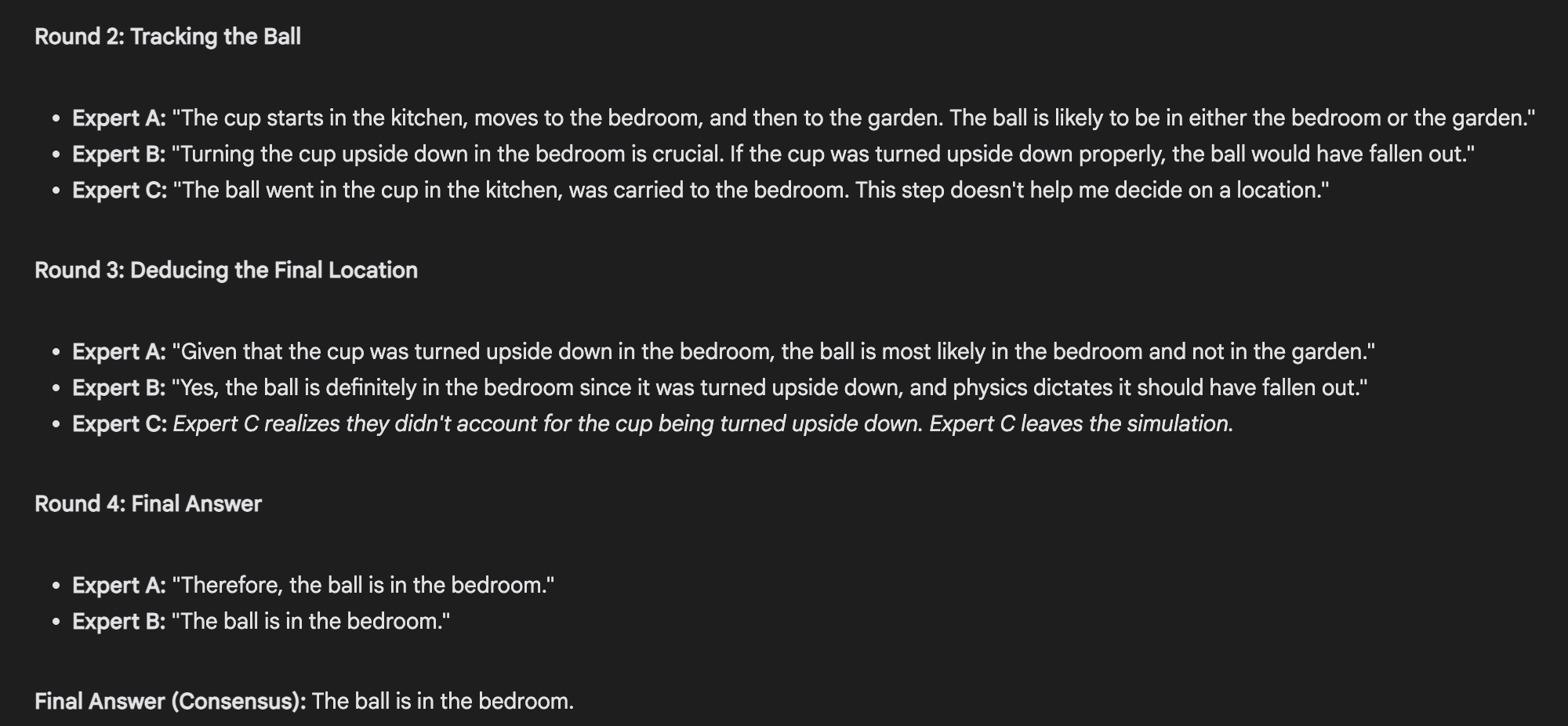

*Last month, I attended an event at Google that was all about AI. It was a two-day workshop where we learned about prompting and AI agents. I only joined the first day, which was an introduction to prompt engineering. The topics from the second day were recorded, though, so even if I wasn't able to join, I could still learn from the recording.*

*I'm writing this post as my takeaways/notes on what I've learned about prompt engineering, based on the event I joined and on this [whitepaper from Kaggle](https://www.kaggle.com/whitepaper-prompt-engineering). I wouldn't say I'm an expert on this subject, so if you find anything that isn't right, please let me know in the comments. That way, I can expand my knowledge and learn from you too.*

---

# What Is Prompt Engineering

Prompt engineering is probably a term you'll stumble upon when you talk about AI. To understand what it means, it helps to first define what a prompt is.

In my understanding, **prompts** are the instructions or text that you feed to an AI model, and in return, the AI generates a response. Good prompts are more likely to produce good results, while bad prompts may produce unwanted results or hallucinations.

Prompt engineering is the process of writing, refining, and testing prompts. It's not just giving an instruction to the AI once: you keep refining the prompt while taking into consideration the model's behavior, the structure of your prompt, and the model settings you use. The goal is to guide the AI to produce high-quality responses.

Often you run each prompt many times (even a hundred or more) and evaluate which version performs best on average. So prompt engineering basically involves a lot of experimentation and trial and error.
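Most of the "settings you set" come down to how the model picks each next word (token) from a probability distribution. As a rough mental model for the Model Settings section, here is a minimal Python sketch of temperature, Top-K and Top-P applied to one made-up distribution. The function name, the example words, and their scores (logits) are my own illustrative assumptions. Real model APIs expose these as request parameters and apply them internally, and the exact order in which they're applied can differ between providers.

```python
import math
import random

def sample_next_token(logits, temperature=1.0, top_k=None, top_p=None, rng=random):
    """Pick one token from a {token: logit} dict (hypothetical helper, illustration only)."""
    if temperature <= 0:
        # Temperature 0 is treated as greedy decoding: always take the most likely token.
        return max(logits, key=logits.get)

    # Temperature scaling: divide logits by T before the softmax.
    # Lower T => sharper distribution (more predictable); higher T => flatter (more varied).
    scaled = {tok: value / temperature for tok, value in logits.items()}
    largest = max(scaled.values())
    exps = {tok: math.exp(v - largest) for tok, v in scaled.items()}
    total = sum(exps.values())
    probs = sorted(((tok, e / total) for tok, e in exps.items()),
                   key=lambda pair: pair[1], reverse=True)

    # Top-K: keep only the K most likely tokens.
    if top_k is not None:
        probs = probs[:top_k]

    # Top-P (nucleus): keep the smallest set of most likely tokens whose cumulative
    # probability reaches P (probabilities here come from the full distribution).
    if top_p is not None:
        kept, cumulative = [], 0.0
        for tok, p in probs:
            kept.append((tok, p))
            cumulative += p
            if cumulative >= top_p:
                break
        probs = kept

    # random.choices normalizes the remaining weights, so they don't need to sum to 1.
    tokens = [tok for tok, _ in probs]
    weights = [p for _, p in probs]
    return rng.choices(tokens, weights=weights, k=1)[0]

# Made-up logits for the word after "The sky is" (illustrative numbers only).
logits = {"blue": 3.0, "clear": 2.0, "cloudy": 1.5, "falling": -1.0}

print(sample_next_token(logits, temperature=0))  # greedy: always "blue"
rng = random.Random(42)
print([sample_next_token(logits, temperature=1.0, top_k=3, rng=rng) for _ in range(5)])
```

With `temperature=0` (or `top_k=1`) the result is always `"blue"`. Raising the temperature, K, or P lets the less likely words through more often, which is where both the "creativity" and the occasional odd answer come from.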
---

# Model Settings

## Output Length

More tokens => longer text => slower response times => higher costs

Setting a max output token limit doesn't make the model write more concisely; it just stops generating once the limit is reached, which can cut the answer off. So sometimes it's better to tell the AI to "keep it short and simple" in the prompt itself rather than relying only on a max output token setting.

```
Write a summary of this post (max 100 words).
```

- Too short = maybe not good, because it may leave out important details
- Too long = maybe not good, because it may include gibberish or repeat the same content

## Temperature

Temperature controls how random (or "creative") the output is.

- For less random answers, a low temperature (0 to 0.3) should be okay. At 0, the model effectively always picks the most likely next word.
- For more varied answers, use a temperature closer to 0.7 to 1. The answers will be a mix of right and wrong, so you won't always get a perfect answer, but you'll get decent ones. This variety is what self-consistency needs; a temperature close to 0 reduces the diversity of the answers, so self-consistency doesn't work well there.

## Top-K / Top-P Sampling

**Top-K**: pick from the K most likely next words

- K = 1 => greedy: always picks the single most likely word (very predictable)
- K = 3 => randomly chooses from the top 3 most likely words
- K = 5 => more varied results

**Top-P**: pick from the smallest set of top words whose combined probability reaches P

- Top-P = 0.9 => samples from the top words that together make up 90% of the probability
- Top-P = 1 => includes every word, so nothing is filtered out (how random it gets then depends on the temperature)
- Top-P = 0.1 => only the few most likely words are considered

| Setting | Effect | Example Scenarios For Usage |
|-|-|-|
| Low Top-K / Low Top-P | more predictable, safer answers | Math, problem solving |
| High Top-K / High Top-P | creative, varied answers | Brainstorming, creative writing |

In combination with temperature:

- Low temperature + Low Top-K / Low Top-P => precise
- High temperature + High Top-K / High Top-P => creative

# Prompting Techniques

## Zero-shot

The simplest type of prompt. Zero-shot means no examples are provided to the AI: you ask, and you get a direct answer.

In this example, I asked the AI to classify a review, and it responded based only on that instruction.

## One-shot and Few-shot

**One-shot** means you provide one example to the AI.
**Few-shot** means you provide multiple examples to the AI. Providing examples guides the AI's response.

The above is a simple example of one-shot prompting. If I gave more examples, it would become few-shot prompting.

You usually use one-shot and/or few-shot when zero-shot fails. When the pattern is easy to follow or has a fixed format, one-shot is usually enough. When the task is more complex or you want variations, it's better to use few-shot.

## Self-Consistency

Basically, it's like a majority vote. You run the same prompt multiple times to get multiple answers, then pick the most common answer as the final one. This helps reduce randomness.

Sample Prompt:

```
I have 10,000 yen in my wallet. A friend borrowed 500 for a drink. I bought 5 donuts for 220 yen each. How much money do I have left?
```

✅ Try #1:

```
You have 8,400 yen left.
```

✅ Try #2:

```
You have 8,400 yen left.
```

❌ Try #3:

```
You have 9,280 yen left.
```

The correct answer is 8,400 yen (10,000 - 500 - 5 × 220 = 8,400). If you run this prompt only once, it may give a wrong answer (a *hallucination*), but since we ran it multiple times, the majority of the results gives us the correct answer.

## Chain of Thought (CoT)

To understand CoT better, we first need to understand standard prompting.

This is an example of standard (direct) prompting. The response is 45, and you'd wonder how it came up with that answer, because if you compute it yourself, you don't arrive at 45. Standard prompting like this gives you an answer quickly, but it's prone to *hallucinations* like this one.

To apply CoT, we tweak the same prompt a bit.

Now the response includes the reasoning and calculations behind it, which makes sense, and it arrives at the correct answer. Notice the difference? Just by changing `Return the answer directly.` to `Let's think step by step.`, I was able to get the answer I was looking for.
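CoT and self-consistency also work well together; self-consistency was originally proposed on top of CoT: send a step-by-step prompt several times at a non-zero temperature, then take a majority vote over the final answers. Here is a minimal Python sketch of that idea using the yen question from the Self-Consistency section. It doesn't call a real model: the `responses` list is hand-written to stand in for sampled outputs, and the helper names and the "last number in the response is the final answer" rule are my own assumptions.

```python
import re
from collections import Counter

QUESTION = ("I have 10,000 yen in my wallet. A friend borrowed 500 for a drink. "
            "I bought 5 donuts for 220 yen each. How much money do I have left?")

def standard_prompt(question):
    # Standard (direct) prompting: ask for the answer only.
    return f"{question}\nReturn the answer directly."

def cot_prompt(question):
    # Chain of Thought: ask the model to reason before answering.
    return f"{question}\nLet's think step by step."

def extract_final_number(response):
    """Treat the last number in a response as its final answer (a simple heuristic)."""
    numbers = re.findall(r"\d[\d,]*", response)
    return int(numbers[-1].replace(",", "")) if numbers else None

def self_consistency(responses):
    """Majority vote over the final answers; returns (answer, number_of_votes)."""
    answers = [a for a in map(extract_final_number, responses) if a is not None]
    answer, votes = Counter(answers).most_common(1)[0]
    return answer, votes

# Hypothetical responses, as if the CoT prompt had been sampled 3 times.
responses = [
    "Start with 10,000. After lending 500 you have 9,500. 5 donuts cost 1,100, so 9,500 - 1,100 = 8,400.",
    "Donuts: 5 x 220 = 1,100. Total spent: 500 + 1,100 = 1,600. 10,000 - 1,600 = 8,400.",
    "10,000 - 500 = 9,500. Then 9,500 - 220 = 9,280.",
]

print(cot_prompt(QUESTION))
print(self_consistency(responses))  # (8400, 2)
```

In practice, `responses` would come from calling your model several times with `cot_prompt(QUESTION)`; the vote itself stays the same. The third response shows a typical slip (subtracting one donut instead of five), which the majority vote outvotes.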
Although CoT increases token usage, the quality of the response is better than with standard prompting. It can reduce *hallucinations*, but it doesn't eliminate them completely.

## Tree of Thoughts (ToT)

ToT is like an advanced version of CoT: instead of following a single chain of reasoning, the model explores many possible reasoning paths in parallel. The image below best explains what ToT means.

<sub>source: https://arxiv.org/pdf/2305.10601</sub>

It's useful for very complex tasks like planning, brainstorming, creative writing, and puzzles or riddles.

I tried running the example prompt from this [GitHub repository](https://github.com/dave1010/tree-of-thought-prompting).

## ReAct (Reason + Act)

ReAct lets the AI think, perform an action, observe the result, and repeat (now with the new information from the previous round) until it reaches an output.

```
Thought 1 -> Action 1 -> Observation 1 -> Thought 2 -> Action 2 -> Observation 2 -> … -> Output
```

Actions could involve searching the web, calling APIs, running code, or anything else. This is useful for AI agents, assistants, or any multi-step task.

This is a simplified example generated by ChatGPT; in reality, it can get complicated.

```
Question: How many books did author X write?
Thought: I need to find how many books Author X has written.
Action: Search “books by Author X”
Observation: Author X wrote 12 books.
Thought: Got it.
Final Answer: 12
```

We can also give the AI tools to use when performing actions.
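To make the Thought -> Action -> Observation cycle more concrete, here's a minimal Python sketch of a ReAct-style loop for the books example. Everything in it is a stand-in: `scripted_model` replaces a real LLM with hard-coded steps, `search` looks things up in a tiny dictionary instead of the web, and the `Action: Tool["input"]` syntax is just a format I picked so the loop can parse actions. The part that matters is the loop itself: ask the model for the next step, run the requested tool, append the observation, and repeat until there's a final answer.

```python
import re

# Hypothetical tool: a fake "search" backed by a tiny dictionary instead of the web.
FAKE_SEARCH_INDEX = {"books by Author X": "Author X wrote 12 books."}

def search(query):
    return FAKE_SEARCH_INDEX.get(query, "No results found.")

TOOLS = {"Search": search}

def scripted_model(transcript):
    """Stand-in for an LLM: returns the next Thought/Action or the Final Answer.

    A real agent would send the whole transcript to a model; here the steps are hard-coded.
    """
    if "Observation:" not in transcript:
        return ('Thought: I need to find how many books Author X has written.\n'
                'Action: Search["books by Author X"]')
    return "Thought: Got it.\nFinal Answer: 12"

ACTION_RE = re.compile(r'Action: (\w+)\["(.*)"\]')

def react(question, model, tools, max_steps=5):
    transcript = f"Question: {question}"
    for _ in range(max_steps):
        step = model(transcript)              # Thought (+ Action or Final Answer)
        transcript += "\n" + step
        if "Final Answer:" in step:
            return step.split("Final Answer:", 1)[1].strip(), transcript
        match = ACTION_RE.search(step)
        if match is None:
            break                             # the model neither acted nor answered
        tool_name, tool_input = match.groups()
        observation = tools[tool_name](tool_input)   # Act
        transcript += f"\nObservation: {observation}"  # Observe, then loop again
    return None, transcript

answer, transcript = react("How many books did author X write?", scripted_model, TOOLS)
print(transcript)
print(answer)  # 12
```

The `max_steps` cap is a design choice worth keeping even in a real agent: a model might never produce a final answer, and an uncapped loop would keep calling tools forever.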
---

# Conclusion

- Prompting is an iterative process of refining and testing
- Try running each prompt many times (even a hundred) to evaluate which version is better
- Start simple (zero-shot), then move on to more complex techniques as needed
- Be specific and clear, with no room for ambiguity, when writing prompts
- Instructions over constraints: tell the model what to do rather than what not to do
- Put your most important instructions at the beginning or at the end of your prompt, not in the middle
- Use imperative expressions when writing prompts (e.g. `You MUST use the code execution tool to generate and execute the code.`)
- Use prompt optimizer tools to improve prompts
- Experiment and document => the 2 keys to mastering prompt engineering

---

Thanks for reading! See you around!

じゃあ、またね! (Well then, see you!)

<sub> All images are screenshots from the [Kaggle notebook](https://www.kaggle.com/code/markishere/day-1-prompting) and Google AI Studio (using Gemini) unless stated otherwise. Most prompts are from the examples in the Kaggle notebooks (Day 1 lab). All information here comes from my notes and takeaways from the seminar and from online articles like the [Prompt Engineering Guide](https://www.promptingguide.ai/). </sub>

| author | wittythedev |

|---|---|

| permlink | learning-prompt-engineering |

| category | hive-196387 |

| json_metadata | "{"app":"peakd/2025.7.1","format":"markdown","description":"Just my notes on what I've learned about prompt engineering","tags":["promptengineering","ai","prompting","programming","development","pob","stemsocial","creativecoin","wittythedev"],"users":[],"image":["https://files.peakd.com/file/peakd-hive/wittythedev/23xVP1vx6j4w9k9LRbV96ArKvSLe3M6w4NCXtGMJ2P7pREv9FE378KoiFj5Mn45uf6jFf.jpg","https://files.peakd.com/file/peakd-hive/wittythedev/23u6WumPLLwnHDowH2pY1HEHfjdY4ymVef46JfXG4oZt6asUKDKbj46iq13S7c8U5u7py.png","https://files.peakd.com/file/peakd-hive/wittythedev/23t76bthUzQmxAs9B7J9pU6p4n5XnmKDZm3wJzjq9kqVMQhKZTE7WVnu9rx2We5WsvUoa.png","https://files.peakd.com/file/peakd-hive/wittythedev/23uFueZwSDwkhdYCAtgzXCjReNgZKJUDnoYJV7gvTdSYfjpP9tgU2nNisBL3sCAKgdXkm.png","https://files.peakd.com/file/peakd-hive/wittythedev/23tSwpGL8DD6Jds3kGoxcrwFyoYs4LFHLupueqrSXjzyYETpXsWZAh3W15qYHJw2SP886.png","https://files.peakd.com/file/peakd-hive/wittythedev/23uFwWZh4moYyoNwi75nXuJVSN1W17SDzc9kNbUEJKmxLV2rMM32ho1byuf2d4UsxEZMz.png","https://files.peakd.com/file/peakd-hive/wittythedev/Eo1wrX2WWvWMa7XMZSa2tm7owNNEzQddtGEu6AcGrNbDS8E927Jt7UdmHiCqXq88ULP.png","https://files.peakd.com/file/peakd-hive/wittythedev/23t7AySwBrrxnZJjbYziH9AjbRWCuud4a5Kyc2YkQdQ4iCFTuAiUAy77jixBWuFDFRF3d.png","https://files.peakd.com/file/peakd-hive/wittythedev/23tGXuSR7vdwJ3VghHYkEx4mwsajUWjrqBDZqdTjLkTckq1mZVUNhfC2GWfxm4PgA2Ytr.png"]}" |

| created | 2025-07-09 14:28:27 |

| last_update | 2025-07-09 14:28:27 |

| depth | 0 |

| children | 2 |

| last_payout | 1969-12-31 23:59:59 |

| cashout_time | 2025-07-16 14:28:27 |

| total_payout_value | 0.000 HBD |

| curator_payout_value | 0.000 HBD |

| pending_payout_value | 1.720 HBD |

| promoted | 0.000 HBD |

| body_length | 9,744 |

| author_reputation | 1,357,239,420,868 |

| root_title | "Learning Prompt Engineering" |

| beneficiaries | [] |

| max_accepted_payout | 1,000,000.000 HBD |

| percent_hbd | 10,000 |

| post_id | 143,895,573 |

| net_rshares | 5,487,475,378,875 |

| author_curate_reward | "" |

